This past weekend I attended my third 365 Saturday, this time in Barcelona, as a speaker. As you can see in the post title, my session was about creating inventory counting journals using AI Builder with the Power Platform.

The event was great, but my session left me with a bittersweet feeling because I wasn’t able to show the full app functionality due to stupid technical issues (stupid, but my fault) that I solved in less than two minutes after the session.

Anyway, thanks to all the people that came to my session and I’m sorry for that. Thanks to the organizers too, as well as the rest of the speakers.

Counting with AI

So… what was my session about? Nothing original at all. If you’ve seen the 2019 MBAS opening keynote, you’ll remember the part about a Pepsi distributor that was using AI Builder to scan their store displays and analyze how sales were performing (more or less). My PowerApp uses AI Builder to count objects (you’ll see which objects later) and, with that count, creates an inventory counting journal in Dynamics 365 for Whatever-you-know-the-ERP.

But in the end, my main intention with the session was to show that we can use the whole Power Platform with MSDyn365FO, not only Power BI, and that it can help in our projects. Because in the AX world we’re sometimes like:

AI Builder

AI Builder is a tool for the Power Platform which adds AI functionality to PowerApps and Flow. And it’s really really really simple to set up and use.

Right now AI Builder consists of 4 different models:

- Prediction: answers binary questions like “Will the customer renew the subscription?” or “Which customer will not pay on time?”.

- Text classification: data extraction from texts. You get a sentiment % as an answer, 95% Good, 76% Quick, etc.

- Form processing: data extraction in key-value pairs. Like getting info from an invoice or document (it must always be the same invoice or document).

- Object detection: detects objects in images. That’s the model I used.

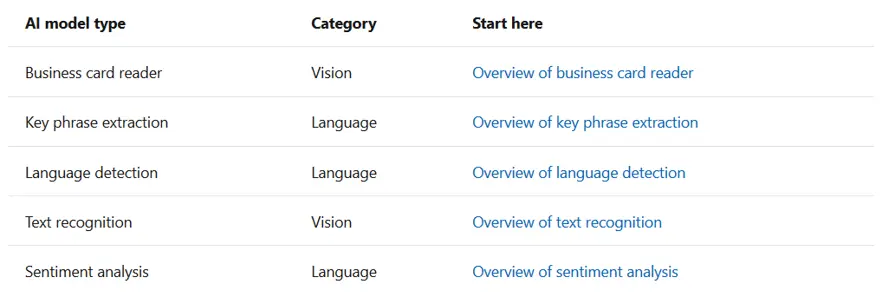

Of these four models only the prediction one is in GA, while the others are in preview. There are also 5 pre-trained models available:

If you want to know more about AI Builder, there’s a hands-on lab with all the resources needed to create your app using any of the four models.

Also, if you need a PowerApps environment, sign up for the PowerApps Community Plan to get a free environment where you’ll be able to test Flow and PowerApps (and the CDS). If you haven’t signed up yet, now is the right time to do it (any time would be right).

AI 101

To explain how this works, first I need to explain some AI and ML basics. But really basic, as basic as possible, so that I could explain it in front of an audience. If you want to see this explained better, watch this Channel 9 video about models; it’s where I learnt everything I know.

In classic development, when you solve a problem you basically put input data and a function you wrote through a process and get a result as the output. The equivalent in machine learning is that you put input data and the known solutions through a process, and what you get is a function that can solve the kind of problem those solutions belong to. This function is your ML model.
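The difference can be sketched in a few lines of Python. This is only a toy illustration with made-up names (`double_plus_one`, `fit_line`) and a plain least-squares line fit; it says nothing about how AI Builder trains its models internally.

```python
# Classic development: we write the function ourselves.
def double_plus_one(x: float) -> float:
    return 2 * x + 1


inputs = [0.0, 1.0, 2.0, 3.0]
# input + function -> output
outputs = [double_plus_one(x) for x in inputs]


# Machine learning: we hand over inputs and known answers and get a function back.
def fit_line(xs, ys):
    """Least-squares fit of y = a*x + b; returns the learned function."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    a = covariance / variance
    b = mean_y - a * mean_x
    return lambda x: a * x + b


# input + answers -> function (the "model")
model = fit_line(inputs, outputs)
print(model(10.0))  # 21.0, the same as double_plus_one(10.0)
```

The model was never told the rule "2x + 1"; it recovered it from the examples, which is the whole idea.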

What else do you need to know about models? Well, basically that the more data you feed the model, and the more varied it is, the better the answers you’ll get. In AI Builder’s case, the object detection model asks for a minimum of 15 images. With 15 images you get a shitty model: it will detect the object you’re trying to detect, but it will also detect almost anything else as your object because the sample is too small.

The PatatApp

This is my app’s name, a joke using the Spanish name for Potato (Patata) and App.

Why this name? Well, I’m actually counting potatoes with the app. Why potatoes? Because I love them, because they’re versatile (you can make omelette, fries, vodka, etc.) and because counting pallets is BOOOORING.

What my PowerApp does is detect potatoes in an image. Then I can choose between using an existing journal or creating a new one, then select an item, fill in its inventory dimensions and finally create the line in that journal in AX. I’ve made a short video showing it.

Simple, right? I detect 3 potatoes using AI Builder, then select a legal entity, create a new journal and select an item with its dimensions. Finally the line is created in the journal and it can be seen in MSDyn365FO.

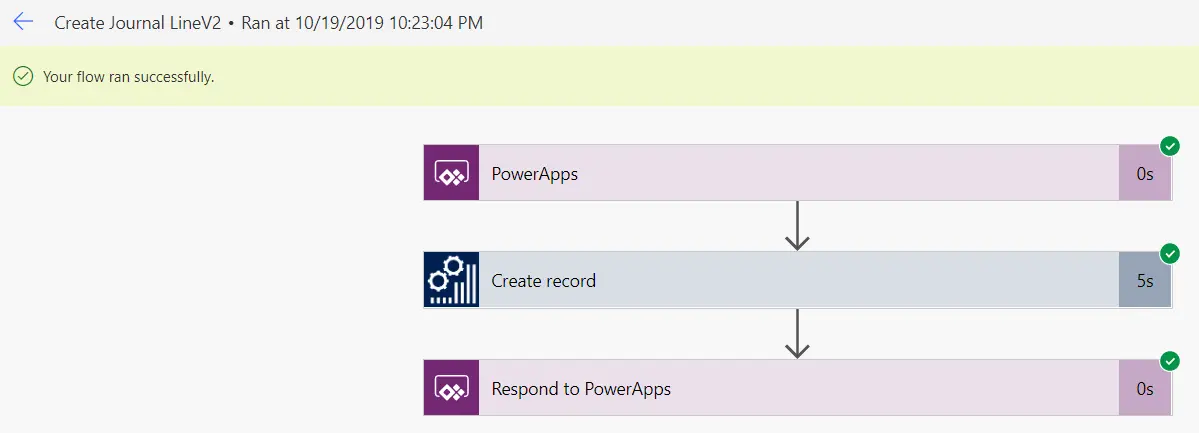

No sorcery or magic at all. (Oh, I hate the “magic” thing when speaking about development, or anything, because it makes it look like it’s been done with no effort. End of my rant). To create the journal header and the line I’m using two Flows that get the data from the PowerApp and create it in Dynamics 365:

As you can see it’s a really simple app. I had a first working version in four hours; with AI Builder and the Flows it’s really quick.
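The step from “objects detected” to “journal line” can be sketched in Python. Everything here is hypothetical and only for illustration: the `Detection` shape, the confidence threshold, and the field names in the payload are my assumptions, not the real AI Builder output or the real Dynamics 365 entity fields, and the actual app does this with PowerApps and Flow rather than code.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str         # tag the model assigned, e.g. "potato"
    confidence: float  # 0.0 to 1.0


def count_objects(detections, label, min_confidence=0.5):
    """Count detections of one label above an (assumed) confidence threshold."""
    return sum(
        1 for d in detections
        if d.label == label and d.confidence >= min_confidence
    )


def build_journal_line(journal_id, item_id, dimensions, counted_qty):
    """Build the data a Flow would receive. Field names are hypothetical."""
    return {
        "journalId": journal_id,
        "itemId": item_id,
        **dimensions,
        "countedQuantity": counted_qty,
    }


# Made-up detections for one photo
detections = [
    Detection("potato", 0.93),
    Detection("potato", 0.88),
    Detection("potato", 0.71),
    Detection("potato", 0.22),  # too unsure, ignored
]

qty = count_objects(detections, "potato")
line = build_journal_line("JOURNAL-001", "POTATO", {"site": "1", "warehouse": "11"}, qty)
print(line["countedQuantity"])  # 3
```

The only real logic is the count; the rest is moving values from the screen into the journal line.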

Shitty model vs. Not-so-shitty model

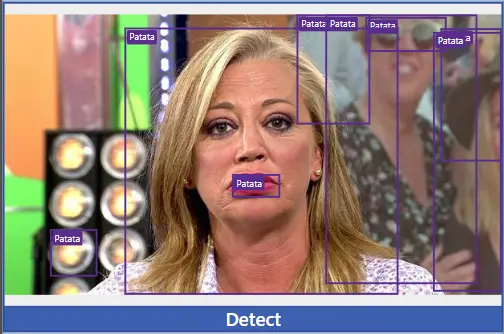

I want to end with real facts and important data. Remember the minimum number of images AI Builder asks for? It’s 15. This is what happens when your model is trained on 20 images of lonely potatoes:

If your model sucks you will still detect all the potatoes in an image, but literally everything will be a potato.
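A common way to put numbers on this is precision (how many of the detections are really potatoes) and recall (how many of the real potatoes were detected). The sketch below uses made-up numbers, not measurements from my models, just to show why “detects every potato” is not enough on its own.

```python
def precision_recall(true_positives, false_positives, false_negatives):
    """Return (precision, recall) from raw detection counts."""
    detected = true_positives + false_positives
    actual = true_positives + false_negatives
    precision = true_positives / detected if detected else 0.0
    recall = true_positives / actual if actual else 0.0
    return precision, recall


# Illustrative only: a photo with 3 real potatoes where the model
# flags 10 things as potatoes, including all 3 real ones.
precision, recall = precision_recall(
    true_positives=3, false_positives=7, false_negatives=0
)
print(recall)     # 1.0 -> every potato was found
print(precision)  # 0.3 -> but most "potatoes" are not potatoes
```

For an inventory count, low precision is the real problem, because every false potato ends up in the counted quantity.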

I then trained a second version of the model with 40 images of potatoes with people, cats, other vegetables, etc. The result is much better, and it still detects potatoes:

I want to thank cazapelusas for drawing all the lovely potatoes and redesigning the PowerApp. You should have seen V1. Please adopt a graphic designer; your life will be prettier.

No potatoes were harmed during the making of this PowerApp.