You can read my complete guide on Microsoft Dynamics 365 for Finance & Operations and Azure DevOps.

I talked about the LCS Database Movement API in a post not long ago, and in this one I’ll show how to call the API using PowerShell from your Azure DevOps Pipelines.

What for?

Basically, automation. Right now the API only allows the refresh from one Microsoft Dynamics 365 for Finance and Operations environment to another, so the idea is having fresh data from production in our UAT environments daily. I don’t know which new operations the API will support in the future but another idea could be adding the DB export operation (creating a bacpac) to the pipeline and having a copy of prod ready to be restored in a Dev environment.

Don’t forget that the API has a limit of 3 refresh operations per environment per 24 hours. Don’t do this on a CI build! (it makes no sense either). Probably the best idea is to run this nightly with all your tests, once a day.

All these operations/calls can also be done using Mötz Jensen’s d365fo.tools, which already supports the LCS DB API. But if you’re using a Microsoft-hosted Azure agent instead of a self-hosted one (like the Build VM) it’s not possible to install them. Or at least I haven’t found a way 🙂

EDIT: well thanks to Mötz’s comment pointing me to how to add d365fo.tools to a hosted pipeline I’ve edited the post, just go to the end to see how to use them in your pipeline!

Calling the API

I’ll use PowerShell to call the API from a pipeline. PowerShell has a cmdlet called Invoke-RestMethod that makes HTTP/HTTPS requests. It’s really easy: we just need to make the same calls we made in my earlier post about the API.

Getting the token

    # LCS project Id (used later in the refresh URL)
    $projectId = "1234567"
    $tokenUrl = "https://login.microsoftonline.com/common/oauth2/token"

    # AAD app registration and user credentials (placeholders, replace with yours)
    $clientId = "12345678-abcd-432a-0666-22de4c4321aa"
    $clientSecret = "superSeCrEt12345678"
    $username = "youruser@tenant.com"
    $password = "strongerThan123456"

    # Body of the token request (password grant against the LCS API resource)
    $tokenBody = @{
        grant_type    = "password"
        client_id     = $clientId
        client_secret = $clientSecret
        resource      = "https://lcsapi.lcs.dynamics.com"
        username      = $username
        password      = $password
    }

    # Request the token and read it from the JSON response
    $tokenResponse = Invoke-RestMethod -Method 'POST' -Uri $tokenUrl -Body $tokenBody
    $token = $tokenResponse.access_token

We’ll use this script to get the token. Just replace the variable values with those of your project, AAD app registration, user (remember it needs access to the preview) and password, and run it. If everything is OK you’ll get the JSON response in the $tokenResponse variable, and from there you can read the token’s value using dot notation ($tokenResponse.access_token).
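Hard-coding the client secret and the password in the script is fine for a quick test but not great for a pipeline. Here’s a minimal sketch of one way around it, assuming you’ve created secret pipeline variables and mapped them to environment variables on the PowerShell task (Azure DevOps doesn’t expose secret variables to scripts automatically). The helper functions and the variable names (LCS_CLIENT_ID and so on) are hypothetical, they’re just names I made up for the example.

```powershell
# Hypothetical helper: returns the value of an environment variable,
# or throws when it is missing or empty so the pipeline step fails early.
function Get-RequiredEnvValue {
    param(
        [Parameter(Mandatory = $true)]
        [string]$Name
    )

    $value = [System.Environment]::GetEnvironmentVariable($Name)
    if ([string]::IsNullOrWhiteSpace($value)) {
        throw "Environment variable '$Name' is not set."
    }
    return $value
}

# Hypothetical helper: builds the same token request body as the script above.
function New-LcsTokenBody {
    param(
        [Parameter(Mandatory = $true)][string]$ClientId,
        [Parameter(Mandatory = $true)][string]$ClientSecret,
        [Parameter(Mandatory = $true)][string]$Username,
        [Parameter(Mandatory = $true)][string]$Password
    )

    return @{
        grant_type    = "password"
        client_id     = $ClientId
        client_secret = $ClientSecret
        resource      = "https://lcsapi.lcs.dynamics.com"
        username      = $Username
        password      = $Password
    }
}

# Usage inside the pipeline task (assumed environment variable names):
# $tokenBody = New-LcsTokenBody `
#     -ClientId     (Get-RequiredEnvValue -Name 'LCS_CLIENT_ID') `
#     -ClientSecret (Get-RequiredEnvValue -Name 'LCS_CLIENT_SECRET') `
#     -Username     (Get-RequiredEnvValue -Name 'LCS_USERNAME') `
#     -Password     (Get-RequiredEnvValue -Name 'LCS_PASSWORD')
# $tokenResponse = Invoke-RestMethod -Method 'POST' -Uri $tokenUrl -Body $tokenBody
```

This way the script contains no credentials, and a missing variable stops the step with a clear message instead of a confusing authentication error.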

Requesting the DB refresh

    # LCS project and the environments to refresh from (source) and to (target)
    $projectId = "1234567"
    $sourceEnvironmentId = "fad26410-03cd-4c3e-89b8-85d2bddc4933"
    $targetEnvironmentId = "cab68410-cd13-9e48-12a3-32d585aaa548"

    $refreshUrl = "https://lcsapi.lcs.dynamics.com/databasemovement/v1/databases/project/$projectId/source/$sourceEnvironmentId/target/$targetEnvironmentId"

    # The token obtained in the previous step goes in the Authorization header
    $refreshHeader = @{
        Authorization  = "Bearer $token"
        "x-ms-version" = '2017-09-15'
        "Content-Type" = "application/json"
    }

    # Trigger the refresh
    $refreshResponse = Invoke-RestMethod -Uri $refreshUrl -Method 'POST' -Headers $refreshHeader

This will be the call to trigger the refresh. We’ll need the token we’ve just obtained in the first step to use it in the header and the source and target environment Ids.

If it’s successful the response will be a 200 OK.
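If the call doesn’t succeed you probably want the pipeline step to fail visibly. Below is a rough sketch of how that could look. Invoke-RestMethod throws on non-success HTTP status codes, so a try/catch is enough for those. The Invoke-LcsRequest helper is hypothetical, and the IsSuccess and ErrorMessage properties are my assumption about the shape of the response body, so check them against the response you actually get.

```powershell
# Hypothetical helper: runs a request script block, logs failures as
# Azure DevOps errors and rethrows so the pipeline step fails.
function Invoke-LcsRequest {
    param(
        [Parameter(Mandatory = $true)]
        [scriptblock]$Request
    )

    try {
        $response = & $Request
    }
    catch {
        # "##vso[task.logissue ...]" is an Azure DevOps logging command.
        Write-Host "##vso[task.logissue type=error]LCS request failed: $($_.Exception.Message)"
        throw
    }

    # Assumption: the response body has an IsSuccess flag and an ErrorMessage.
    if ($null -ne $response -and
        $response.PSObject.Properties['IsSuccess'] -and
        -not $response.IsSuccess) {
        throw "LCS reported a failure: $($response.ErrorMessage)"
    }

    return $response
}

# Usage with the refresh call from above:
# $refreshResponse = Invoke-LcsRequest -Request {
#     Invoke-RestMethod -Uri $refreshUrl -Method 'POST' -Headers $refreshHeader
# }
```

Passing the call as a script block keeps the helper independent of the URL and headers, so the same wrapper works for the token request too.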

Add it to your pipeline

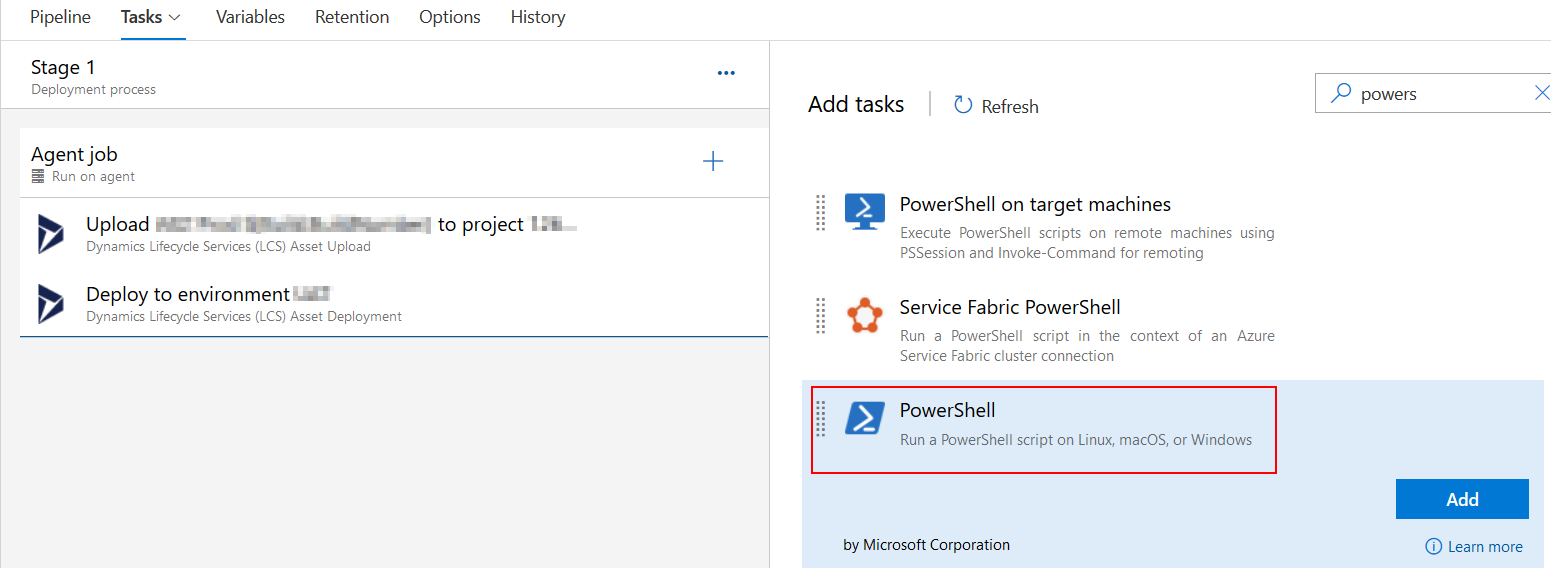

Adding this to an Azure DevOps pipeline is no mystery. Select and edit your pipeline (I’m doing it on a nightly build, which is called continuous but isn’t…) that runs after the environment has been updated with code, and add a new PowerShell task:

Select the task and change it to “Inline”:

Then just paste the script we’ve created in the Script field and done! You’ll get a refresh after the tests!

You can also run this on your release pipeline BUT if you do it after the deploy step remember to mark the “Wait for Completion” option or the operation will fail because the environment will already be servicing! And even then it could fail if the servicing goes over the timeout time. So… don’t run this on your release pipeline!

And that’s all. Let’s see which new operations will be added to the API and what we can do with them.

Update: Use d365fo.tools in your Azure Pipeline

Thanks to Mötz’s comment pointing me to how to add d365fo.tools to a hosted pipeline I’ve created a pipeline which will install the tools and run the commands. It’s even easier to do than with the Invoke-RestMethod.

But first…

Make sure that in your Azure Active Directory app registration you’ve selected “Treat application as a public client” under Authentication:

The task

First we need to install d365fo.tools and then we can use its commands to call the LCS API:

    Install-PackageProvider nuget -Scope CurrentUser -Force -Confirm:$false
    Install-Module -Name AZ -AllowClobber -Scope CurrentUser -Force -Confirm:$false -SkipPublisherCheck
    Install-Module -Name d365fo.tools -AllowClobber -Scope CurrentUser -Force -Confirm:$false

    Get-D365LcsApiToken -ClientId "{YOUR_APP_ID}" -Username "{USERNAME}" -Password "{PASSWORD}" -LcsApiUri "https://lcsapi.lcs.dynamics.com" -Verbose | Set-D365LcsApiConfig -ProjectId 1234567

    Invoke-D365LcsDatabaseRefresh -SourceEnvironmentId "958ae597-f089-4811-abbd-c1190917eaae" -TargetEnvironmentId "13cc7700-c13b-4ea3-81cd-2d26fa72ec5e" -SkipInitialStatusFetch

As you can see, it’s a bit easier to do the refresh using d365fo.tools. We get the token and pipe the output to the Set-D365LcsApiConfig command, which stores the token (and other settings). This also saves us from duplicating AppIds, users, etc., and to call the refresh operation we just need the source and target environment Ids!

15 Comments

Hi,

There is just a little mistake in the “Getting the token” PS script :

$clientSecret and not $clientSecert

Best Regards

Thanks Rabih! I’ve updated the post and fixed the typo!

Hi,

Instead of

    $refreshResponse = Invoke-RestMethod $listUrl -Method 'POST' -Headers $refreshHeader

it should be:

    $refreshResponse = Invoke-RestMethod $refreshUrl -Method 'POST' -Headers $refreshHeader

Best Regards

Thanks again, that’s what happens when I change the code I got in PowerShell in the WordPress editor, and then I don’t proofread the post.

I believe that these commands should be enough for you to be able to install the #d365fo.tools during your pipeline execution:

    Install-PackageProvider nuget -Scope CurrentUser -Force -Confirm:$false
    Install-Module -Name AZ -AllowClobber -Scope CurrentUser -Force -Confirm:$False -SkipPublisherCheck
    Install-Module -Name d365fo.tools -Scope CurrentUser -Force -Confirm:$false

Thanks, Mötz! I’ll update the post with this info!

I’m running into the following issue upon requesting the token:

    "error": "invalid_grant",
    "error_description": "AADSTS65001: The user or administrator has not consented to use the application with ID ‘…’ named ‘…’. Send an interactive authorization request for this user and resource,
    "error_codes": [
        65001
    ],

I’ve created an App registration in Azure and added the “Dynamics Lifecycle services” permission. As far as I can tell this permission doesn’t require consent of an Azure AD admin..? Or does it?

Do I still need to be a part of the preview (insiders) to make use of the LCS API?

Yes! You still need to join the preview with the user you’ll be using and the LCS project you want to use the API with.

Can we export PROD database directly to LCS using JSON:

POST /databasemovement/v1/export/project/{projectId}/environment/{environmentId}/backupName/{backupName}