I’m back with additional information about Azure API Management! More Azure content, and I’ll probably continue to produce posts regarding Azure in the future.

I believe there are numerous ways to learn new things, and for me, two of them are writing blog posts and using new technologies to solve problems at work. Of course, my goal is to attempt to apply the Azure themes I write about to Dynamics 365.

Today, I’m presenting an architecture approach for integrations, leveraging API Management and various other Azure components, for Dynamics 365 or anything else that has an endpoint.

You can read the second part of this post: IaC with Bicep: deploy Azure API Management Architecture.

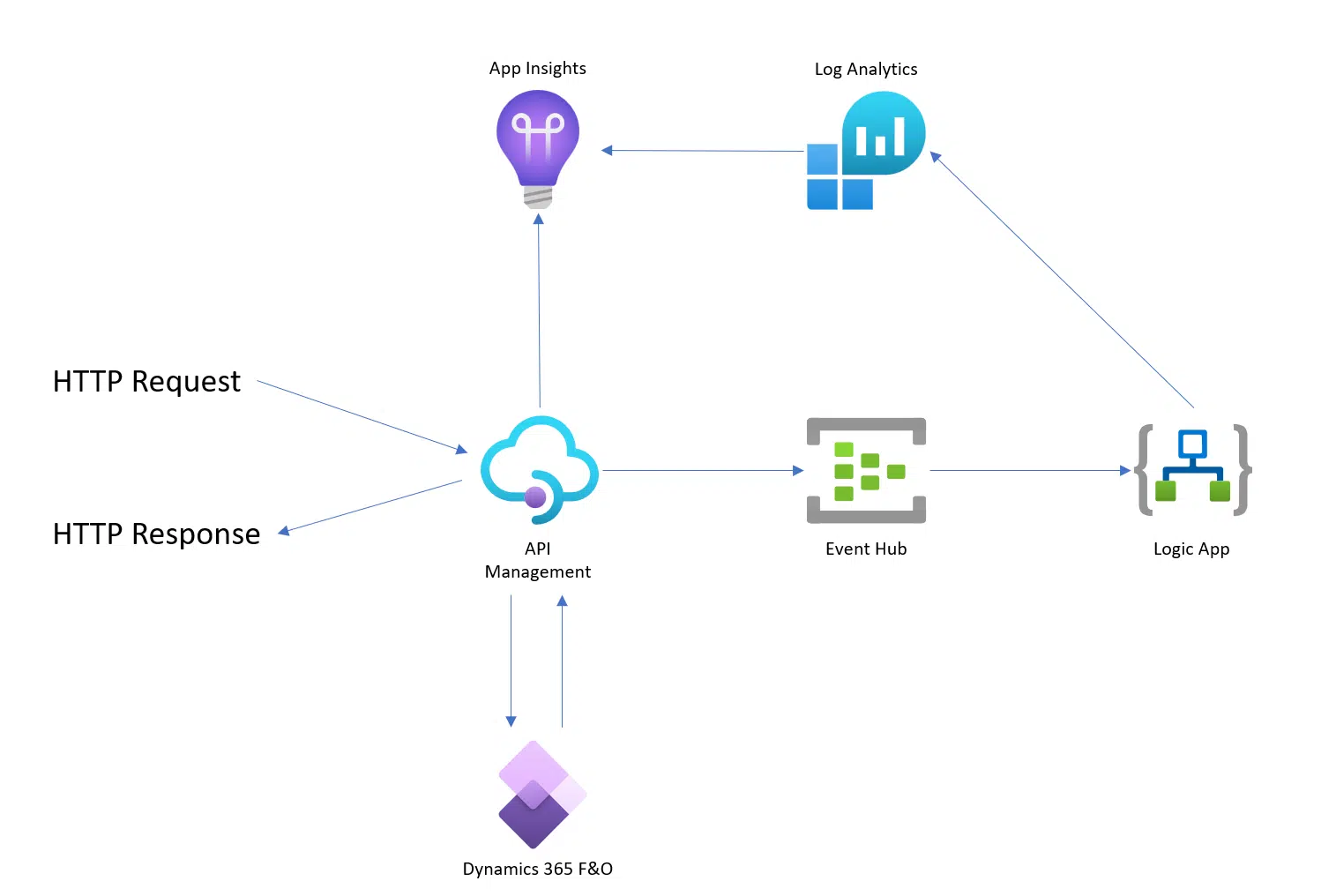

The APIM Architecture

Let’s get to work and let me show you a diagram of what I have in mind, and I’ll explain everything in more detail later:

So my idea is to put an API Management instance in front of Dynamics 365 Finance and Operations, or whatever you’re using, and log all the requests made to the APIM and the responses it returns to an Event Hub as JSON.

Let’s take a look at the individual components.

Azure API Management

This will be the public-facing API endpoint that will connect to our real backend, which in the diagram’s case is Dynamics 365 F&O.

One of the benefits of the APIM is that it can handle the OAuth authentication flow against Dynamics 365 itself, so callers can use, for example, an API key instead.

Additionally, we can create mock APIs, modify requests and responses before they get to and from the backend, and define several other rules in the policies.

Event Hub

The Event Hub is an event ingestion service, and we’ll use it to log the requests and responses of the API Management.

I’m using an Event Hub because… I haven’t been able to get the APIM requests and responses into a Log Analytics Workspace otherwise.

Log Analytics

Log Analytics is the tool we’ll use to collect the data from the Event Hub and analyze it.

Application Insights

And obviously App Insights is the next component. It’s connected to the APIM and will collect data about the performance of the APIM, and we can also use it to query the logs from Log Analytics.

Logic App

Because the Event Hub is not meant for viewing data, we’ll be using a Logic App to get the logs from the Event Hub into Log Analytics.

But… all of this… what for?

I just want you all to SPEND MORE MONEY ON AZURE!!!

I’m joking, and as we’ll see later, the monthly cost of all the resources will be very low.

I already talked about APIM before and said that I thought it was a good idea to put an APIM in front of our Dynamics 365 endpoints because it gives us several features that can be really helpful.

And before going into more details, a small…

This kind of thing, adding an extra layer in front of a Dynamics 365 instance or any other system, has to be justified. If you don’t have many integrations, or they’re not critical, this might not make sense. It’s always up to you, depending on the project’s requirements, to decide whether to use it.

Really, what for?

The reason for building this is to get insights and data about the usage of your APIs by capturing the requests made to the system and the responses it returns.

And in a scenario with many integrations and several external systems accessing data in the ERP, it’s a good idea to have a log of what’s happening. And it’s an even better idea to keep it outside Dynamics 365 🙂

The API

To demonstrate this, I’ve created a calculator API using an Azure Function, and the APIM will be the frontend of that API.

In the APIM I’ve set a policy to use the Event Hub as a logger:

    <policies>
        <inbound>
            <base />
            <log-to-eventhub logger-id="aristedotinfoapim-logger">@{
                if (context.Request != null)
                {
                    // Read the request body without consuming it, so the backend still receives it.
                    var bodyRequest = context.Request.Body.As<string>(preserveContent: true);
                    var ret = string.Format("{{'Direction' : '{0}', 'DateTime' : '{1}', 'ServiceName' : '{2}', 'RequestId' : '{3}', 'IpAddress' : '{4}', 'OperationName' : '{5}', 'Body' : '{6}' }}", "Inbound", DateTime.UtcNow, context.Deployment.ServiceName, context.RequestId, context.Request.IpAddress, context.Operation.Name, bodyRequest);
                    return ret;
                }
                else
                {
                    return "INBOUND EMPTY";
                }
            }</log-to-eventhub>
        </inbound>
        <backend>
            <base />
        </backend>
        <outbound>
            <base />
            <log-to-eventhub logger-id="aristedotinfoapim-logger">@{
                // Check the response here (not the request) before reading its body.
                if (context.Response != null)
                {
                    var bodyResponse = context.Response.Body.As<string>(preserveContent: true);
                    var ret = string.Format("{{'Direction' : '{0}', 'DateTime' : '{1}', 'ServiceName' : '{2}', 'RequestId' : '{3}', 'IpAddress' : '{4}', 'OperationName' : '{5}', 'Body' : '{6}' }}", "Outbound", DateTime.UtcNow, context.Deployment.ServiceName, context.RequestId, context.Request.IpAddress, context.Operation.Name, bodyResponse);
                    return ret;
                }
                else
                {
                    return "Outbound EMPTY";
                }
            }</log-to-eventhub>
        </outbound>
        <on-error>
            <base />
        </on-error>
    </policies>

The logger-id property contains the name of a logger we’re creating in the APIM. We can do that either using Azure’s management REST API or PowerShell. I went for the latter:

    $apimContext = New-AzApiManagementContext -ResourceGroupName "YOUR_RESOURCE_GROUP" -ServiceName "YOUR_APIM_NAME"
    New-AzApiManagementLogger -Context $apimContext -LoggerId "YOUR_NEW_LOGGER_NAME" -Name "YOUR_NEW_LOGGER_NAME" -ConnectionString "YOUR_EVENTHUB_CONN_STRING;EntityPath=YOUR_NEW_LOGGER_NAME"

With the above script, replacing the things in CAPITAL_LETTERS, you will have a new logger ready.

And what the policy does is save the request and response in a JSON format along with other data, like the date and time, caller IP, endpoint name, etc.
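To make the shape of these log entries concrete, here is a minimal Python sketch that mirrors the fields written by the policy above. It isn't APIM code: `build_log_record` is a hypothetical helper, and it uses `json.dumps` rather than the policy's `string.Format`, on the assumption that bodies containing quotes or line breaks should still produce a parseable entry.

```python
import json
from datetime import datetime, timezone


def build_log_record(direction, service_name, request_id, ip_address,
                     operation_name, body):
    """Return one log entry as a JSON string with the same fields as the policy."""
    record = {
        "Direction": direction,
        "DateTime": datetime.now(timezone.utc).isoformat(),
        "ServiceName": service_name,
        "RequestId": request_id,
        "IpAddress": ip_address,
        "OperationName": operation_name,
        # json.dumps escapes quotes and newlines inside the body for us.
        "Body": body,
    }
    return json.dumps(record)


if __name__ == "__main__":
    # Illustrative values only; none of them come from a real APIM instance.
    print(build_log_record("Inbound", "my-apim", "req-1", "203.0.113.10",
                           "add", '{"a": 9, "b": 4}'))
```

The point of serializing the whole record, instead of formatting a string by hand, is that a body with a single quote in it can't break the surrounding structure.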

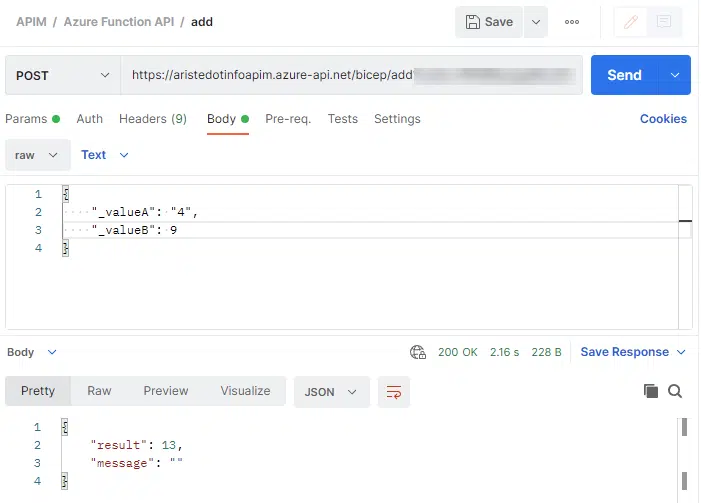

And now, using Postman, we can call our API. I’ll be calling the “add” operation:

OK, so 9 plus 4 makes 13. It looks like the API works.
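The same call can be scripted instead of sent from Postman. The sketch below only builds the request and doesn't send it. The base URL, the `a` and `b` query parameters, and the key are placeholders and assumptions, since the API's exact contract isn't shown here; `Ocp-Apim-Subscription-Key` is the default header name API Management uses for subscription keys.

```python
import urllib.parse
import urllib.request


def build_add_request(base_url, subscription_key, a, b):
    """Build (but don't send) a GET request for a hypothetical 'add' operation."""
    query = urllib.parse.urlencode({"a": a, "b": b})
    request = urllib.request.Request(f"{base_url}/add?{query}", method="GET")
    # Default header name APIM uses for subscription keys.
    request.add_header("Ocp-Apim-Subscription-Key", subscription_key)
    return request


if __name__ == "__main__":
    req = build_add_request("https://YOUR_APIM_NAME.azure-api.net/calculator",
                            "YOUR_SUBSCRIPTION_KEY", 9, 4)
    print(req.get_method(), req.full_url)
    # To actually send it: urllib.request.urlopen(req).read()
```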

Logging (the not-so-good way)

UPDATE: read the next section to see an alternative and easier way of logging the requests.

Now we’re on the logging side. When any of the APIM’s operations is called, it’s logged to the Event Hub, and thanks to a Logic App these events get into Log Analytics, where we can retrieve the data using either Log Analytics or App Insights.

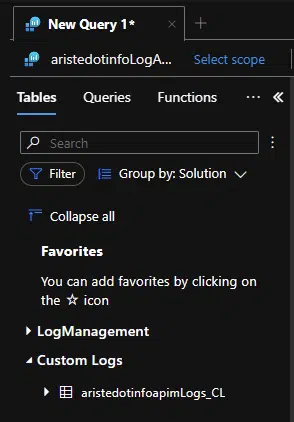

I’ll do it in Log Analytics, and after a few minutes we will see a new custom log:

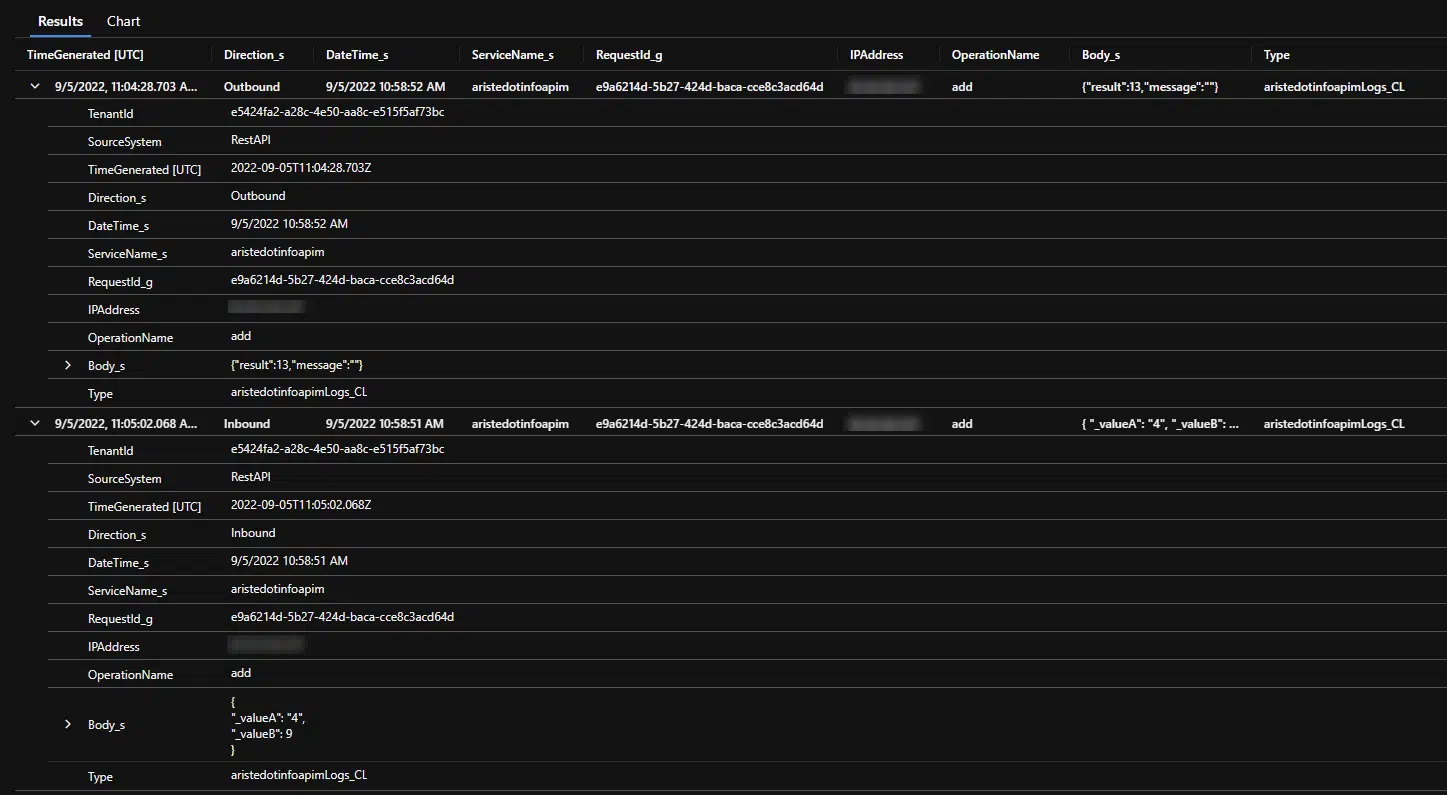

And a few minutes after the requests, we will see what we’ve sent there:

And now we have the details of who called, when, which endpoint, what was sent, and the response the backend returned. And if there are issues, at least we can see which body was sent, what error the backend returned, etc.
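Once the entries are exported, matching each request with its response is mostly a matter of grouping by `RequestId`. This is a rough sketch of that idea, assuming each entry is available as a valid JSON string with the `Direction` and `RequestId` fields from the policy; `pair_by_request_id` and the sample data are made up for illustration.

```python
import json
from collections import defaultdict


def pair_by_request_id(raw_records):
    """Group JSON log entries into {RequestId: {"Inbound": ..., "Outbound": ...}}."""
    calls = defaultdict(dict)
    for raw in raw_records:
        record = json.loads(raw)
        calls[record["RequestId"]][record["Direction"]] = record
    return dict(calls)


if __name__ == "__main__":
    # Made-up sample entries using the field names from the policy.
    sample = [
        json.dumps({"Direction": "Inbound", "RequestId": "r1",
                    "OperationName": "add", "Body": '{"a": 9, "b": 4}'}),
        json.dumps({"Direction": "Outbound", "RequestId": "r1",
                    "OperationName": "add", "Body": "13"}),
    ]
    for request_id, call in pair_by_request_id(sample).items():
        print(request_id, call["Inbound"]["Body"], "->", call["Outbound"]["Body"])
```

A request that has an inbound entry but no outbound one is then easy to spot, which is often where troubleshooting starts.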

Logging (the good way)

Well, this is another benefit of sharing things: there’s always someone who knows better than you and can help. In this case it was Fabio Filardi (Mötz Jensen also pointed me towards this solution), who pointed me to this blog post about a feature in API Management that lets us send the request and response bodies directly to App Insights.

The fun thing is I did try this and never managed to see the bodies, so thanks a lot to Fabio for making me try again even when I told him I had already tried 😅!

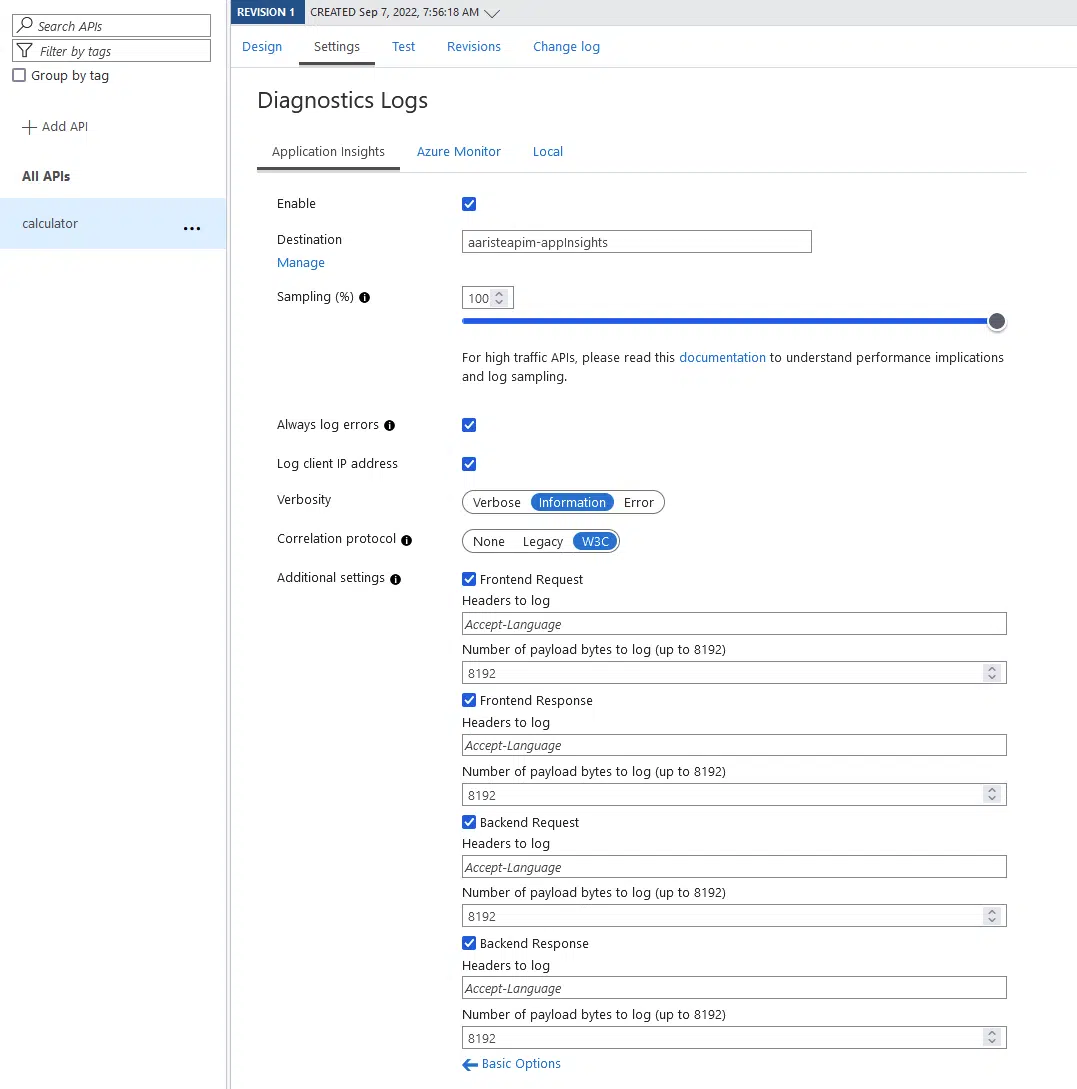

If we go to the “Settings” tab in our API, scroll down, and click on “Advanced settings”, we will see this:

In order for the APIM to log the request and response bodies, we need to change some default values.

First we need to change the correlation protocol to W3C, and after that we must check the checkboxes in Additional settings and enter 8192 in the “Number of payload bytes to log”.
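Because the limit is expressed in bytes, larger bodies won't show up in full. Here is a rough way to check whether a given payload fits, assuming the body is UTF-8 encoded and is simply cut at the limit (the exact truncation behaviour is an assumption, and `logged_portion` is a hypothetical helper):

```python
MAX_LOGGED_BYTES = 8192  # value entered in "Number of payload bytes to log"


def logged_portion(body, limit=MAX_LOGGED_BYTES):
    """Return (bytes_that_fit, was_truncated) for a UTF-8 encoded body."""
    encoded = body.encode("utf-8")
    return encoded[:limit], len(encoded) > limit


if __name__ == "__main__":
    kept, truncated = logged_portion('{"a": 9, "b": 4}')
    print(len(kept), "bytes kept, truncated:", truncated)
```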

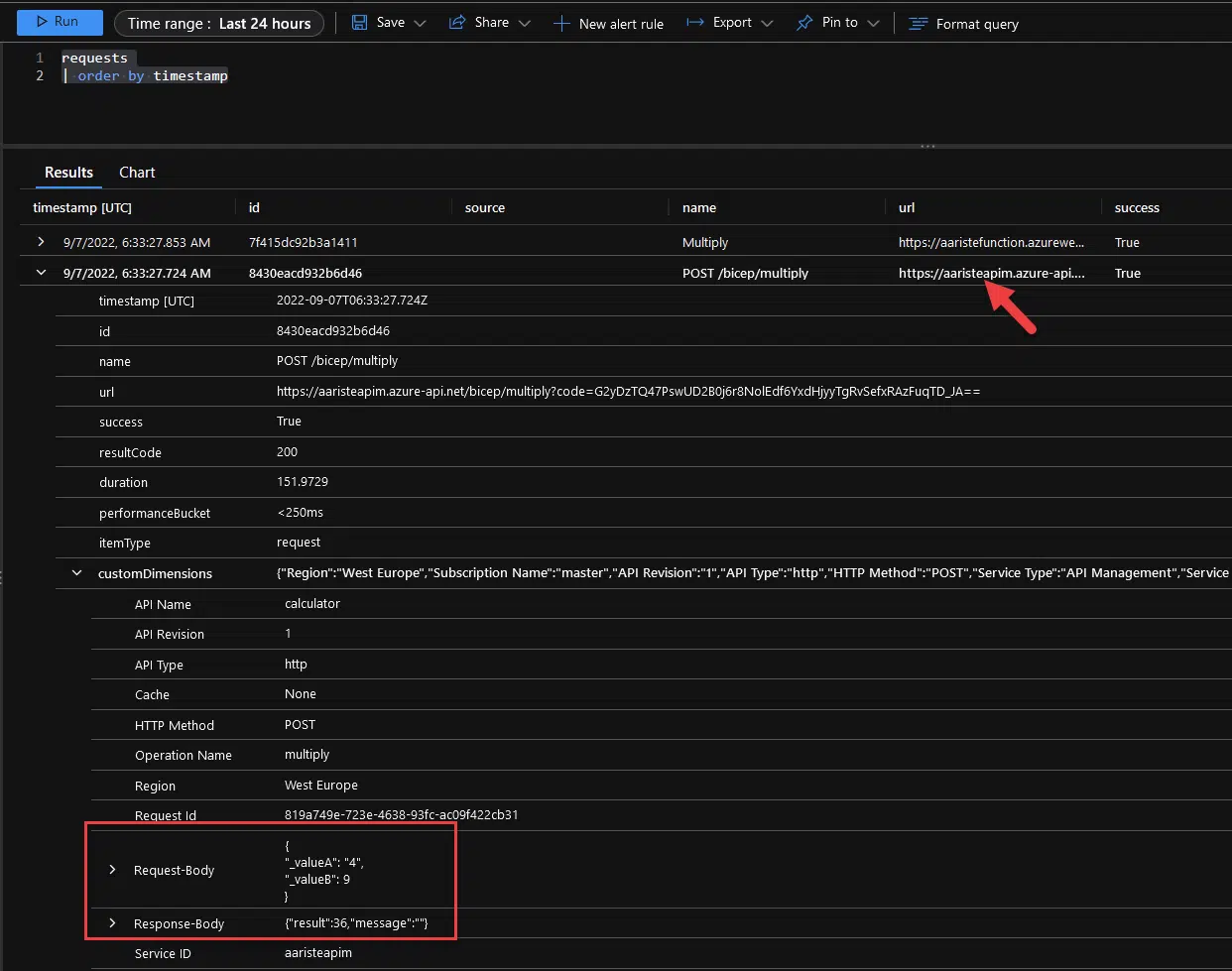

After this is saved, we can send a request to our API and, after some minutes, open Logs in Application Insights and list the requests, ordered by timestamp for our convenience, with this query:

    requests
    | order by timestamp

We will see a list of requests: the top one is the request made to the Azure Function (which is our backend in this case), and the one below it is the request to the APIM. Expand it and you’ll see the bodies:

And just by ticking four checkboxes, we can spare the cost of the Event Hub and the Logic App!

The Azure resources

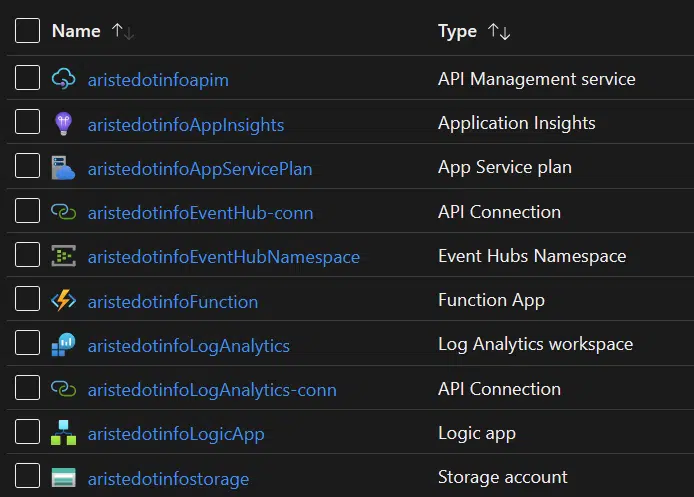

And this is possible thanks to Azure and these resources:

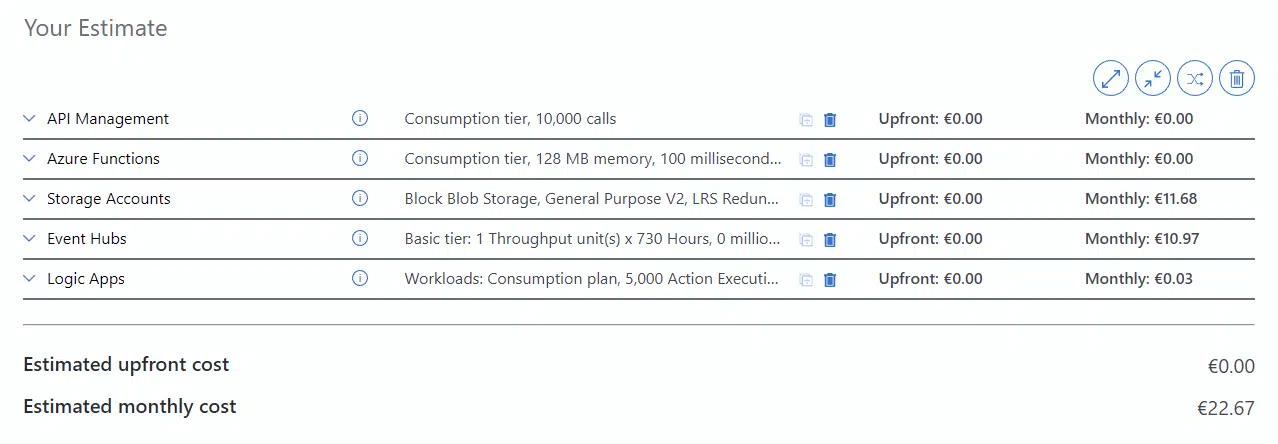

And as I said at the beginning, the cost of all this shouldn’t be very high. Let’s go to the Azure Pricing Calculator and check it.

And, even though we’re missing the App Insights and Log Analytics resources, which are not in the calculator, the monthly cost in euros for the West Europe region is €22.67, which should be perfectly justifiable in an ERP implementation project.

UPDATE: if you’re going for the nice logging solution, not using Event Hub and the Logic App, the price goes down to €11.67.

This price can go up depending on the resources consumed, but I’ve done the numbers using the basic tiers for everything, and you can see the most expensive elements are the Event Hub and Storage, to which I’ve assigned the out-of-the-box values from the calculator.

How do I deploy all of these!?

That’s a good question, because I’ve just explained what’s in the solution I propose and more or less showed how it works and how it can help us.

But deploying all of these by hand each time we need it can be time-consuming. And in the next post we’ll see how to solve this issue and deploy all the resources with a few clicks.