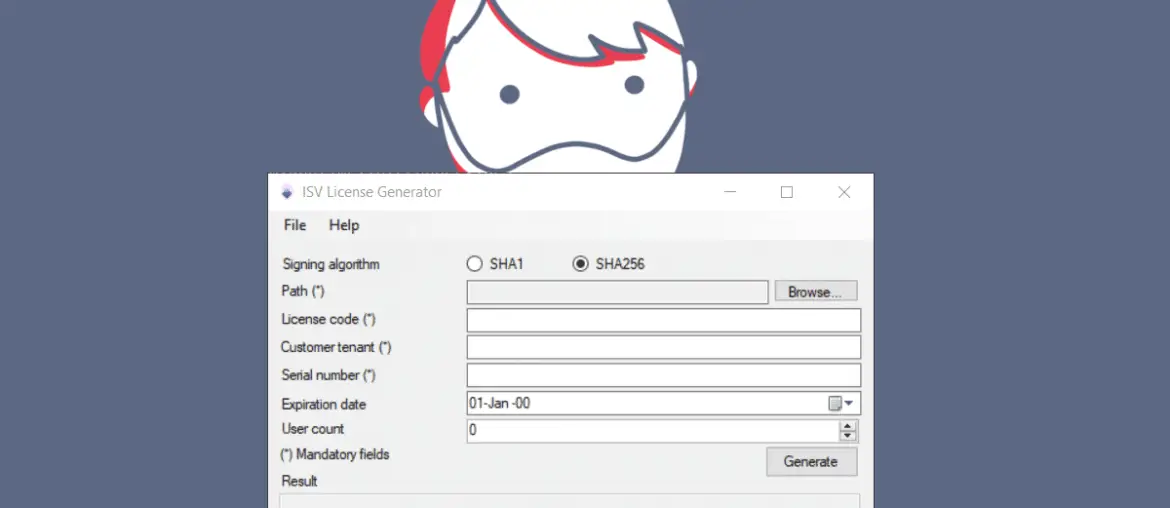

Yesterday I released a new version of ISV License Generator with full support for the SHA-256 hash function, after fixing an issue that affected it.

What’s new in ISV License Generator?

In this new version, support for generating Dynamics 365 Finance and Operations licenses with the SHA-1 hash has been removed. The tool now uses SHA-256 by default, as the AXUtil tool does.