The new LCS DB API endpoint to create a database export has been published! With it we now have a way of automating and scheduling a database refresh from your Dynamics 365 FnO production environment to a developer or Tier 1 VM.

You can learn more about the LCS DB REST API by reading these posts I wrote some time ago. You might want to read them because I’m skipping some steps which are already explained there:

You can also read the full guide on MSDyn365FO & Azure DevOps ALM.

And remember: this is currently in private preview. If you want to join the preview you first need to be part of the Dynamics 365 Insider Program where you can join the “Dynamics 365 for Finance and Operations Insider Community“. Once invited to the Yammer organization you can ask to join the “Self-Service Database Movement / DataALM” group where you’ll get the information to add yourself to the preview and enable it on LCS.

The bacpac issue

One of the main setbacks we currently have with prod DB refreshes is that it’s not a quick thing to do because you need to:

- Refresh a Tier 2+ environment with prod’s DB

- Export a bacpac from the Tier 2+ environment

- Restore the bacpac on a Tier 1 VM.

This happens because Tier 2+ environments use Azure SQL as the DB engine and Tier 1 VMs use SQL Server.

The time it takes to complete the process depends on the size of the database and the performance of the VM you’ll restore it to. But it’s not a fast process at all. For a 60GB database you’ll get a bacpac around 7GB that will take:

- 1 to 2 hours to refresh to UAT

- 2 to 4 hours for the bacpac to be exported

- At least 4 hours to restore it to a Tier 1 VM.

That’s between 7 and 11 hours until you have the DB on a developer machine. Once it’s there you can quickly get a BAK and share it. But you might need the time of a full working day to have that data available.

Save us LCS DB API!

Thanks to the new LCS DB API’s endpoint we can perform all these steps automatically, and with the help of d365fo.tools it’ll be even easier. But first…

Due to the extensive time it takes to complete all the process, we first have to decide a schedule (daily, weekly, etc.) and then this schedule must be compatible with the release cadence to UAT/Prod, because only one operation at a time can be done.

There’s still another problem but I’ll talk about it after seeing the scripts.

My proposal

To do the last part of the LCS DB API flow from prod to dev, we need a Tier 1 VM where the bacpac will be restored. My idea is using the build VM on Microsoft’s subscription and an Azure DevOps pipeline to run all the scripts that will restore the DB in that VM. It’s an underused machine and it fits perfectly to this purpose.

UPDATE: after this comment on Twitter by Volker Deuss I want to clarify why I’ve thought about doing this using the build VM. In most cases this VM will be doing nothing during the night, maybe only running some tests, and it’s during that period of time when I suggest doing all this. But be aware that depending on your DB size this won’t be possible or you’ll run out of space after 2 o 3 restores.

So think about deploying an extra VM and install an agent there to do this, whatever you do don’t mess with the build VM if you don’t know what you’re doing! Try this on a dev VM or anywhere else if you’re afraid of breaking something. Remember you’ll lose the capacity to generate DPs and run pipelines if this environments breaks!

This post is just an example of a possible solution, you need to decide what suits you best! End of the update.

As I said before I’ll be using Mötz Jensen’s d365fo.tools, we could do everything without them but that would be a bit stupid because using the tools is easier, faster and makes everything clearer.

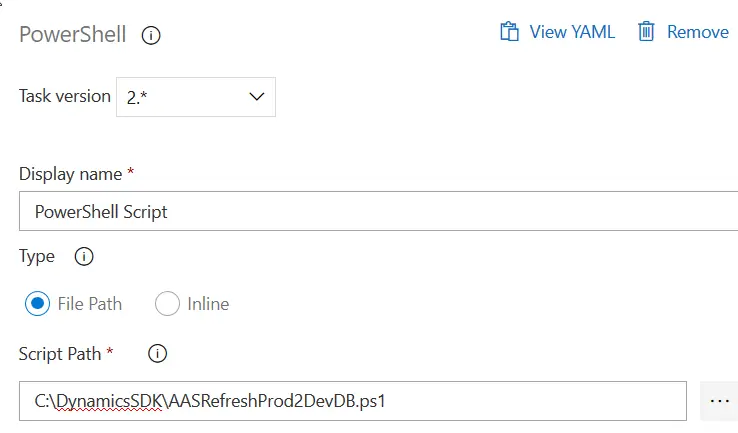

I’ve separated all the steps in 3 Powershell scripts: execute the refresh, export the bacpac and restore the bacpac.

Refresh database

This will refresh the prod environmnet to a Tier 2+:

$clientId = "ab12345-6220-4566-896a-19a4ad41783f"

$userName = "admin@tenant"

$passWord = "admin123456"

$projectId = "1234567"

$sourceEnvId = "958bc863-f089-4811-abbd-c1190917eaae"

$targetEnvId = "13aa6872-c13b-4ea3-81cd-2d26fa72ec5e"

Get-D365LcsApiToken -ClientId $clientId -Username $userName -Password $passWord -LcsApiUri "https://lcsapi.lcs.dynamics.com" -Verbose | Set-D365LcsApiConfig -ProjectId $projectId

Invoke-D365LcsDatabaseRefresh -SourceEnvironmentId $sourceEnvId -TargetEnvironmentId $targetEnvId -SkipInitialStatusFetch

Export database

This part will trigger the bacpac export from the Tier 2+ environment which we’ve just refreshed:

$sourceEnvId = "958bc863-f089-4811-abbd-c1190917eaae"

$targetEnvId = "13aa6872-c13b-4ea3-81cd-2d26fa72ec5e"

Get-D365LcsApiConfig | Invoke-D365LcsApiRefreshToken | Set-D365LcsApiConfig

Invoke-D365LcsDatabaseExport -SourceEnvironmentId $targetEnvId -BackupName $bacpacNameRestore bacpac

And the final step will download the bacpac and restore it to a new database:

$currentDate = Get-Date -Format yyyymmdd

$bacpacName = "UAT{0}" -f $currentDate

$downloadPath = "D:\UAT{0}.bacpac" -f $currentDate

$newDBName = "AxDB_{0}" -f $currentDate

Get-D365LcsApiConfig | Invoke-D365LcsApiRefreshToken | Set-D365LcsApiConfig

$backups = Get-D365LcsDatabaseBackups

$fileLocation = $backups[0].FileLocation

Invoke-D365AzCopyTransfer -SourceUri $fileLocation -DestinationUri $downloadPath

Import-D365Bacpac -ImportModeTier1 -BacpacFile $downloadPath -NewDatabaseName $newDBNameUsing it in an Azure DevOps pipeline

This is it. Create a Powershell script, place it in the Build VM and call it in your pipeline. This is only valid for the agent hosted in the build VM. Everything can probably be run in an Azure hosted agent, but I’ll not cover it here because I think that using the build VM, where we can restore the DB, is more useful to us.

Timing

These 3 scripts will call the LCS DB API to refresh, export and restore the DB. But there’s the timing issue.

Refreshing the database takes some time and exporting it too. You need to find a way to control the status of the operations. The LCS DB API offers an operation you can use to get the status of the ongoing operation. Using d365fo.tools:

Get-D365LcsDatabaseRefreshStatus -OperationActivityId 123456789 -EnvironmentId "99ac6587-c13b-4ea3-81cd-2d26fa72ec5e"You can choose to control that inside your Powershell scripts, but if we use the agent on the build VM that means we cannot use it for anything else until everything is done.

That’s why I separated the process in 3 steps. You can manually schedule 3 pipelines, one for each step at the times you know each stage ends. Then you can choose the order: export, restore, refresh or refresh, export, restore.

You could also use Windows Task Scheduler and forget about AZDO Pipelines, but we’re not doing that because we love pipelines.

And that’s all, we finally have a way of moving data without having to do it manually, we can schedule it, but we need to take some decisions on how we’ll do things. And I’ll leave that up to you 🙂

31 Comments

I’m so grateful that you took the time to write a blog post where you share all the steps needed to fully automate the PROD data refresh down to a Tier1 environment.

On top of that, I’m very pleased that you actually are using my tools to complete the work 🤘

They really help, installing them it’s one of the first things I do when deploying new VMs. The life of a 365 developer is complicated enough not to use them xD

Thanks for the wonderful post! I’m in the process of setting up the automation for database movement and have a few questions.

1. Dumb question but I’m assuming I can run all three in one PS1 script like you have there and that each step will start when the previous one ends.

2. I like the idea of splitting them up. You mention to run them when “YOU KNOW each stage ends”. I see where I can have different PowerShell tasks and have them run in an order on the condition the previous one finishes? Is that what you are referring to?

3. How would you handle getting multiple Tier 1 DEV VMs updated in parallel? With this setup, I guess I could install agents on each DEV VM and then setup download/import pipelines for each (pointed to their respective Agents) and figure out how to put a pipeline completion trigger to start them after my main Export pipeline completes?

Hope that makes sense.

Thanks again,

Ford

Hi Ford,

Thank you, I’m glad you liked the post!

Let me answer points 1 and 2 together. You cannot run the 3 scripts in a single powershell script because the refresh and export steps are just calling the API without awaiting a result. That’s why I wrote the “knowing when each step ends” part, you need to wait until the refresh operation is done to call the export one. There’s an operation in the API to get the status of an operation which you can call with the d365fo.tools cmdlet Get-D365LcsDatabaseRefreshStatus. I think you could call it every X minutes to check when it’s done and then call the export operation, this should be easy to be done on PowerShell but the pipeline might timeout.

The idea was having 3 pipelines with 3 different schedules, knowing step 1 takes 1h, I’d schedule step 2 1h 30m after step 1 and so on.

Regarding step 3, I wouldn’t refresh all the dev VMs using the bacpac because it takes so much time. I’d prefer restoring just one, extracting a BAK and use it to refresh the data on other VMs. But of course you could install the agent on as many VMs you want and do what you say, one pipeline at a time, without any issue. But again you would need to control when the export finishes with the operation status endpoint.

Regards!

Thank you for your quick response. I had not had my first cup of coffee when asking the first post :). You are absolutely correct on pushing the BAK file around instead of bacpac. It will be much faster. I imagine I could kick off another process when your bacpac gets imported to then execute a SQL backup, upload that bak file to an Azure Storage, then have each DEV VM download / restore it……

Thanks for the help! Brain is spinning now..

Ford

You can even restore the bak from the storage account!

Thanks for your help Adria! I finally go an end to end (PROD –> Tier2+ –> LCS –> Tier1 –> bak in Azure Storage) to complete with one mouse click (using a DevOps Pipeline) in 3.5 hours… Once I clean it all up and figure out how to put the sensitive stuff in an Azure Key Vault, I’ll publish my findings and make sure to give you your due credit!

You’re welcome! Thank you, I’ve seen in Yammer you managed all the automation in the pipeline 🙂 I wrote a blog post about using Azure Key Vault in the pipelines, maybe it helps!

Good afternoon,

Well, I was able to go end to end (PROD –> Tier 2 –>LCS –> Tier 1 –> BAK of PROD in Azure Blob –> DEV Tier 1 VM and restore PROD data to it. Thank you again for your help. I am in the process of cleaning it up and decided to REALLY clean it up and put all my variables into an Azure Key Vault. I used your blog to help get it created. I have one question, I have been using PS1 files in my DevOps Pipeline up till now but it seems that the KeyVault stuff does not like the $(ClientID) type variable when it is in an actual PS1 file. I’ve copied the text out of the file and put it “Inline” and it seems to work. Have you tried running the same command (get-D365LcsApiToken) using the Key Vault variables from within an actual PS1 file in a Pipeline? It basically says that “clientId : The term ‘clientId’ is not recognized as the name of a cmdlet, function, script file, or operable program.”

Hi Ford,

I just have used the Key Vault in inline scripts, not in PS1 ones sorry. I really suck at Power Shell, have you tried using the variables without the ( )?

Hi,

[Invoke-Process] Exit code from AzCopy.exe indicated an error happened. Will output both standard stream and error stream.

[Invoke-Process] Standard output was: \r\n

[Invoke-Process] Error output was: \r\n [2021/04/12 16:07:37][ERROR] The syntax of the command is incorrect. Error parsing the argument “copy”: parameter name is required.

WARNING: [16:07:37][Invoke-Process] Stopping because of Exit Code. | Stopping because an Exit Code from AzCopy.exe wasn’t 0 (zero) like expected.

I am getting the error when running the 3rd PowerShell script. Why this is happening?

Thankyou!

Are d365fo.tools installed on the build VM? Is AzCopy installed? If it isn’t install it using the invoke-d365installazcopy cmdlet. Also you can try running that step manually inside the VM with -Verbose to see more detail.

When tried to export DB from Tire2 to LCS I get 500 error (Error status code InternalServerError in starting a new database export in LCS. Internal Server Error. | The remote server returned an error: (500) Internal Server Error.

WARNING: [08:35:16][Start-LcsDatabaseExportV2] Stopping because of errors).

I used below code for it :

$clientId = “######”

$clientSecret = “##########”

$userName = “########”

$passWord = “#####”

$projectId = “######”

$sourceEnvIdUAT = “586682e0-##### b4e9b07e”

$targetEnvIdSIT = “65a32f94-######### f-ea413679ba91”

$LCSUri = “https://lcsapi.lcs.dynamics.com”

$currentDate = Get-Date -Format yyyymmdd

$bacpacName = “TestPowerShellBackup”

$tokenUrl = “https://login.microsoftonline.com/common/oauth2/token”

$tokenBody = @{

grant_type = “password”

client_id = $clientId

client_secret = $clientSecret

resource = $LCSUri

username = $userName

password = $passWord

}

$tokenResponse = Invoke-RestMethod -Method ‘POST’ -Uri $tokenUrl -Body $tokenBody

$token = $tokenResponse.access_token

Write-Host “##vso[task.setvariable variable=TOKEN] $token”

Invoke-D365LcsDatabaseExport -SourceEnvironmentId “586682e0-59c7-442b-ae75-cf72b4e9b07e” -BearerToken $token -BackupName $bacpacName -LcsApiUri “https://lcsapi.lcs.dynamics.com” -Verbose | Set-D365LcsApiConfig -ProjectId $projectId

Hi Manish,

have you checked if the environment is performing another operation when the script is run?

Thanks a lot.. This helped me on the way.. I ran into one confusing problem.. When -Format is set to yyyymmdd it seems to be using minutes instead of month.. I got it right by using capital MM instead..

$currentDate = Get-Date -Format yyyyMMdd

instead of

$currentDate = Get-Date -Format yyyymmdd

Have a nice day everyone..

Hi Adrià,

Thank you for the post. Generally, here is the process we follow:

1. Refresh from PROD -> UAT.

2. Run SQL scripts in UAT.

3. Export database from UAT.

4. Import database into Tier 1 Environment.

Do you have anything for step 2 here?

Thank you.

Hello Mohammed,

there’s currently no way of enabling access to a Tier2+ environment and getting the JIT credentials at the moment. The easiest way of doing it would be creating a class that does all that the SQL script would do, and call it using d365fo.tools cmdlet Invoke-d365sysrunnerclass in a pipeline. With all the changecompany needed, enabling users, changing web services URLs, etc… in this class. You can even block the execution of this class in the prod environment, for example using the URLUtility::getUrl() method and comparing it with the one of the production environment, and throwing an error if it is.

This requires a lot of hard-coding values, but it’s for a good purpose! Hope it helps!

Regards!

Makes sense. Thank you!

Hi Sir,

I need your help we need to restore one bacpac file to a tier 1 environment the bacpac is on my local machine but didn’t find any thing to describe tier1 environment on our code , Can you pls help us and guide how we can achieve the same however we tried to follow your restore bacpac script we found an error and unfortunately we couldn’t execute it.

Thanks in advance.

Hi Syed,

if you only want to import a bacpac into a dev environment check this link: https://learn.microsoft.com/en-us/dynamics365/fin-ops-core/dev-itpro/database/dbmovement-scenario-exportuat#import-the-database

Hi

I’m running the Refresh Database and getting this error, any idea why?

[09:16:41][] Unable to locate the %ServiceDrive% environment variable. It could indicate that the machine is either not configured with D365FO or that you have domain joined a local Tier1. We have defaulted to c:\

2023-10-20T09:16:41.1837376Z [09:16:41][] This message will show every time you load the module. If you want to silence this message, please add the ServiceDrive environment variable by executing this command (remember to restart the console afterwards):

2023-10-20T09:16:41.1922961Z [Environment]::SetEnvironmentVariable(“ServiceDrive”, “C:”, “Machine”)

2023-10-20T09:16:41.9762091Z [09:16:41][Invoke-Authorization] Something went wrong while working against Azure Active Directory (AAD) | The remote server returned an error: (400) Bad Request.

2023-10-20T09:16:42.0598691Z WARNING: [09:16:42][Invoke-Authorization] Stopping because of errors

2023-10-20T09:16:42.0725715Z VERBOSE: [09:16:42][Get-D365LcsApiToken] Total time spent inside the function was 00:00:00.5333386

2023-10-20T09:16:42.1797300Z Invoke-D365LcsDatabaseRefresh : Cannot bind argument to parameter ‘LcsApiUri’ because it is an empty string.

Hi Carsten,

make sure the LcsApiUrl parameter has a value or that it’s a correct one (if you’re using any of the regional LCS instances).

I have the same problem. Yes, I have a value on LcsApiUrl.

The thing is that it works when I run the script locally, but not in a pipeline (Azure Devops, windows-2019 agent).

Any suggestions?

Which error is the pipeline returning?

[Invoke-Authorization] Something went wrong while working against Azure Active Directory (AAD) | The remote server returned an error: (400) Bad Request.

Have you checked if the LCS endpoint is the right one for your region and LCS project?

Hi Sir,

Iam also facing the same error, any idea on this issue.

[Invoke-Authorization] Something went wrong while working against Azure Active Directory (AAD) | The remote server returned an error: (401) Unauthorized.

2023-12-30T03:03:01.3570163Z WARNING: [03:03:01][Invoke-Authorization] Stopping because of errors

2023-12-30T03:03:01.3710758Z VERBOSE: [03:03:01][Get-D365LcsApiToken] Total time spent inside the function was 00:00:00.5161573

2023-12-30T03:03:01.4887418Z Invoke-D365LcsDatabaseRefresh : Cannot bind argument to parameter ‘LcsApiUri’ because it is an empty string.

Is your LCS project in the US or any other of the regional instances? Make sure if it’s on a region to use the right API URL.

LCS project is in US region only

I am getting the same error as Samhitha while performing the Refresh. Could you please share the fix if it was resolved.

Double check if you’re using one of the regional LCS, and also check that the app registration has the Allow public client flows option enabled under the “Authentication” tab.