Intro

X++ developers have been working without a version-control system for most of our careers. We had MorphX VCS for AX 2009 and the option to use TFVC in AX 2009 and AX 2012, but it wasn’t mandatory. Actually, in my experience, most projects used no VCS other than comments in the code. I’m not saying all of them, but in 10 years I’ve seen only one AX 2009 project using it.

If we told this to a non-X++ software developer, they would think we’re nuts, and probably foolish, for not using a VCS. Who would risk losing their ongoing work due to a stupid mistake? Because that’s what we were doing. Do you know someone who lost a whole day’s work after pressing the wrong button? I’m sure you do. I do!

One of the major changes we got with Finance and Operations has been the mandatory use of a version-control system.

What follows is the product of several blog posts written over more than a year at ariste.info. I’ve rewritten the content to adapt it to the changes and new features we’ve gotten in Azure DevOps for Microsoft Dynamics 365 FnO, and tried to reorder it to make it easier to read.

I will also try to keep this document updated if something changes, but I can’t guarantee that. If you find an error, you can contact me at adria (at) ariste (dot) info.

I hope this guide helps all the developers out there. We need a strong developer community that can use these tools correctly to make our Dynamics 365 projects successful.

Dynamics 365 for Finance & Operations and Azure DevOps

Package and model planning

Creating a package or a model is one of the first things we’ll do when starting to code in a new project.

It’s something very basic, but I’m still seeing some issues around this, bad practices that can lead to problems in the future.

Packages or models?

Is a package something different from a model? Well, more or less. The difference is conceptual more than anything else.

A package is a group of one or more models. And models are the substructures of packages. So if you have a package with a single model, that model is also a package. The main point here is that packages can be… packaged and distributed to other environments.

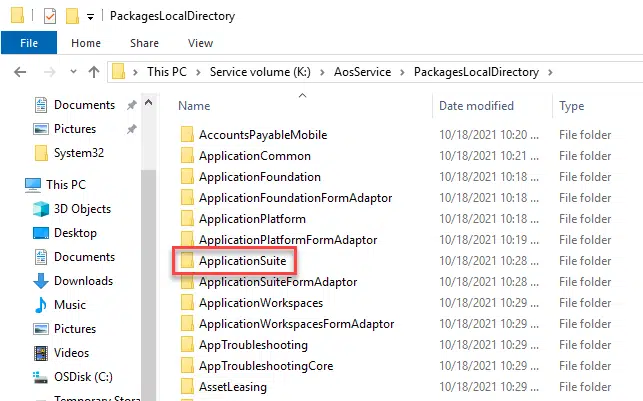

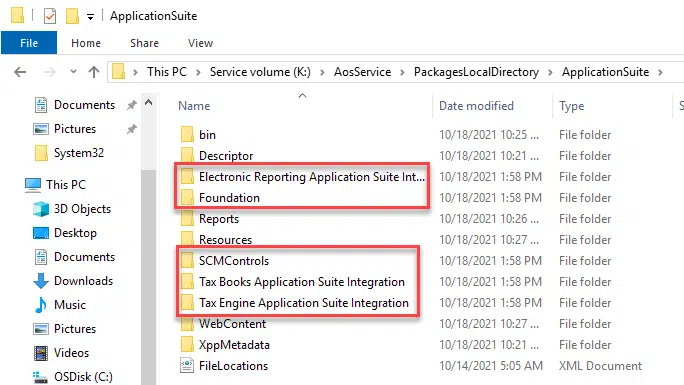

To be clearer: everything that’s inside K:\AosService\PackagesLocalDirectory is a package. For example, ApplicationSuite is a package:

And inside this package there are several models:

And after this short explanation, let me get to the important part of this post.

How should we plan around packages and models?

Let me start with this, and let me write it in capital letters, and in bold, and in color red: DO NOT CREATE A PACKAGE FOR EACH DEVELOPMENT YOU’RE DOING.

We need to think about packages as a container for our work, and models as a way of organizing objects inside packages. So create a package for your org, and inside it create as many models as you need, for example a model for each Dynamics 365 module.

And of course you can just put all of your customizations inside a single package with only one model. These two options should be the way to go.

But we will never, in any case, create a package for each development we need to do. And I’ve seen it! More than once! Why shouldn’t we do this? Because that’s a recipe for disaster, and a future first-class ticket right into hell. Let’s see some reasons why you shouldn’t do this.

You’ll hate the Azure-hosted pipelines

It’s not the most important, but it’s the first one that always comes to my mind. Imagine you have 20 packages for 20 different developments.

When you create the solution that will be used in your Azure-hosted pipelines to build your code, you’ll need to create a solution with 20 projects in it! Oh, of course it’s only 20 projects, but what if it’s over 100?

And what about the maintenance of that solution? You’ll be updating it for each new development you do! And then you’ll need to create branches with versions of that solution, to be able to run pipelines for each branch, until you move all your changes to the branch that goes to prod or to a sandbox environment!

Circular references

This is really the main reason we shouldn’t be creating a package per development.

Imagine we create ModelA, that contains a table called TableA. Then we create the ModelB and add a reference to ModelA because we want to use the TableA in a class that will run in a batch. No problem! Done!

Then we create an EDT in ModelB, and someone needs to use it in ModelA. Now we cannot add a reference to ModelB because there’s already a reference to ModelA in ModelB, and that would be a circular reference.

With just two models this is easy to sort out. If you have 50, you’ll want to die.
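To make the problem concrete, here is a minimal sketch (not a tool that ships with the product) that looks for a circular reference in a set of model references. The dictionary format and the `find_reference_cycle` name are assumptions made up for this example. In a real project you would have to read the references from your model descriptors first.

```python
from typing import Dict, List, Optional


def find_reference_cycle(references: Dict[str, List[str]]) -> Optional[List[str]]:
    """Return one reference cycle as a list of model names, or None.

    `references` maps each model to the models it references.
    """
    WHITE, GREY, BLACK = 0, 1, 2  # unvisited, on the current path, finished
    state = {model: WHITE for model in references}
    path: List[str] = []

    def visit(model: str) -> Optional[List[str]]:
        state[model] = GREY
        path.append(model)
        for target in references.get(model, []):
            if state.get(target, WHITE) == GREY:
                # target is already on the current path, so we closed a loop
                return path[path.index(target):] + [target]
            if state.get(target, WHITE) == WHITE:
                cycle = visit(target)
                if cycle:
                    return cycle
        path.pop()
        state[model] = BLACK
        return None

    for model in list(references):
        if state[model] == WHITE:
            cycle = visit(model)
            if cycle:
                return cycle
    return None


# ModelB references ModelA (fine); adding ModelA -> ModelB closes the loop.
print(find_reference_cycle({"ModelA": [], "ModelB": ["ModelA"]}))
print(find_reference_cycle({"ModelA": ["ModelB"], "ModelB": ["ModelA"]}))
```

With two models you can see the loop at a glance. The point of the sketch is that with dozens of packages the same check stops being something you can do in your head.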

I love extensions!

Sure you do! And if you do the one development, one package thing, you might end up with a million extensions of the same table or form. And when the time comes to modify a control you added… you’ll spend a nice day looking for it through all your lovely extensions!

Build times

This one’s maybe not important, but with so many models, building all your models in VS will take longer.

Your future you will hate you

Seriously, this might be even more important than the circular references one. If you chop your code up into many packages and models, and one day you realize what you did and want to move everything to a single package, there’s self-hate guaranteed.

Azure DevOps

Azure DevOps will be the service we will use for source control. Microsoft Dynamics 365 for Finance and Operations supports TFVC out of the box as its version-control system.

But Azure DevOps doesn’t only offer a source control tool. Of course, developers will benefit the most from using it, but from project management to the functional team and customers, everybody can be involved in using Azure DevOps. BPM synchronization and task creation, team planning, source control, and automated builds and releases are some of the tools it offers. All these changes will need some learning from the team, but even in the short term all of this will help to better manage implementations.

As I said, it looks like the technical team is the most affected by the addition of source control to Visual Studio, but it’s also the one that benefits the most…

First steps

To use all the features described in this guide we need to create an Azure DevOps project and connect it to LCS. This will be the first step and it’s mandatory so let’s see how we have to do everything.

Create an Azure DevOps organization

You might or might not have to do this. If you or your customer already have an account, you can use it and just create a new project in it. Otherwise head to https://dev.azure.com and create a new organization:

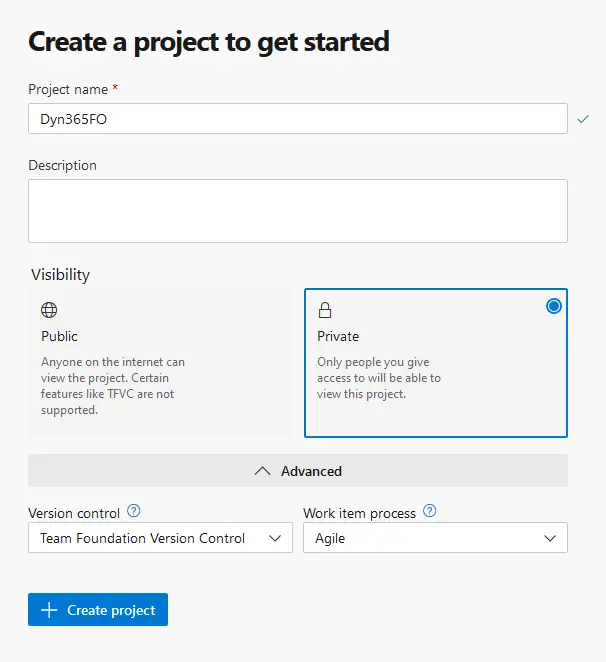

After creating it you need to create a new project with the following options:

Press the “Create project” button and you’re done. Now let’s connect this Azure DevOps project to our LCS project.

When a customer signs up for Finance and Operations, an LCS project of type “Implementation project” is created automatically. Your customers need to invite you to their project. If you’re an ISV you can use the “Migrate, create solutions, and learn” projects.

In either case you need to go to “Project settings” and select the “Visual Studio Team Services” tab. Scroll down and you should see two fields. Fill in the URL field with your DevOps URL without the project part. If you have a URL of the https://dev.azure.com/YOUR_ORG type, you need to change it to https://YOUR_ORG.visualstudio.com:
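The URL change described above is purely mechanical, so it can be sketched in a few lines. This is only an illustration of the rule in the text. The `to_legacy_devops_url` name is made up for the example, and it also drops the project part if you paste a full project URL.

```python
from urllib.parse import urlparse


def to_legacy_devops_url(url: str) -> str:
    """Turn https://dev.azure.com/ORG[/project] into https://ORG.visualstudio.com."""
    parsed = urlparse(url.strip())
    host = (parsed.hostname or "").lower()
    if host.endswith(".visualstudio.com"):
        # Already in the old format: just drop the project part
        return f"https://{host}"
    if host != "dev.azure.com":
        raise ValueError(f"Not an Azure DevOps URL: {url}")
    segments = [segment for segment in parsed.path.split("/") if segment]
    if not segments:
        raise ValueError("The URL does not contain an organization name")
    return f"https://{segments[0]}.visualstudio.com"


print(to_legacy_devops_url("https://dev.azure.com/YOUR_ORG"))
```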

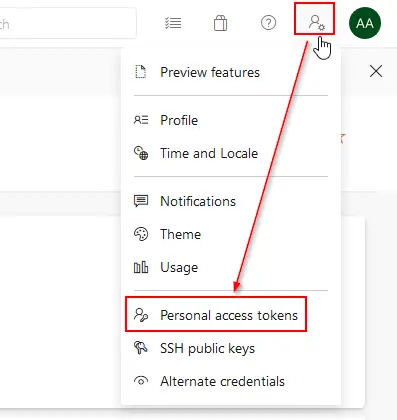

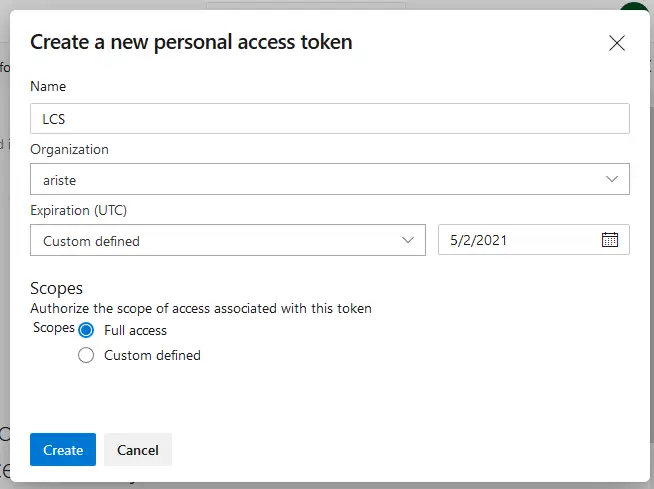

And to get the “Personal access token” we go back to our Azure DevOps project, click on the user settings icon, and then select “Personal access tokens”:

We add a new token, set its expiration and give it full access. Finally, press the “Create” button and a new dialog will appear with your token. Copy it and paste it in LCS.

Back to LCS, once you’ve pasted the token press the “Continue” button. On the next step just select your project, press “Continue” and finally “Save” on the last step.

If you have any problem you can take a look at the docs where everything is really well documented.

The build server

Once we’ve linked LCS and Azure DevOps we’ll have to deploy the build server. This will be the heart of our CI/CD processes.

Even though the build virtual machine has the same topology as a developer box, it really isn’t a developer VM and should never be used as one. It has Visual Studio installed, the AosService folder with all the standard packages, and SQL Server with an AxDB, just like any other developer machine, but that’s not its purpose.

We won’t be using any of those features. The “heart” of the build machine is the build agent, an application that Azure DevOps uses to execute the build definition’s tasks.

We can also use Azure hosted build agents. Azure hosted agents allow us to run a build without a VM, the pipeline runs on Azure. We’ll see this later.

The build VM

This VM is usually the dev box on Microsoft’s subscription but you can also use a regular cloud-hosted environment as a build VM.

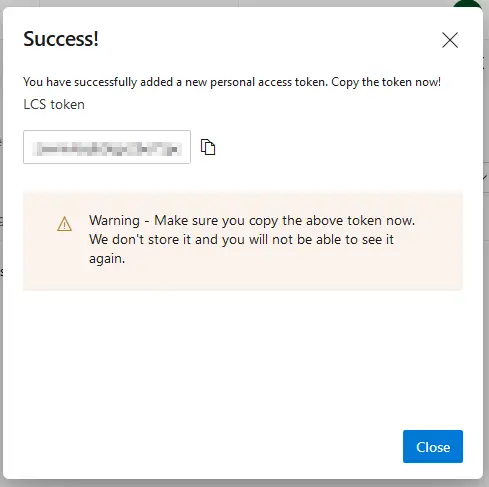

When this VM is deployed two things happen: the basic source code structure and the default build definition are created.

Visual Studio

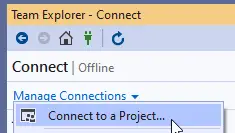

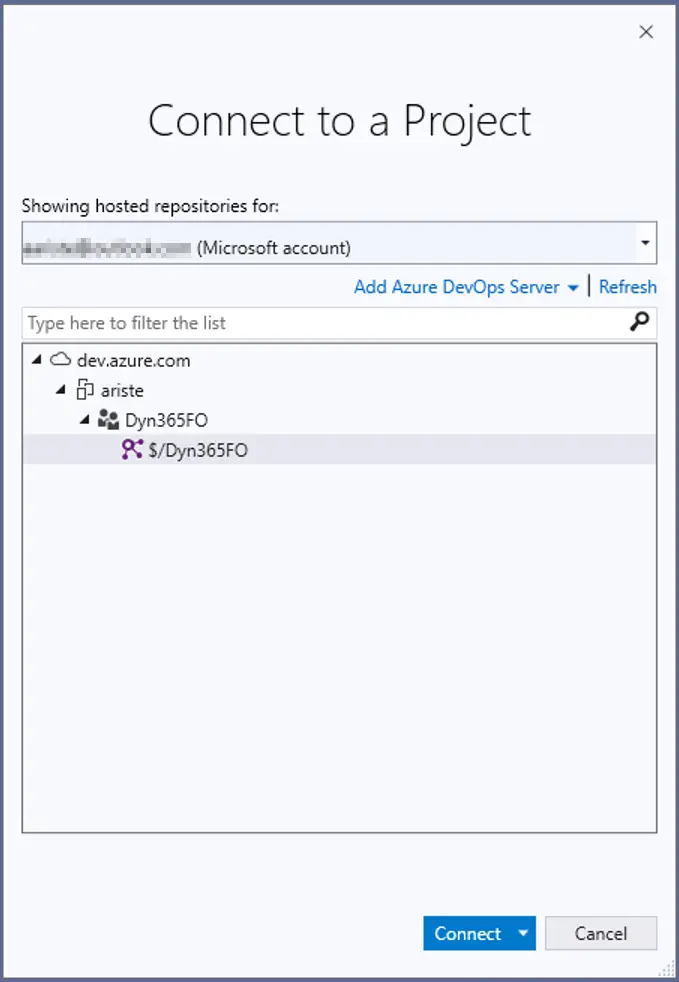

We have the basics to start working. Log into your dev VM and start Visual Studio. We must map the Main folder to the development machine’s packages folder. Open Team Explorer and select “Connect to a Project…”:

It will ask for your credentials and then show all projects available with the account you’ve used. Select the project we have created in the steps earlier and click on “Connect”:

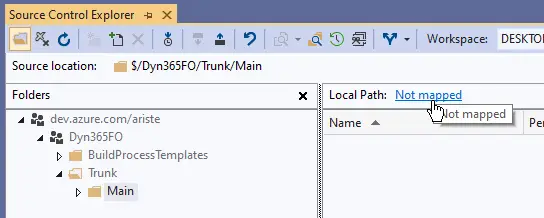

Now open the “Source Control Explorer”, select the Main folder and click on the “Not mapped” text:

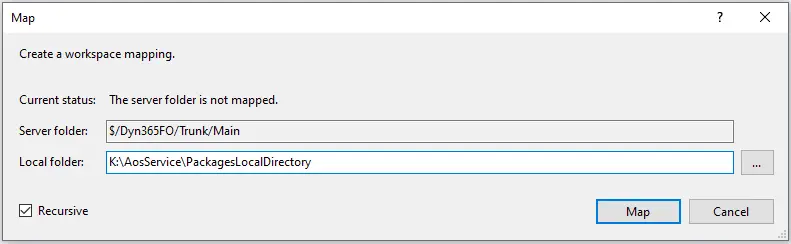

Map the Main folder to the K:\AosService\PackagesLocalDirectory folder on your service drive (this could be drive C if you’re using a local VM instead of a cloud-hosted environment):

What we’ve done in this step is tell Visual Studio that what’s in our Azure DevOps project, inside the Main folder, will go into the K:\AosService\PackagesLocalDirectory folder of our development VM.

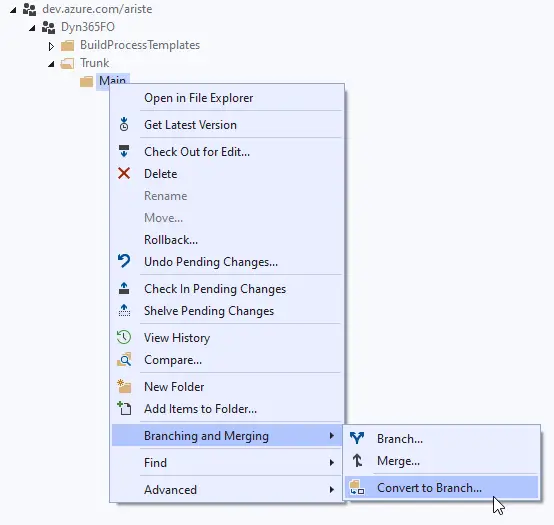

The Main folder we have in our source control tree is a regular folder, but we can convert it into a branch if we need it.

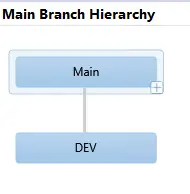

In the image above, you can see the icon for Main changes when it’s converted to a branch. Branches allow us to perform some actions that aren’t available to folders. Some differences can be seen in the context menu:

For instance, branches can display the hierarchy of all the project branches (in this case it’s only Main and Dev so it’s quite simple).

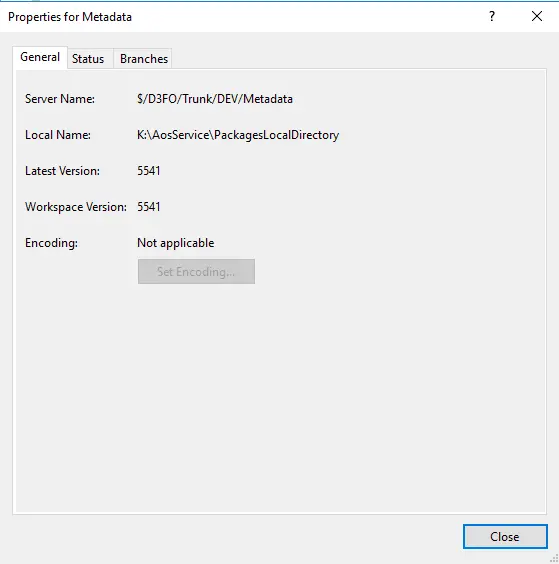

Properties dialogs are different too. The folder one:

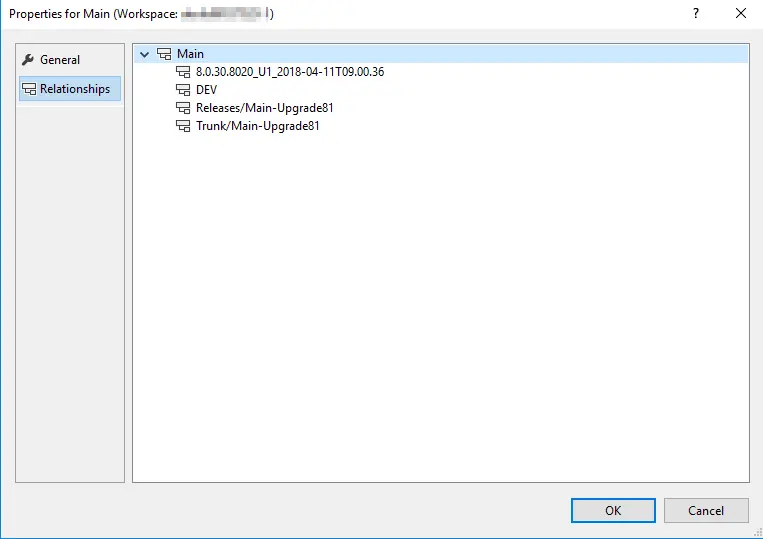

And the branch one, where we can see the different relationships between the other branches created from Main:

This might not be that interesting or useful, but one of the things we get by converting a folder into a branch is seeing where a changeset has been merged into.

Some advice

I strongly recommend moving the Projects folder out of the Main branch (or whatever you call it) into the root of the project, at the same level as BuildProcessTemplates and Trunk. In fact, and this is my personal preference, I would keep anything that’s not code outside of a branch. By doing this you only need to take care of the code when merging and branching.

Those of us who have been working with AX for several years were used to not using version-control systems. MSDyn365FO has taken us into uncharted territory, so it’s not uncommon for different teams to work in different ways, depending on their experience and what they’ve found along the way. Each team will need to invest some time to discover what works best for them regarding code, branching and methodologies. Many times this will be based on experimentation and trial and error, and with the pace of implementation projects trial and error can turn out badly.

Branching strategies

I want to make it clear in advance: I’m not an expert in managing code or Azure DevOps, at all. All that I’ve written here is the product of my experience (good and bad) of over 4 years working with Finance and Operations. In this article on branching strategies from the docs there’s more information regarding branching, and links to articles from the DevOps team. And there’s even more info in the DevOps Rangers’ Library of tooling and guidance solutions!

Main-Release

One possible strategy is using a Main and a Release branch. We have already learnt that the Main branch is created when the build VM is deployed. Usually, in an implementation project all development is done on that branch until the go-live, and just before that a new Release branch is created.

We will keep development work on the Main branch, and when that passes validation, we’ll move it to Release. This branching strategy is really simple and will keep us mostly worry-free.

Dev – Main – Release

This strategy is similar to the Main – Release one but includes a Dev branch for each developer. This Dev branch must be maintained by the developer using it. They can do as many check-ins as they want during a development and, when it’s done, merge all these changes to the Main branch in a single changeset. Of course, this adds some bureaucracy because we also need to forward integrate changes from Main into our Dev branch, but it will allow us to have a cleaner list of changesets when merging them from Main to the Release branch.

Whatever branching strategy you choose, try to avoid having changesets pending to be merged for a long time. In my experience, the number of merge conflicts that appear grows with the time the changeset has been waiting to be merged.

I wrote all of this based on my experience. It’s obviously not the same working for an ISV as for an implementation partner. An ISV has different needs: it must maintain different code versions to support all its customers, and it doesn’t necessarily need to work in a Main – Release manner. It could have one (or more) branches for each version. However, since the end of overlayering this is not necessary. More ideas about this can be found in the article linked at the beginning.

Azure Pipelines

Builds

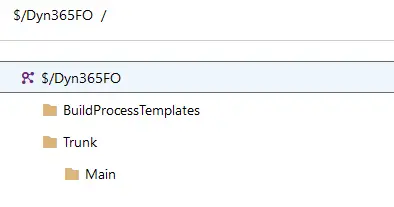

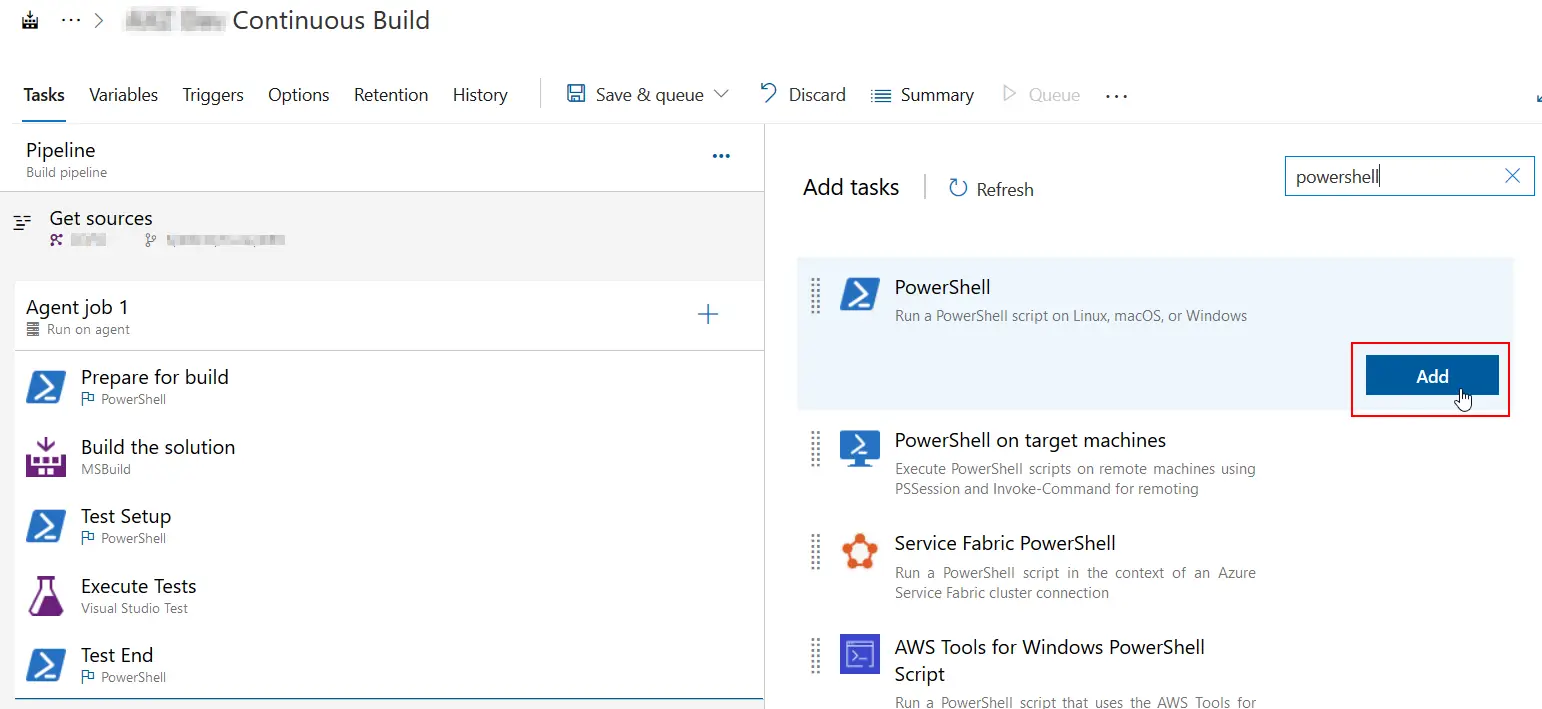

We’ve already seen that the default build definition has all the default steps active. We can disable (or remove) all the steps we’re not going to use. For example, the testing steps can be removed if we have no unit testing. We can also create new build definitions from scratch, however it’s easier to clone the default one and modify it to other branches or needs.

Since version 8.1 all the X++ hotfixes are gone, the updates are applied in a single deployable package as binaries. This implies that the source-controlled Metadata folder will only contain our custom packages and models, no standard packages anymore.

Continuous Integration

Continuous Integration (CI) is the process of automating the build and testing of code every time a team member commits changes to version control. (source)

Should your project/team use CI? Yes, yes, yes. This is one of the key features of using an automated build process.

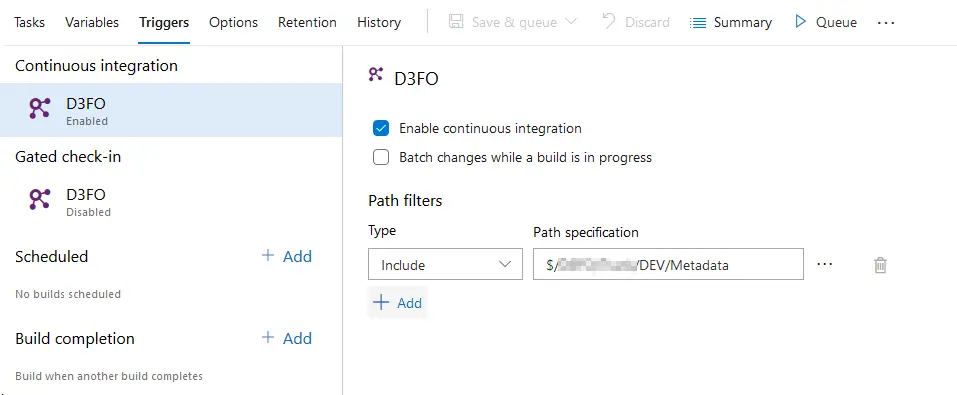

This is what a build definition for CI that will only compile our codebase looks like:

Only the prepare and build steps. Then we need to go to the “Triggers” tab and enable the CI option:

Right after each developer check-in, a build will be queued, and the code compiled. In case there’s a compilation error we’ll be notified about it. Of course, we all build the solutions before checking them in and don’t need this CI build. Right?

We all know that “slow and steady wins the race”, but at some point during a project that’s not possible, and this kind of build definition can help us out, especially when merging code between branches. It gives us much more confidence that a DP created to release to production will at least compile. I can tell you that having to do a release to prod in a hurry and seeing the Main build failing is not nice.

Gated check-ins

A gated check-in is a bit different from a CI build. The gated check-in will trigger an automated build BEFORE checking in the code. If it fails, the changeset is not checked in until the errors are fixed and it’s checked in again.

This option might seem perfect for the merge check-ins to the Main branch. I’ve found some issues trying to use it, for example:

- If multiple merges & check-ins from the same development are done and the first fails but the second doesn’t, you’ll still have pending merges to be done. You can try batching the builds, but I haven’t tried that.

- Issues with error notifications and pending code on dev VMs.

- If many check-ins are made, you’ll end up with lots of queued builds (and we only have one available agent per DevOps project). This can also be solved using the “Batch changes while a build is in progress” option.

I think the CI option is working perfectly to validate code. As I’ve already said several times, choose the strategy that better suits your team and your needs. Experiment with CI and Gated check-in builds and decide what is better for you.

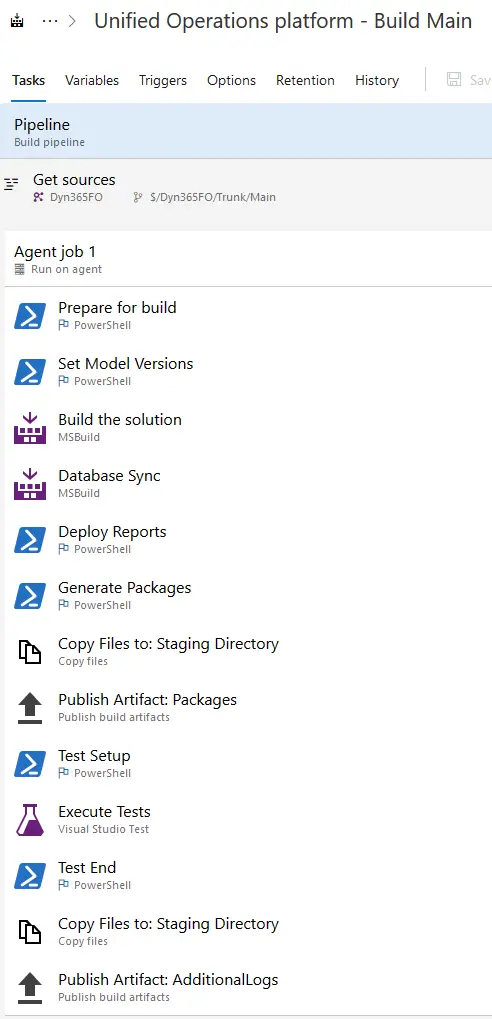

Set up the new Azure DevOps tasks for Packaging and Model Versioning

Almost all the tasks of the default build definition use PowerShell scripts that run on the Build VM. We can change 3 of those steps for newer tasks. In order to use these newer tasks, we need to install the “Dynamics 365 Unified Operations Tools”. We’ll be using them to set up our release pipeline too so consider doing it now.

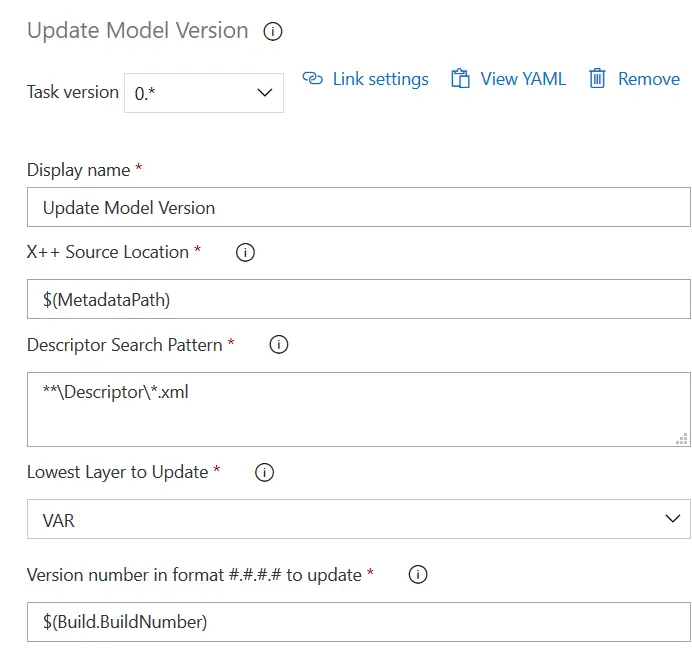

Update Model Version task

This one is the easiest, just add it to your build definition under the current model versioning task, disable the original one and you’re done. If you have any filters in your current task, like excluding any model, you must add the filter in the Descriptor Search Pattern field using Azure DevOps pattern syntax.

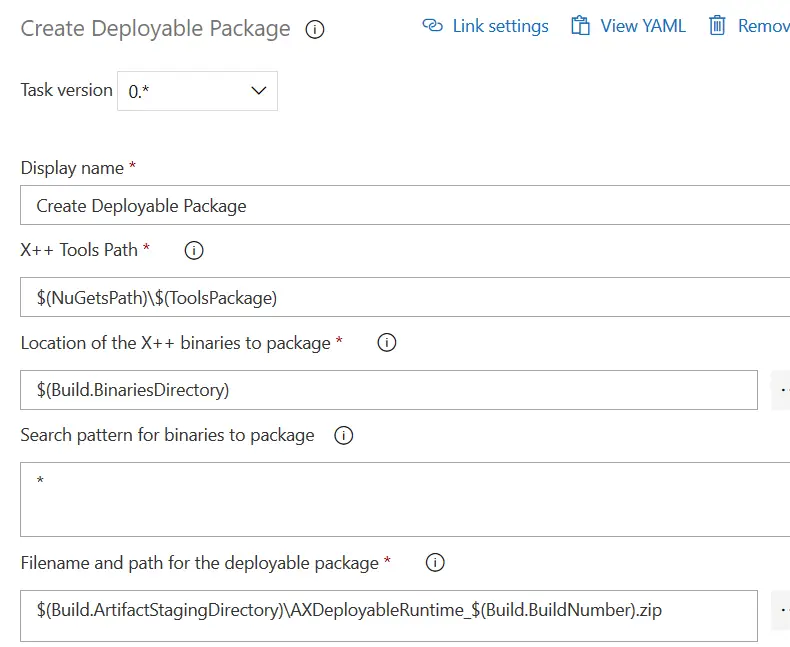

Create Deployable Package task

This task will replace the Generate packages task from the current build definitions. To set it up we just need to make a couple of changes to the default values:

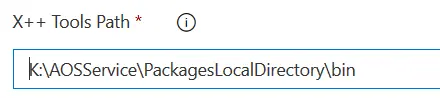

X++ Tools Path

This is your build VM’s physical bin folder. The AosService folder is usually on the K drive for cloud-hosted VMs. I guess this will change when we go VM-less to do the builds.

Update!: the drive letter can be replaced with $(ServiceDrive), getting a path like $(ServiceDrive)\AOSService\PackagesLocalDirectory\bin.

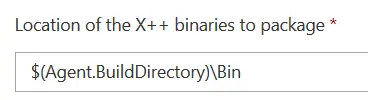

Location of the X++ binaries to package

The task comes with this field filled in as $(Build.BinariesDirectory), but this didn’t work out for our build definitions. Maybe the variable isn’t set up in the proj file. After changing this to $(Agent.BuildDirectory)\Bin the package is generated.

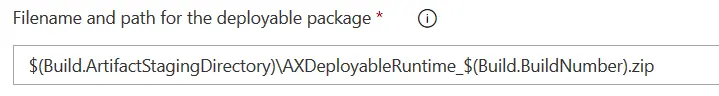

Filename and path for the deployable package

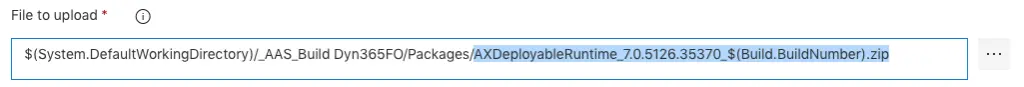

The path on the image should be changed to $(Build.ArtifactStagingDirectory)\Packages\AXDeployableRuntime_$(Build.BuildNumber).zip. You can leave it without the Packages folder in the path, but if you do that you will need to change the Path to Publish field in the Publish Artifact: Package step of the definition.

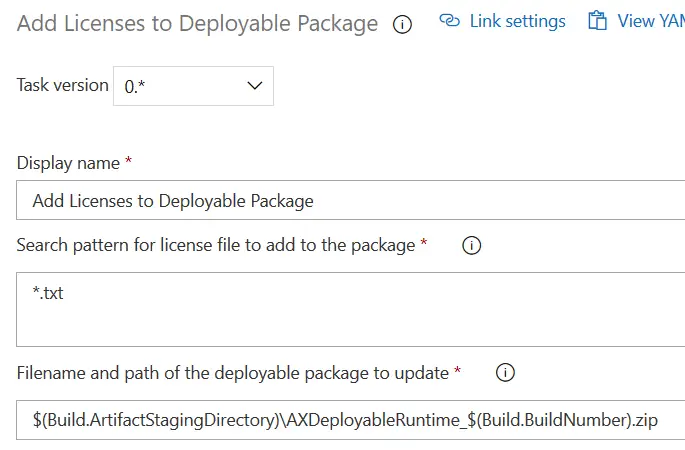

Add Licenses to Deployable Package task

This task will add the license files to an existing Deployable Package. Remember that the path of the deployable package must be the same as the one in the Create Deployable Package task.

Azure hosted build for Dynamics 365 Finance & SCM

The day we’ve been waiting for has come! The Azure hosted builds have been in public preview since PU35!! We can now stop asking Joris when this will be available, because it already is! Check the docs!

I’ve been able to write this because I’ve been testing it for a few months with access to the private preview. And of course thanks to Joris for inviting us to the preview!

What does this mean? We no longer need a VM to run the build pipelines! Nah, we still need it! If you’re running tests or synchronizing the DB as a part of your build pipeline you still need the VM. But we can move CI builds to the Azure hosted agent!

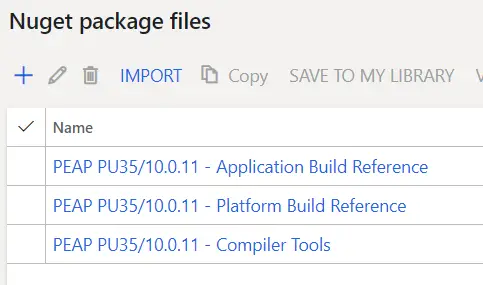

Remember this is a public preview. If you want to join the preview you first need to be part of the Dynamics 365 Insider Program where you can join the “Dynamics 365 for Finance and Operations Insider Community“. Once invited you should see a new LCS project called PEAP Assets, and inside its Asset Library you’ll find the nugets in the Nuget package section.

Azure agents

With the capacity to run an extra Azure-hosted build we get another agent to run a pipeline, so we can run multiple pipelines at the same time. But it still won’t be parallel pipelines of the same kind, because we only get one VM-less agent. This means we can run a self-hosted and an Azure-hosted pipeline at the same time, but we cannot run two of the same type in parallel. If we want that we need to purchase extra agents.

With a private Azure DevOps project we get 2GB of Artifacts space (we’ll see that later), one self-hosted agent and one Microsoft-hosted agent with 1800 free minutes per month:

We’ll still keep the build VM, so it’s difficult to tell a customer we need to pay extra money without getting rid of its cost. Plus we’ve been doing everything with one agent until now and it’s been fine, right? So take this as extra capacity: we can divide the builds between both agents and leave the MS-hosted one for short builds to squeeze the 1800 free minutes as much as possible.
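To get a feel for how far the free minutes go, here’s a back-of-the-envelope sketch. It assumes the 1800 minutes are a monthly allowance, and the 15-minute build duration is a made-up number, so plug in your own.

```python
def builds_per_month(free_minutes: int, minutes_per_build: int) -> int:
    """How many complete builds fit in the free Microsoft-hosted allowance."""
    if minutes_per_build <= 0:
        raise ValueError("minutes_per_build must be positive")
    return free_minutes // minutes_per_build


# 1800 free minutes (from the text) and a hypothetical 15-minute CI build
print(builds_per_month(1800, 15))  # 120
```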

How does it work?

There’s really no magic in this. We move from a self-hosted agent in the build VM to a Microsoft-hosted agent.

The Azure hosted build relies on nuget packages to compile our X++ code. The contents of the PackagesLocalDirectory folder, platform and the compiler tools have basically been put into nugets and what we have in the build VM is now on 3 nugets.

When the build runs it downloads & installs the nugets and uses them to compile our code on the Azure hosted build along with the standard packages.

What do I need?

To configure the Azure hosted build we need:

- The 3 nuget packages from LCS: Compiler tools, Platform X++ and Application X++.

- A user with rights at the organization level to upload the nugets to Azure DevOps.

- Some patience to get everything running 🙂

So the first step is going to the PEAP LCS’ Asset Library and downloading the 3 nuget packages:

Azure DevOps artifact

All of this can be done on your PC or in a dev VM, but you’ll need to add some files and a VS project to your source control so you need to use the developer box for sure.

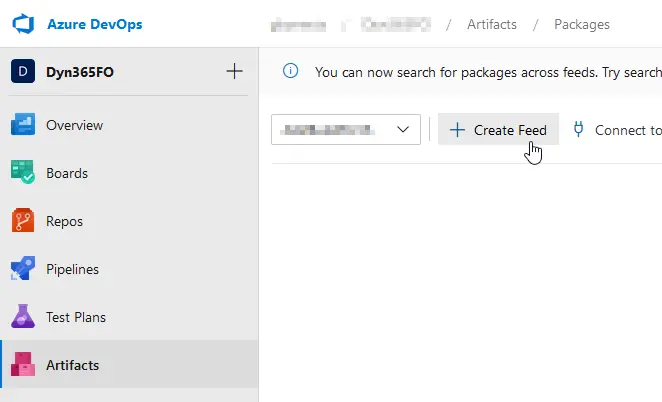

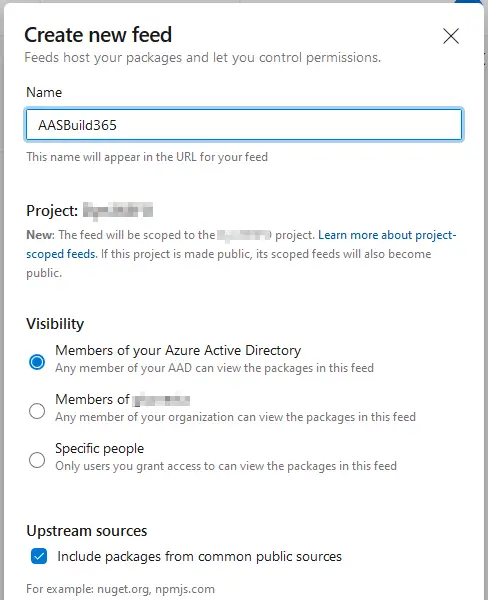

Head to your Azure DevOps project and go to the Artifacts section. Here we’ll create a new feed and give it a name:

You get 2GB for artifacts and the 3 nuget packages take around 500MB, so you should have no issues with space unless you have other artifacts in your project.

Now press the “Connect to feed” button and select nuget.exe. You’ll find the instructions to continue there but I’ll explain it anyway.

Then you need to download nuget.exe and put it in the Windows PATH. You can also put the nugets and nuget.exe in the same folder and forget about the PATH. Up to you. Finally, install the credential provider: download this PowerShell script and run it. If the script keeps asking for your credentials and fails, try adding -AddNetfx as a parameter. Thanks to Erik Norell for finding this and sharing it in the comments of the original post!

Create a new file called nuget.config in the same folder where you’ve downloaded the nugets. It will have the content you can see in the “Connect to feed” page, something like this:

    <?xml version="1.0" encoding="utf-8"?>
    <configuration>
      <packageSources>
        <clear />
        <add key="AASBuild" value="https://pkgs.dev.azure.com/aariste/aariste365FO/_packaging/AASBuild/nuget/v3/index.json" />
      </packageSources>
    </configuration>

This file’s content has to be exactly the same as what’s displayed in your “Connect to feed” page.
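Before pushing anything, it can be worth checking that the nuget.config you pasted is well-formed and exposes the feed name you expect. The following is just a sanity-check sketch using Python’s standard XML parser. The `read_package_sources` name is made up for this example, and the feed URL is the one from the sample above.

```python
import xml.etree.ElementTree as ET
from typing import Dict


def read_package_sources(config_xml: str) -> Dict[str, str]:
    """Return {feed name: feed URL} from the contents of a nuget.config."""
    # Parse from bytes so the encoding declaration in the file is honored
    root = ET.fromstring(config_xml.encode("utf-8"))
    sources = {}
    for add in root.findall("./packageSources/add"):
        sources[add.get("key")] = add.get("value")
    return sources


SAMPLE = """<?xml version="1.0" encoding="utf-8"?>
<configuration>
  <packageSources>
    <clear />
    <add key="AASBuild" value="https://pkgs.dev.azure.com/aariste/aariste365FO/_packaging/AASBuild/nuget/v3/index.json" />
  </packageSources>
</configuration>"""

print(read_package_sources(SAMPLE))
```

The key returned here is the name you later pass to the -Source parameter when pushing the packages.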

And finally, we’ll push (upload) the nugets to our artifacts feed. We have to do this for each one of the 3 nugets we’ve downloaded:

    nuget.exe push -Source "AASBuild" -ApiKey az <packagePath>

You’ll get prompted for the user. Remember it needs to have enough rights on the project.

Of course, you need to replace “AASBuild” with your artifact feed name. And we’re done with the artifacts.
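Since the push has to be repeated for each nuget, a small helper can build the command lines for you. This is a sketch, not an official script. It only assembles the same `nuget.exe push` command shown above for every file you give it, and the file names in the example are placeholders.

```python
from typing import List


def build_push_commands(package_paths: List[str], feed_name: str) -> List[List[str]]:
    """Build one 'nuget.exe push' command (as an argument list) per package."""
    commands = []
    for package in package_paths:
        commands.append(
            ["nuget.exe", "push", "-Source", feed_name, "-ApiKey", "az", package]
        )
    return commands


# Placeholder file names: use the .nupkg files you downloaded from LCS
for command in build_push_commands(["compiler.nupkg", "platform.nupkg"], "AASBuild"):
    print(" ".join(command))
```

On a machine with nuget.exe in the PATH, each list could be passed to `subprocess.run(command, check=True)`, run from the folder that contains your nuget.config.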

Prepare Azure DevOps

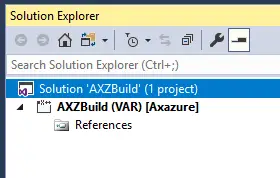

This new agent needs a solution to build our packages. This means we have to create a new solution in Visual Studio with an empty project, and set the package of the project to our main package. Like this:

If you have more than one package or model, you need to add a project to this solution for each separate model you have.

We have to create another file called packages.config with the following content:

    <?xml version="1.0" encoding="utf-8"?>
    <packages>
      <package id="Microsoft.Dynamics.AX.Platform.DevALM.BuildXpp" version="7.0.5644.16778" targetFramework="net40" />
      <package id="Microsoft.Dynamics.AX.Application.DevALM.BuildXpp" version="10.0.464.13" targetFramework="net40" />
      <package id="Microsoft.Dynamics.AX.Platform.CompilerPackage" version="7.0.5644.16778" targetFramework="net40" />
    </packages>

The version tag will depend on when you’re reading this, but the one above is the correct one for PU35. We’ll need to update this file each time a new version of the nugets is published.
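Because packages.config has to be touched for every new version, the update can be scripted. Here’s a minimal sketch using Python’s standard XML library. The `update_package_versions` name is made up for this example, and the new version numbers are simply the ones that appear later in this guide for 10.0.18.

```python
import xml.etree.ElementTree as ET
from typing import Dict


def update_package_versions(config_xml: str, new_versions: Dict[str, str]) -> str:
    """Return packages.config content with the given package versions replaced."""
    root = ET.fromstring(config_xml.encode("utf-8"))
    for package in root.findall("package"):
        package_id = package.get("id")
        if package_id in new_versions:
            package.set("version", new_versions[package_id])
    # Note: the returned string has no XML declaration line
    return ET.tostring(root, encoding="unicode")


PU35 = """<?xml version="1.0" encoding="utf-8"?>
<packages>
  <package id="Microsoft.Dynamics.AX.Platform.DevALM.BuildXpp" version="7.0.5644.16778" targetFramework="net40" />
  <package id="Microsoft.Dynamics.AX.Application.DevALM.BuildXpp" version="10.0.464.13" targetFramework="net40" />
  <package id="Microsoft.Dynamics.AX.Platform.CompilerPackage" version="7.0.5644.16778" targetFramework="net40" />
</packages>"""

print(update_package_versions(PU35, {
    "Microsoft.Dynamics.AX.Platform.DevALM.BuildXpp": "7.0.5968.16973",
    "Microsoft.Dynamics.AX.Application.DevALM.BuildXpp": "10.0.793.16",
    "Microsoft.Dynamics.AX.Platform.CompilerPackage": "7.0.5968.16973",
}))
```

Packages that aren’t listed in `new_versions` keep their current version, so a typo in a package id silently changes nothing. Check the output before checking the file in.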

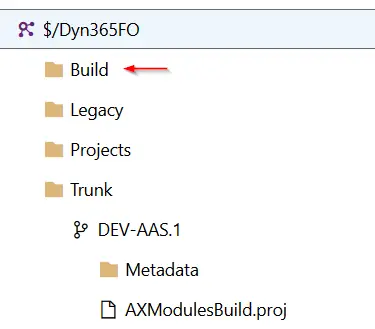

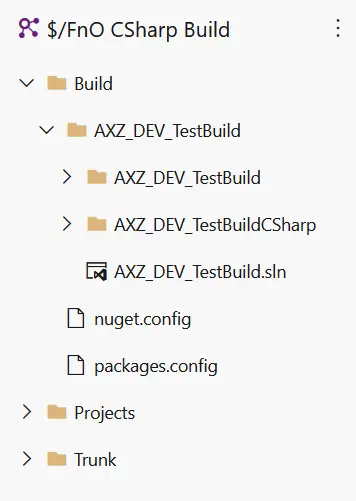

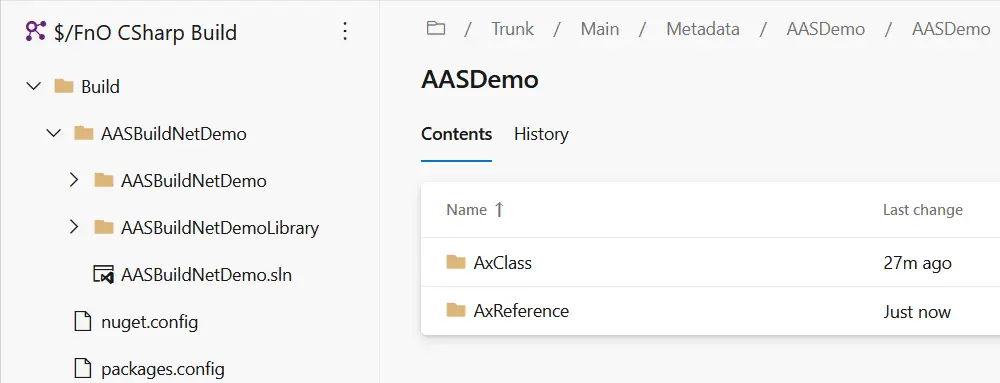

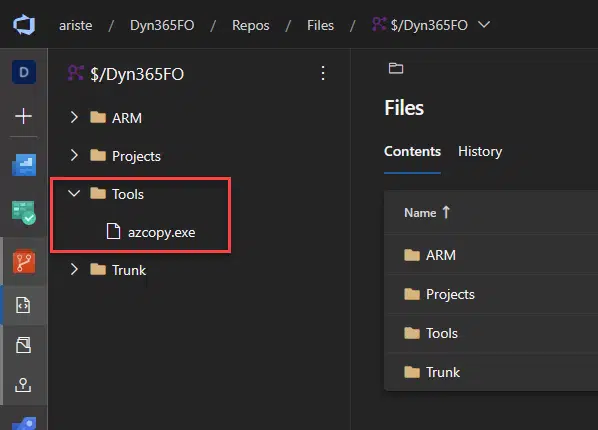

And, to end with this part, we need to add the solution, the nuget.config and the packages.config files to TFVC. This is what I’ve done:

You can see I’ve created a Build folder in the root of my DevOps project. That’s only my preference, but I like to only have code in my branches, even the projects are outside of the branches, I only want the code to move between merges and branches. Place the files and solution inside the Build folder (or wherever you decide).

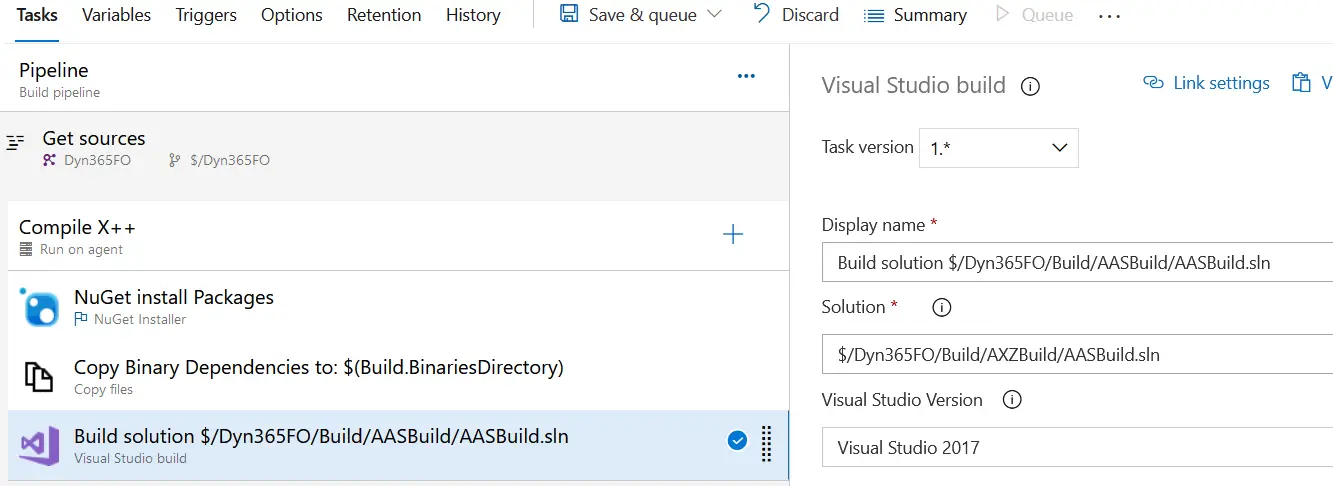

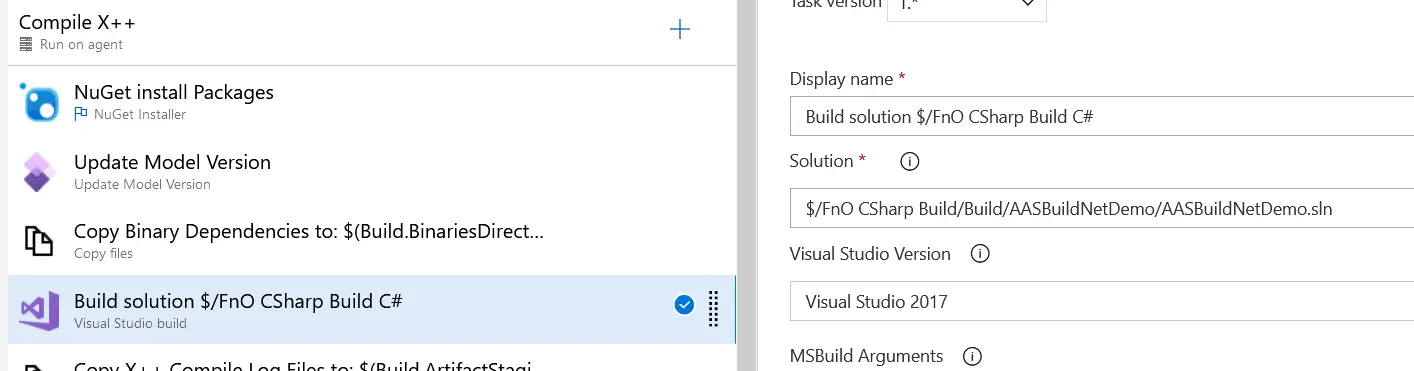

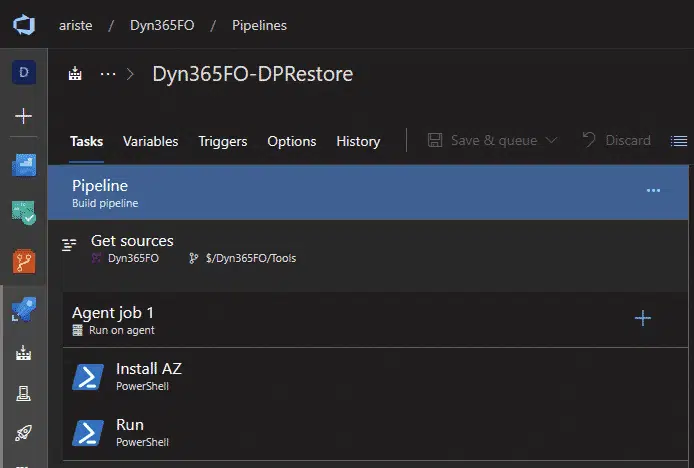

Configure pipeline

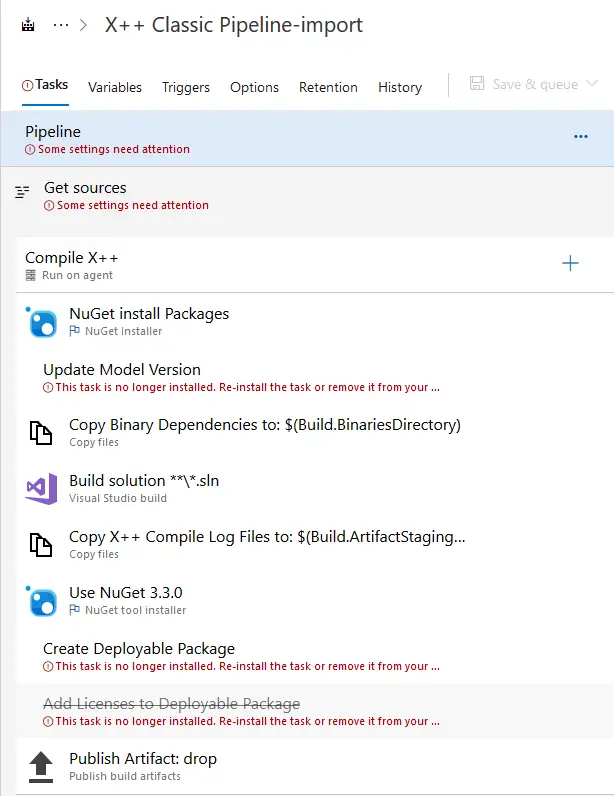

Now we need to create a new pipeline, you can just import this template from the newly created X++ (Dynamics 365) Samples and Tools Github project. After importing the template we’ll modify it a bit. Initially, it will look like this:

As you can see the pipeline has all the steps needed to generate the DP, but some of them, the ones contained in the Dynamics 365 tasks, won’t load correctly after the import. You just need to add those steps to your pipeline manually and complete its setup.

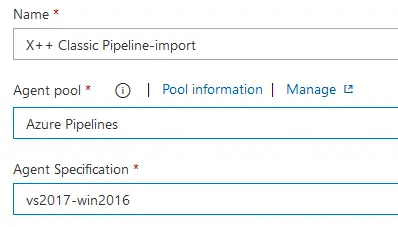

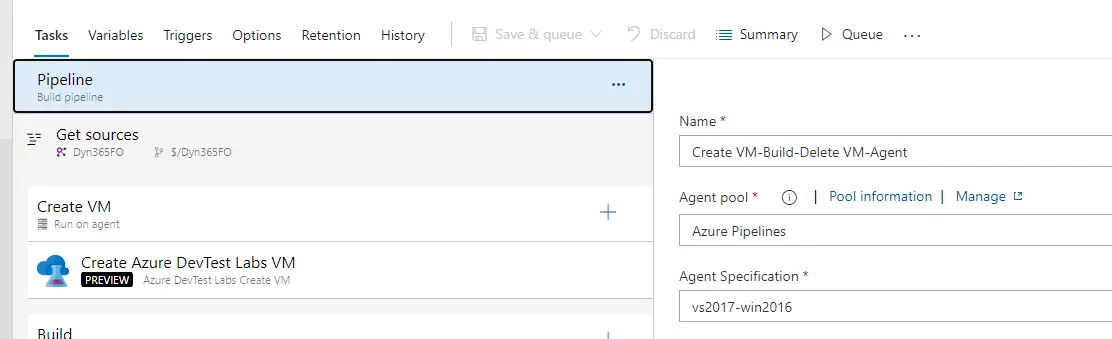

Pipeline root

You need to select the Hosted Azure Pipelines for the Agent pool, and vs2017-win2016 as Agent Specification.

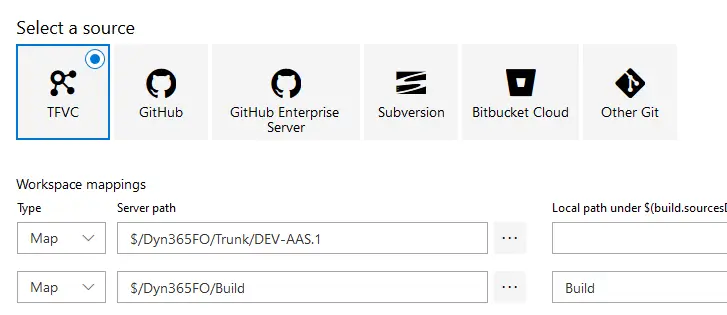

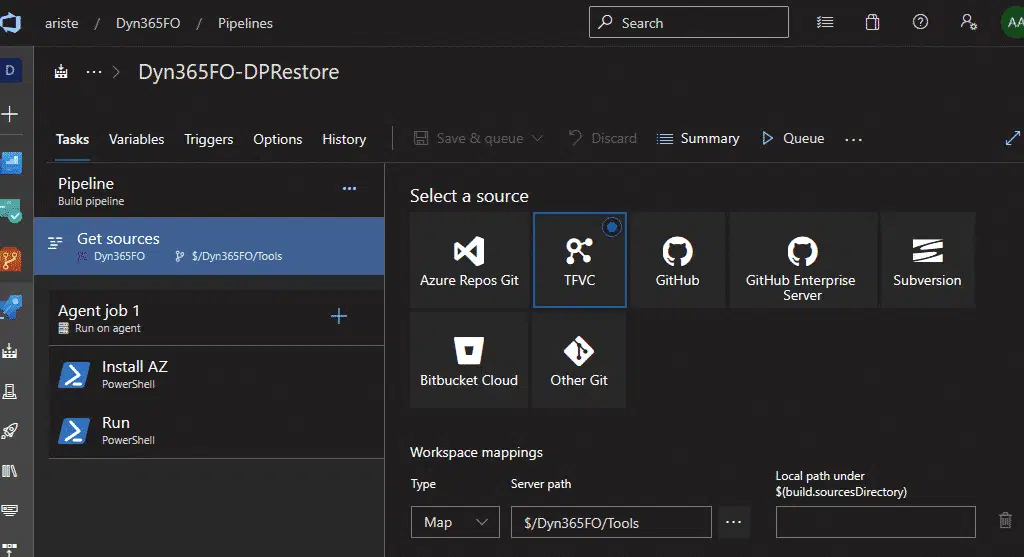

Get sources

I’ve mapped 2 things here: our codebase in the first mapping and the Build folder where I’ve added the solution and config files. If you’ve placed these files inside your Metadata folder you don’t need the extra mapping.

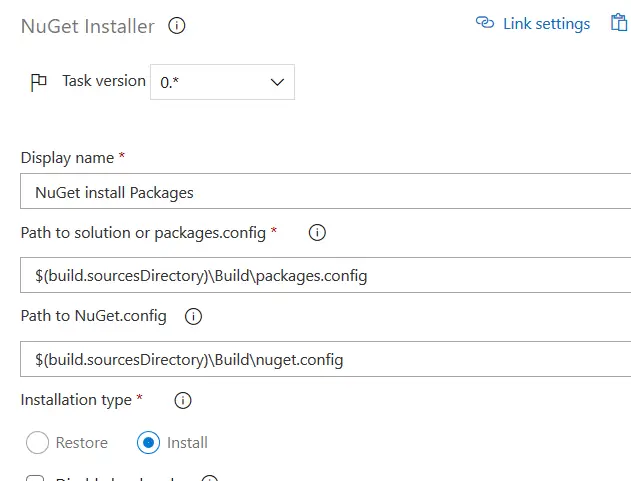

NuGet install Packages

This step gets the nugets from our artifacts feed and installs them so they can be used in each pipeline execution.

The command uses the config files we have uploaded to the Build folder, and as you can see it’s fetching the files from the $(build.sourcesDirectory)\Build directory we’ve configured in the Get sources step. If you’ve placed those files in a different place you need to change the paths as needed.

Update Model Version

This is one of the steps that are displaying issues even though I got the Dynamics 365 tools installed from the Azure DevOps marketplace. If you got it right you probably don’t need to change anything. If you have the same issue as me, just add a new step and select the “Update Model Version” task and change the fields so it looks like this:

Build solution

In the build solution step, you have a wildcard in the solution field: **\*.sln. If you leave this wildcard it will build every solution it finds in the repo, with all their projects, and depending on how many you have, the build could time out.

I solve this by selecting a single solution that contains all the models I have, which I’ve placed in the Build folder in my repo. Remember to update that solution if you add or remove any model.

Thanks to Ievgen Miroshnikov for pointing this out!

There could be an additional issue with the rnrproj files as Josh Williams points out in a comment. If your project was created pre-PU27 try creating a new solution to avoid problems.

Create Deployable Package

This is another one of the steps that are not loading correctly for me. Again, add it and change as needed:

Add Licenses to Deployable Package

Another step with issues. Do the same as with the others:

And that’s all. You can queue the build to test if it’s working. For the first runs you can disable the steps after the “Build solution” one to see if the nugets are downloaded correctly and your code built. After that try generating the DP and publishing the artifact.

You’ve configured your Azure hosted build. Now it’s your turn to decide in which cases you will use the self-hosted or the Azure hosted build.

Update for version 10.0.18

Since version 10.0.18 we’ll be getting 4 NuGet packages instead of 3, because the size of the Microsoft.Dynamics.AX.Application.DevALM.BuildXpp NuGet is getting near or over the maximum size of 500MB, so it will come as 2 NuGet packages from now on.

You can read about this in the docs.

There are just 2 small changes we need to make if we’re already using the pipeline: one to the packages.config file and another one to the pipeline.

packages.config

The packages.config file will have an additional line for the Application Suite NuGet.

    <?xml version="1.0" encoding="utf-8"?>
    <packages>
      <package id="Microsoft.Dynamics.AX.Platform.DevALM.BuildXpp" version="7.0.5968.16973" targetFramework="net40" />
      <package id="Microsoft.Dynamics.AX.Application.DevALM.BuildXpp" version="10.0.793.16" targetFramework="net40" />
      <package id="Microsoft.Dynamics.AX.ApplicationSuite.DevALM.BuildXpp" version="10.0.793.16" targetFramework="net40" />
      <package id="Microsoft.Dynamics.AX.Platform.CompilerPackage" version="7.0.5968.16973" targetFramework="net40" />
    </packages>

Pipeline

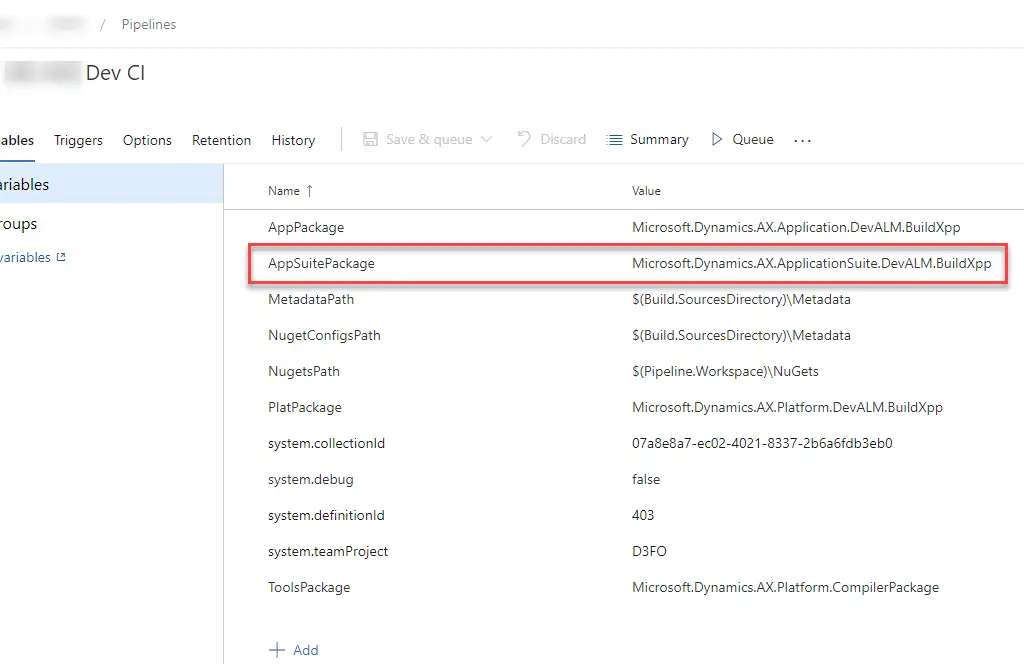

We need to add a new variable to the pipeline variables called AppSuitePackage with the value Microsoft.Dynamics.AX.ApplicationSuite.DevALM.BuildXpp.

And then use it in the build step, changing the MSBuild arguments to:

    /p:BuildTasksDirectory="$(NugetsPath)\$(ToolsPackage)\DevAlm" /p:MetadataDirectory="$(MetadataPath)" /p:FrameworkDirectory="$(NuGetsPath)\$(ToolsPackage)" /p:ReferenceFolder="$(NuGetsPath)\$(PlatPackage)\ref\net40;$(NuGetsPath)\$(AppPackage)\ref\net40;$(MetadataPath);$(Build.BinariesDirectory);$(NuGetsPath)\$(AppSuitePackage)\ref\net40" /p:ReferencePath="$(NuGetsPath)\$(ToolsPackage)" /p:OutputDirectory="$(Build.BinariesDirectory)"

Azure DevTest Labs powered builds

The end of Tier-1 Microsoft-managed build VMs is near, and this will leave us without the capacity to synchronize the DB or run tests in a pipeline, unless we deploy a new build VM in our, or our customer’s, Azure subscription. Of course, there might be a cost concern with it, and there’s where Azure DevTest Labs can help us!

This post has been written thanks to Joris de Gruyter’s session at the last DynamicsCon: Azure Devops Automation for Finance and Operations Like You’ve Never Seen! And there’s also been some investigation and (a lot of) trial and error on my side until everything worked.

If you want to know more about builds, releases, and the Dev ALM of Dynamics 365 you can read my full guide on MSDyn365 & Azure DevOps ALM.

But first…

What I’m showing in this post is not a perfect blueprint. There’s a high probability that if you try exactly the same as I do here, you won’t get the same result. But it’s a good guide to get started and do some investigation on your own and learn.

Azure DevTest Labs

Azure DevTest Labs is an Azure tool/service that allows us to deploy virtual machines and integrate them with Azure DevOps pipelines, and many other things, but what I’m going to explain is just the VM and pipeline part.

What will I show in this post? How to prepare a Dynamics 365 Finance and Operations VHD image to be used as the base to create a build virtual machine from an Azure DevOps pipeline, build our codebase, synchronize the DB, run tests, even deploy the reports, generate the deployable package and delete the VM.

Getting and preparing the VHD

This is by far the most tedious part of the whole process because you need to download 11 ZIP files from LCS’ Shared Asset Library, and we all know how fast things download from LCS.

And to speed it up we can create a blob storage account on Azure and once more turn to Mötz Jensen’s d365fo.tools and use the Invoke-D365AzCopyTransfer cmdlet. Just go to LCS, click on the “Generate SAS link” button for each file, use it as the source parameter in the command and your blob SAS URL as the destination one. Once you have all the files in your blob you can download them to your local PC at a good speed.

Once you’ve unzipped the VHD you need to change it from Dynamic to Fixed using this PowerShell command:

Convert-VHD -Path VHDLOCATION.vhd -DestinationPath NEWVHD.vhd -VHDType Fixed

The reason is you can’t create an Azure VM from a dynamically-sized VHD. And it took me several attempts to notice this 🙂
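If you want to double-check the result before uploading anything, the disk type can be read from the VHD footer. This is a minimal Python sketch, not part of the process I followed: it assumes the standard 512-byte footer at the end of the file, with the “conectix” cookie at the start and the disk type stored as a big-endian integer at offset 60. The function names are made up for the example.

```python
import struct

# Disk-type codes stored in the "Disk Type" field of a VHD footer.
VHD_DISK_TYPES = {2: "fixed", 3: "dynamic", 4: "differencing"}
FOOTER_SIZE = 512
DISK_TYPE_OFFSET = 60  # 4-byte big-endian integer inside the footer


def vhd_disk_type(footer: bytes) -> str:
    """Return the disk type described by a 512-byte VHD footer."""
    if len(footer) != FOOTER_SIZE or footer[:8] != b"conectix":
        raise ValueError("not a VHD footer")
    (code,) = struct.unpack_from(">I", footer, DISK_TYPE_OFFSET)
    return VHD_DISK_TYPES.get(code, f"unknown ({code})")


def read_vhd_footer(path: str) -> bytes:
    """Read the last 512 bytes of a .vhd file, which is where the footer lives."""
    with open(path, "rb") as handle:
        handle.seek(-FOOTER_SIZE, 2)  # 2 = seek relative to the end of the file
        return handle.read(FOOTER_SIZE)
```

Calling vhd_disk_type(read_vhd_footer("NEWVHD.vhd")) should return "fixed" for a converted image; anything else means the conversion still has to be done.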

Create a DevTest Labs account

To do this part you need an Azure account. If you don’t have one you can sign up for a free Azure account with a credit of 180 Euros (200 US Dollars) to be spent during 30 days, plus many other free services during 12 months.

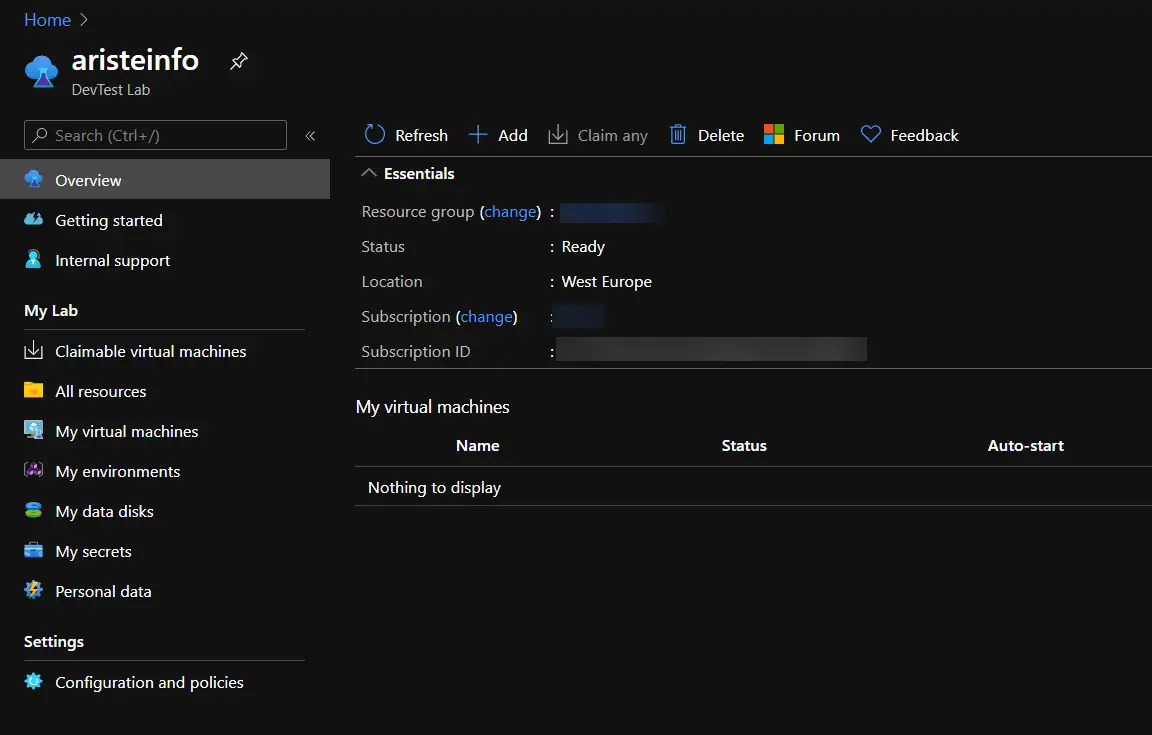

Search for DevTest Labs in the top bar and create a new DevTest Lab. Once it’s created open the details and you should see something like this:

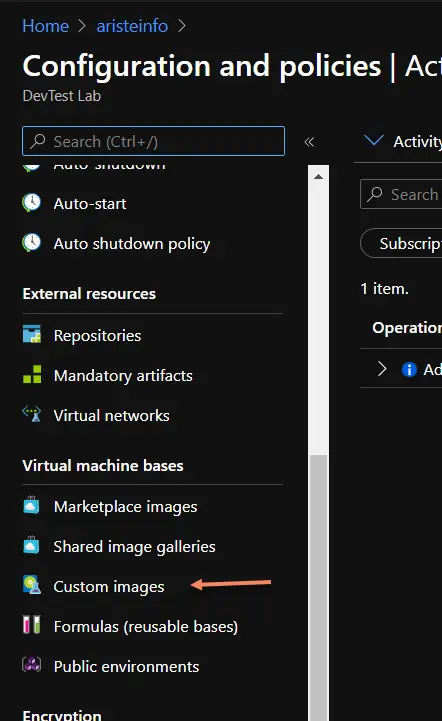

Click on the “Configuration and policies” menu item at the bottom of the list and scroll down in the menu until you see the “Virtual machine bases” section:

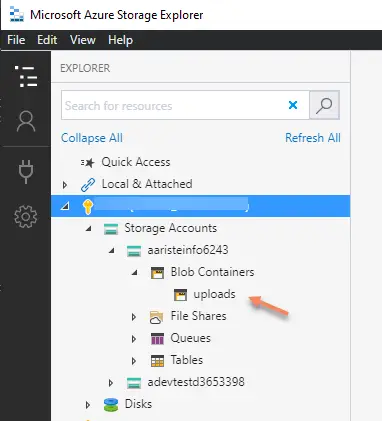

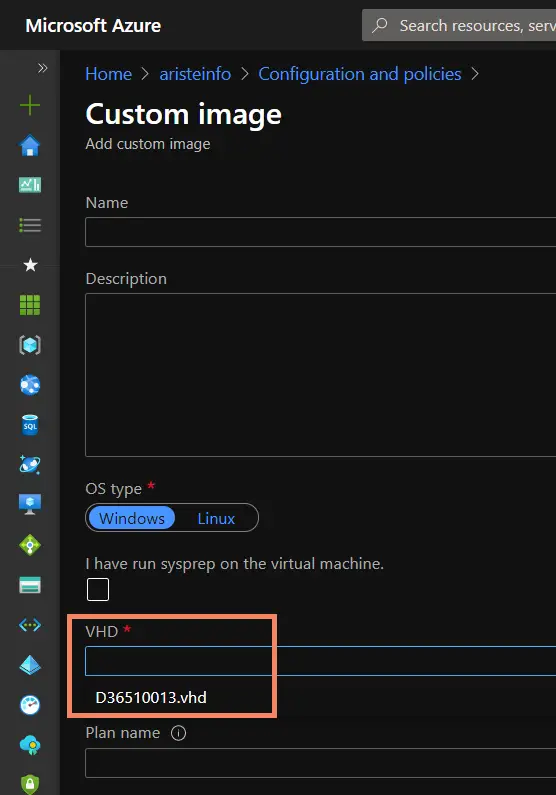

And now comes the second funniest part of the process: we need to upload the 130GB VHD image to a blob storage account! So, click the “Add” button on top and in the new dialog that will open click the “Upload a VHD using PowerShell”. This will generate a PowerShell script to upload the VHD to the DevTest Labs blob. For example:

<#

Generated script to upload a local VHD to Azure.

WARNING: The destination will be publicly available for 24 hours, after which it will expire.

Ensure you complete your upload by then.

Run the following command in a Azure PowerShell console after entering

the LocalFilePath to your VHD.

#>

Add-AzureRmVhd -Destination "https://YOURBLOB.blob.core.windows.net/uploads/tempImage.vhd?sv=2019-07-07&st=2020-12-27T09%3A08%3A26Z&se=2020-12-28T09%3A23%3A26Z&sr=b&sp=rcw&sig=YTeXpxpVEJdSM7KZle71w8NVw9oznNizSnYj8Q3hngI%3D" -LocalFilePath "<Enter VHD location here>"

An alternative to this is using the Azure Storage Explorer as you can see in the image on the left.

You should upload the VHD to the uploads blob.

Any of these methods is good to upload the VHD and I don’t really know which one is faster.

Once the VHD is uploaded open the “Custom images” option again and you should see the VHD in the drop-down:

Give the image a name and click OK.

What we have now is the base for a Dynamics 365 Finance and Operations dev VM which we need to prepare to use it as a build VM.

Creating the VM

We’ve got the essentials: a VHD ready to be used as a base to create a virtual machine in Azure. Our next step is finding a way to make the deployment of this VM predictable and automated. We’ll achieve this with Azure Resource Manager (ARM) templates.

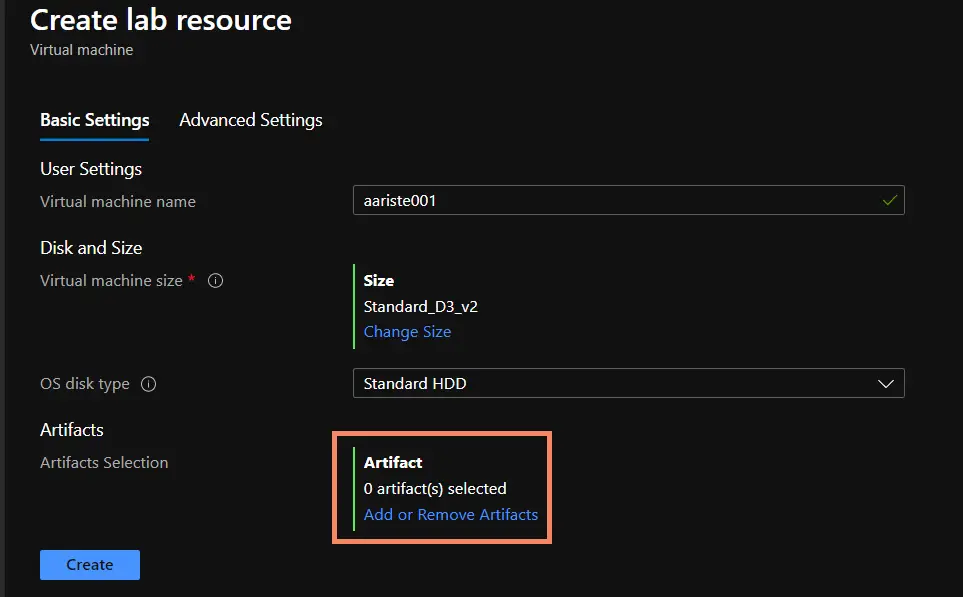

Go back to your DevTest Labs overview page and click the “Add” button, on the “Choose base” page select the base you’ve just created, and on the next screen click on the “Add or Remove Artifacts” link:

Search for WinRM, select “Configure WinRM”, and on the next screen enter “Shared IP address” in the hostname box and click “Add”.

Note: if the artifacts can’t be installed when the VM runs, check whether the Azure VM Agent is installed on the base VHD. Thanks to Joris for pointing this out!

Configure Azure DevOps Agent Service

Option A: use an artifact

Update: thanks to Florian Hopfner for reminding me of this because I forgot… If you choose Option A to install the agent service you need to do some things first!

The first thing we need to do is run some PowerShell scripts that create registry entries and environment variables in the VM. Go to C:\DynamicsSDK and run these:

Import-Module $(Join-Path -Path "C:\DynamicsSDK" -ChildPath "DynamicsSDKCommon.psm1") -Function "Write-Message", "Set-AX7SdkRegistryValues", "Set-AX7SdkEnvironmentVariables"

Set-AX7SdkEnvironmentVariables -DynamicsSDK "C:\DynamicsSDK"

Set-AX7SdkRegistryValues -DynamicsSDK "C:\DynamicsSDK" -TeamFoundationServerUrl "https://dev.azure.com/YOUR_ORG" -AosWebsiteName "AosService"

The first one will load the functions and make them available in the command line, and the other two create the environment variables and registry entries.

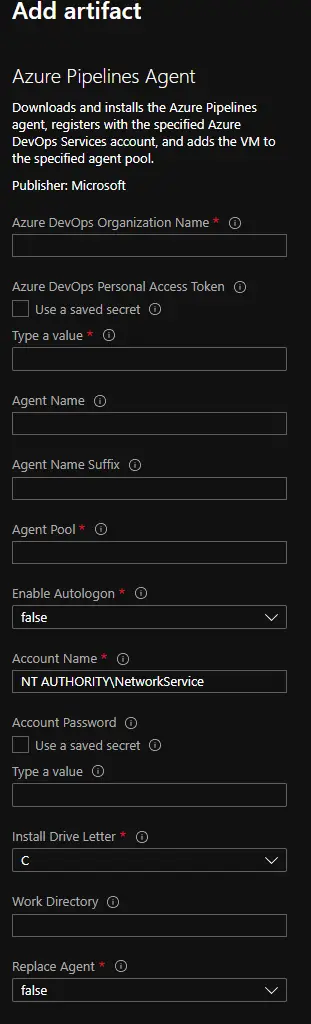

Now we need to add an artifact for the Azure DevOps agent service. This will configure the agent service on the VM each time the VM is deployed. Search for “Azure Pipelines Agent” and click it. You will see this:

We need to fill some information:

On “Azure DevOps Organization Name” you need to provide the name of your organization. For example if your AZDO URL is https://dev.azure.com/blackbeltcorp you need to use blackbeltcorp.

On “AZDO Personal Access Token” you need to provide a token generated from AZDO.

On “Agent Name” give your agent a name, like DevTestAgent. And on “Agent Pool” a name for your pool, either a new one like DevTestPool or an existing one such as Default.

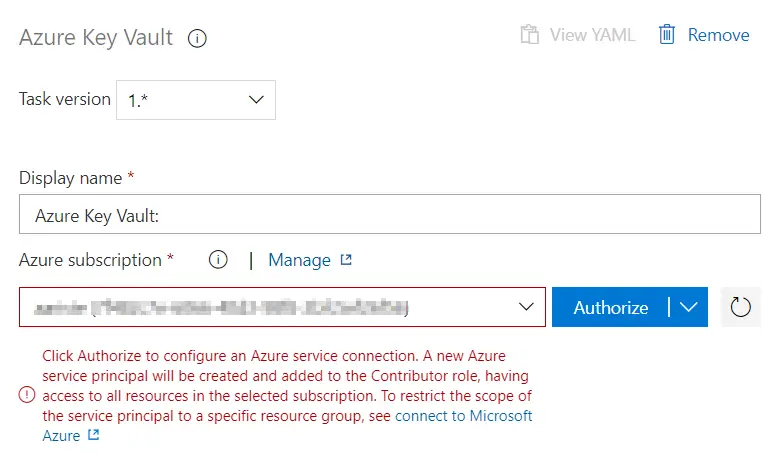

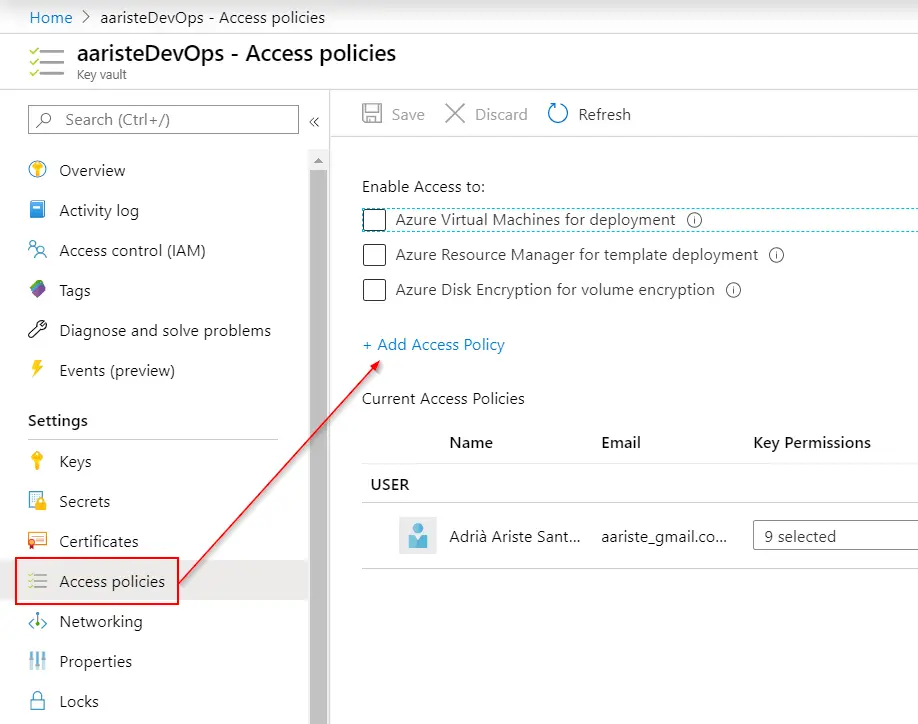

On “Account Name” use the same user that we’ll use in our pipeline later. Remember this. And on “Account Password” its password. Using secrets with a KeyVault is better, but I won’t explain this here.

And, finally, set “Replace Agent” to true.
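The organization name is the field I’ve seen filled in wrong most often, so here’s a tiny Python sketch of how it’s derived from the URL. It’s only an illustration: the function name is made up, and besides the dev.azure.com format mentioned above it also assumes the older ORG.visualstudio.com URL format.

```python
from urllib.parse import urlparse


def organization_name(azdo_url: str) -> str:
    """Extract the Azure DevOps organization name from an organization URL."""
    parsed = urlparse(azdo_url)
    host = (parsed.hostname or "").lower()
    if host == "dev.azure.com":
        # https://dev.azure.com/ORG/... -> the first path segment is the organization
        segments = [segment for segment in parsed.path.split("/") if segment]
        if segments:
            return segments[0]
    elif host.endswith(".visualstudio.com"):
        # https://ORG.visualstudio.com -> the first host label is the organization
        return host.split(".")[0]
    raise ValueError(f"cannot find an organization name in {azdo_url!r}")


if __name__ == "__main__":
    print(organization_name("https://dev.azure.com/blackbeltcorp"))
```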

Option B: Configure Azure DevOps Agent in the VM

To do this you have to create a VM from the base image you created before and then go to C:\DynamicsSDK and run the SetupBuildAgent script with the needed parameters:

SetupBuildAgent.ps1 -VSO_ProjectCollection "https://dev.azure.com/YOUR_ORG" -ServiceAccountName "myUser" -ServiceAccountPassword "mYPassword" -AgentName "DevTestAgent" -AgentPoolName "DevTestPool" -VSOAccessToken "YOUR_VSTS_TOKEN"

WARNING: If you choose option B you must create a new base image from the VM where you’ve run the script. Then repeat the WinRM steps to generate the new ARM template which we’ll see next.

ARM template

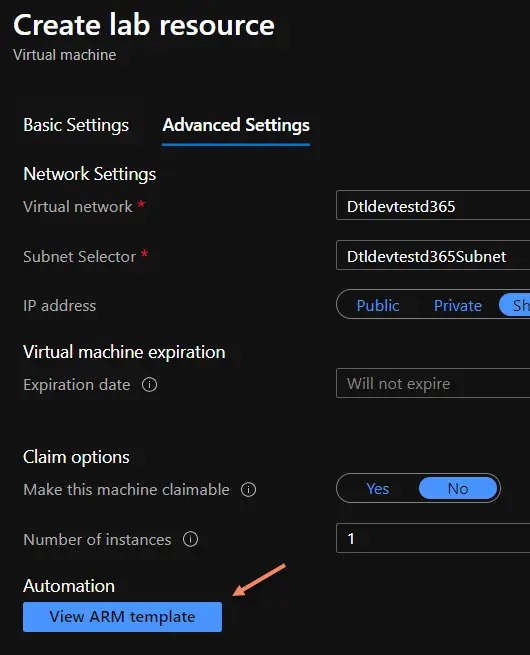

Then go to the “Advanced Settings” tab and click the “View ARM template” button:

This will display the ARM template to create the VM from our pipeline. It’s something like this:

{

"$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json",

"contentVersion": "1.0.0.0",

"parameters": {

"newVMName": {

"type": "string",

"defaultValue": "aariste001"

},

"labName": {

"type": "string",

"defaultValue": "aristeinfo"

},

"size": {

"type": "string",

"defaultValue": "Standard_B4ms"

},

"userName": {

"type": "string",

"defaultValue": "myUser"

},

"password": {

"type": "securestring",

"defaultValue": "[[[VmPassword]]"

},

"Configure_WinRM_hostName": {

"type": "string",

"defaultValue": "Public IP address"

},

"Azure_Pipelines_Agent_vstsAccount": {

"type": "string",

"defaultValue": "ariste"

},

"Azure_Pipelines_Agent_vstsPassword": {

"type": "securestring"

},

"Azure_Pipelines_Agent_agentName": {

"type": "string",

"defaultValue": "DevTestAgent"

},

"Azure_Pipelines_Agent_agentNameSuffix": {

"type": "string",

"defaultValue": ""

},

"Azure_Pipelines_Agent_poolName": {

"type": "string",

"defaultValue": "DevTestPool"

},

"Azure_Pipelines_Agent_RunAsAutoLogon": {

"type": "bool",

"defaultValue": false

},

"Azure_Pipelines_Agent_windowsLogonAccount": {

"type": "string",

"defaultValue": "aariste"

},

"Azure_Pipelines_Agent_windowsLogonPassword": {

"type": "securestring"

},

"Azure_Pipelines_Agent_driveLetter": {

"type": "string",

"defaultValue": "C"

},

"Azure_Pipelines_Agent_workDirectory": {

"type": "string",

"defaultValue": "DevTestAgent"

},

"Azure_Pipelines_Agent_replaceAgent": {

"type": "bool",

"defaultValue": true

}

},

"variables": {

"labSubnetName": "[concat(variables('labVirtualNetworkName'), 'Subnet')]",

"labVirtualNetworkId": "[resourceId('Microsoft.DevTestLab/labs/virtualnetworks', parameters('labName'), variables('labVirtualNetworkName'))]",

"labVirtualNetworkName": "[concat('Dtl', parameters('labName'))]",

"vmId": "[resourceId ('Microsoft.DevTestLab/labs/virtualmachines', parameters('labName'), parameters('newVMName'))]",

"vmName": "[concat(parameters('labName'), '/', parameters('newVMName'))]"

},

"resources": [

{

"apiVersion": "2018-10-15-preview",

"type": "Microsoft.DevTestLab/labs/virtualmachines",

"name": "[variables('vmName')]",

"location": "[resourceGroup().location]",

"properties": {

"labVirtualNetworkId": "[variables('labVirtualNetworkId')]",

"notes": "Dynamics365FnO10013AgentLessV2",

"customImageId": "/subscriptions/6715778f-c852-453d-b6bb-907ac34f280f/resourcegroups/devtestlabs365/providers/microsoft.devtestlab/labs/devtestd365/customimages/dynamics365fno10013agentlessv2",

"size": "[parameters('size')]",

"userName": "[parameters('userName')]",

"password": "[parameters('password')]",

"isAuthenticationWithSshKey": false,

"artifacts": [

{

"artifactId": "[resourceId('Microsoft.DevTestLab/labs/artifactSources/artifacts', parameters('labName'), 'public repo', 'windows-winrm')]",

"parameters": [

{

"name": "hostName",

"value": "[parameters('Configure_WinRM_hostName')]"

}

]

},

{

"artifactId": "[resourceId('Microsoft.DevTestLab/labs/artifactSources/artifacts', parameters('labName'), 'public repo', 'windows-vsts-build-agent')]",

"parameters": [

{

"name": "vstsAccount",

"value": "[parameters('Azure_Pipelines_Agent_vstsAccount')]"

},

{

"name": "vstsPassword",

"value": "[parameters('Azure_Pipelines_Agent_vstsPassword')]"

},

{

"name": "agentName",

"value": "[parameters('Azure_Pipelines_Agent_agentName')]"

},

{

"name": "agentNameSuffix",

"value": "[parameters('Azure_Pipelines_Agent_agentNameSuffix')]"

},

{

"name": "poolName",

"value": "[parameters('Azure_Pipelines_Agent_poolName')]"

},

{

"name": "RunAsAutoLogon",

"value": "[parameters('Azure_Pipelines_Agent_RunAsAutoLogon')]"

},

{

"name": "windowsLogonAccount",

"value": "[parameters('Azure_Pipelines_Agent_windowsLogonAccount')]"

},

{

"name": "windowsLogonPassword",

"value": "[parameters('Azure_Pipelines_Agent_windowsLogonPassword')]"

},

{

"name": "driveLetter",

"value": "[parameters('Azure_Pipelines_Agent_driveLetter')]"

},

{

"name": "workDirectory",

"value": "[parameters('Azure_Pipelines_Agent_workDirectory')]"

},

{

"name": "replaceAgent",

"value": "[parameters('Azure_Pipelines_Agent_replaceAgent')]"

}

]

}

],

"labSubnetName": "[variables('labSubnetName')]",

"disallowPublicIpAddress": true,

"storageType": "Premium",

"allowClaim": false,

"networkInterface": {

"sharedPublicIpAddressConfiguration": {

"inboundNatRules": [

{

"transportProtocol": "tcp",

"backendPort": 3389

}

]

}

}

}

}

],

"outputs": {

"labVMId": {

"type": "string",

"value": "[variables('vmId')]"

}

}

}

NOTE: if you’re using option B you won’t have the artifact node for the VSTS agent.

This JSON file will be used as the base to create our VMs from the Azure DevOps pipeline. This is known as Infrastructure as Code (IaC) and it’s a way of defining our infrastructure in a file as if it were code. It’s another part of the DevOps practice that should solve the “it works on my machine” issue.

If we take a look at the JSON’s parameters node there’s the following information:

- newVMName and labName will be the name of the VM and the DevTest Labs lab we’re using. The VM name is not really important because we’ll set the name later in the pipeline.

- size is the VM size, a B4ms (Standard_B4ms) in the example above, but we can change it and will do it later.

- userName & password will be the credentials to access the VM and must be the same we’ve used to configure the Azure DevOps agent.

- Configure_WinRM_hostName is the artifact we added to the VM template that will allow the pipelines to run in this machine.
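Since the VM name and size are meant to be changed per run, it can help to see what overriding template parameters looks like. The Python sketch below is an assumption-laden illustration, not what the DevTest Labs task does internally: it builds an ARM parameters file (the standard deploymentParameters layout) from a dictionary of overrides and refuses names the template doesn’t declare. The function name and the VM name are hypothetical.

```python
import json

PARAMETERS_SCHEMA = (
    "https://schema.management.azure.com/schemas/2015-01-01/"
    "deploymentParameters.json#"
)


def build_parameters_file(template: dict, overrides: dict) -> dict:
    """Build an ARM parameters document that overrides template defaults."""
    declared = template.get("parameters", {})
    unknown = sorted(set(overrides) - set(declared))
    if unknown:
        # Catch typos such as "newVmName" before the deployment does.
        raise KeyError(f"not declared in the template: {unknown}")
    return {
        "$schema": PARAMETERS_SCHEMA,
        "contentVersion": "1.0.0.0",
        "parameters": {name: {"value": value} for name, value in overrides.items()},
    }


if __name__ == "__main__":
    # Trimmed-down version of the template shown above.
    template = {
        "parameters": {
            "newVMName": {"type": "string", "defaultValue": "aariste001"},
            "size": {"type": "string", "defaultValue": "Standard_B4ms"},
        }
    }
    overrides = {"newVMName": "build-vm-001", "size": "Standard_B2ms"}  # hypothetical values
    print(json.dumps(build_parameters_file(template, overrides), indent=2))
```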

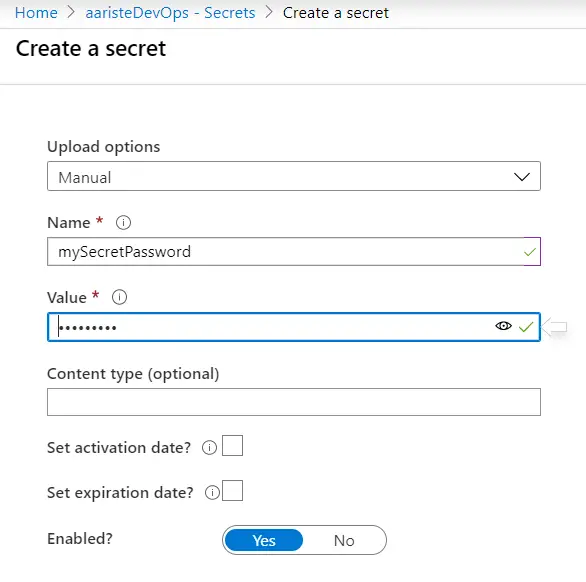

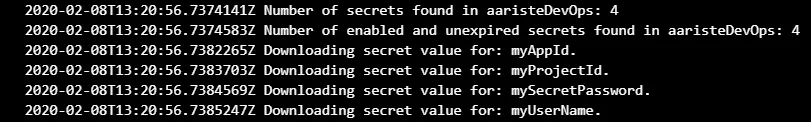

To do it faster and for demo purposes I’m using a plain text password in the ARM template, changing the password node to something like this:

"password": {

"type": "string",

"defaultValue": "yourPassword"

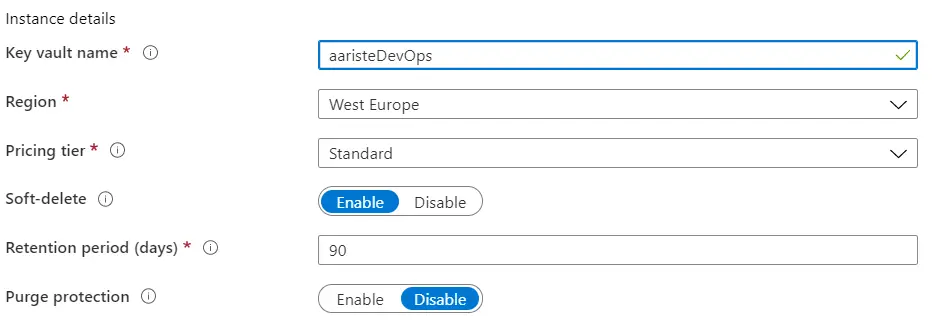

},I will do the same with all the secureString nodes, but you shouldn’t and should instead use an Azure KeyVault which comes with the DevTest Labs account.

Of course you would never upload this template to Azure DevOps with a password in plain text. There are plenty of resources online that teach how to use parameters, Azure KeyVault, etc. to accomplish this, for example this one: 6 Ways Passing Secrets to ARM Templates.
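A cheap safety net is to scan the template before checking it in. The following Python sketch is just one possible check and entirely my own assumption about what “looks like a secret”: it flags parameters whose name contains password, secret or token and that are either not of type securestring or carry a literal default value. Bracketed defaults such as the generated “[[[VmPassword]]” placeholder are treated as references, not literals.

```python
SECRET_HINTS = ("password", "secret", "token")  # assumption: naming convention only


def find_exposed_secrets(template: dict) -> list:
    """Return the names of secret-looking parameters that are not protected."""
    exposed = []
    for name, spec in template.get("parameters", {}).items():
        if not any(hint in name.lower() for hint in SECRET_HINTS):
            continue
        is_secure = str(spec.get("type", "")).lower() == "securestring"
        default = str(spec.get("defaultValue", ""))
        # A default starting with "[" is an expression or placeholder, not a literal.
        has_literal_default = bool(default) and not default.startswith("[")
        if not is_secure or has_literal_default:
            exposed.append(name)
    return sorted(exposed)


if __name__ == "__main__":
    demo = {
        "parameters": {
            "userName": {"type": "string", "defaultValue": "myUser"},
            "password": {"type": "string", "defaultValue": "yourPassword"},
            "Azure_Pipelines_Agent_vstsPassword": {"type": "securestring"},
        }
    }
    print(find_exposed_secrets(demo))
```

Run against the demo template it reports only the plain text password node; wiring a check like this into a pipeline step is left as an exercise.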

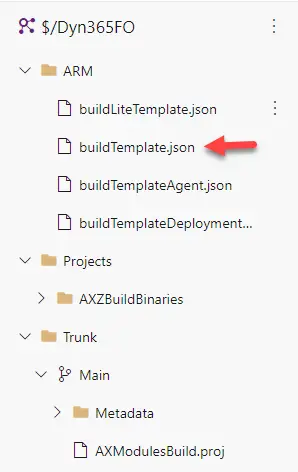

OK, now grab that file and save it to your Azure DevOps repo. I’ve created a folder in my repo’s root called ARM where I’m saving all the ARM templates:

Preparing the VM

The VHD image you download can be used as a developer VM with no additional work, just run Visual Studio, connect it to your AZDO project and done. But if you want to use it as a build box you need to do several things first.

Remember that the default user and password for these VHDs are Administrator and Pass@word1.

Disable services

First of all we will stop and disable services like the Batch, Management Reporter, SSAS, SSIS, etc. Anything you see that’s not needed to run a build.

Create a new SQL user

Open SSMS (as an Administrator) and create a new SQL user as a copy of the axdbadmin one. Then open the web.config file and update the DB user and password to use the one you’ve just created.

Prepare SSRS (optional)

If you want to deploy reports as part of your build pipeline you need to go to SSMS again (and as an Admin again), and open a new query in the reporting DB to execute the following query:

exec DeleteEncryptedContent

PowerShell Scripts

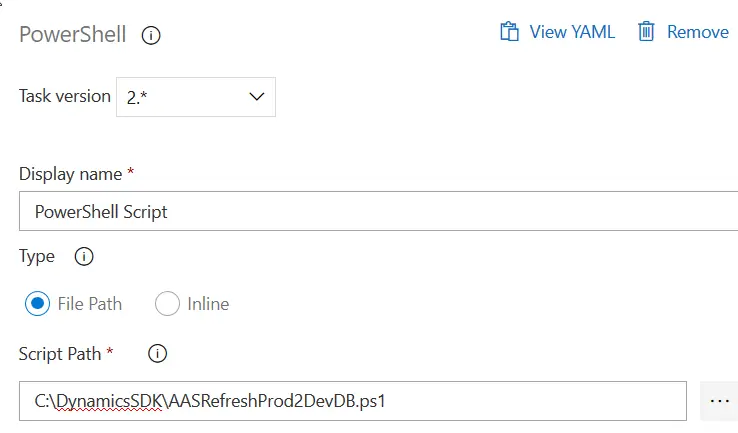

The default build definition that runs on a build VM uses several PowerShell scripts to run some tasks. I’m adding an additional script called PrepareForAgent.

The scripts can be found in the C:\DynamicsSDK folder of the VM.

PrepareForBuild

This script comes with the VM and we need to modify it to avoid one thing: the PackagesLocalDirectory backup which is usually done in the first build. We need to get rid of this or we’ll waste around an hour per run until the files are copied.

We don’t need this because our VM will be new each time we run the pipeline!

So open the script, go to line 696 and look for this piece of code:

# Create packages backup (if it does not exist).

$NewBackupCreated = Backup-AX7Packages -BackupPath $PackagesBackupPath -DeploymentPackagesPath $DeploymentPackagesPath -LogLocation $LogLocation

# Restore packages backup (unless a new backup was just created).

if (!$NewBackupCreated)

{

Restore-AX7Packages -BackupPath $PackagesBackupPath -DeploymentPackagesPath $DeploymentPackagesPath -LogLocation $LogLocation -RestoreAllFiles:$RestorePackagesAllFiles

}

if (!$DatabaseBackupToRestore)

{

$DatabaseBackupPath = Get-BackupPath -Purpose "Databases"

Backup-AX7Database -BackupPath $DatabaseBackupPath

}

else

{

# Restore a database backup (if specified).

Restore-AX7Database -DatabaseBackupToRestore $DatabaseBackupToRestore

}

We need to modify it until we end up with this:

if ($DatabaseBackupToRestore)

{

Restore-AX7Database -DatabaseBackupToRestore $DatabaseBackupToRestore

}

We just need the DB restore part and skip the backup, otherwise we’ll be losing 45 minutes in each run for something we don’t need because the VM will be deleted when the build is completed.

Optional (but recommended): install d365fo.tools

Just run this:

Install-Module -Name d365fo.tools

We can use the tools to do a module sync, partial sync or deploy just our reports instead of all.

Create a new image

Once we’ve done all these preparation steps we can log out of this VM and stop it. Do not delete it yet! Go to “Create custom image”, give the new image a name, select “I have not generalized this virtual machine” and click the “OK” button.

This will generate a new image that you can use as a base image with all the changes you’ve done to the original VHD.

Azure DevOps Pipelines

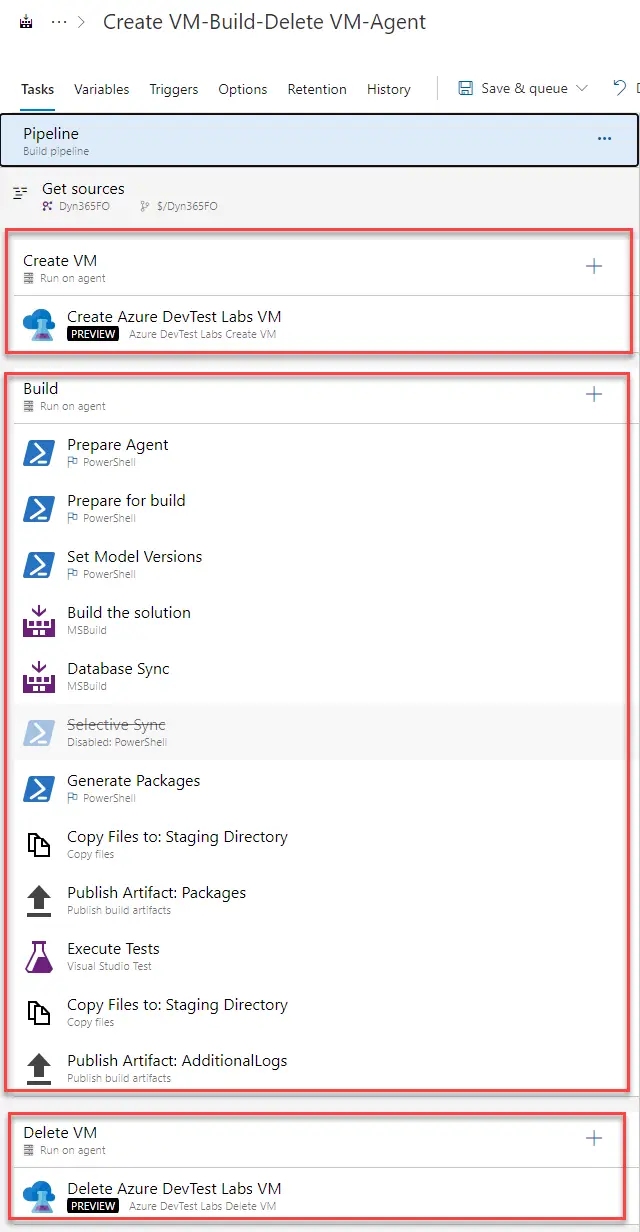

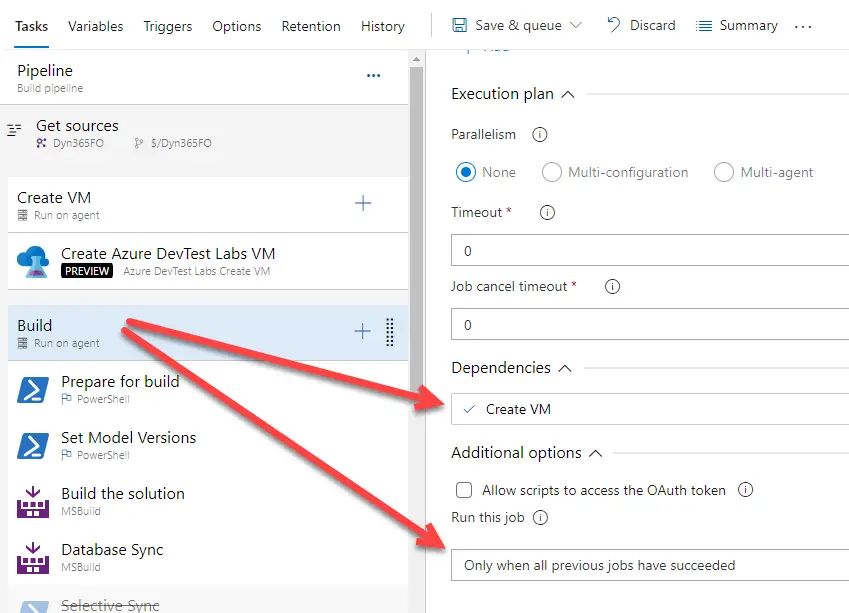

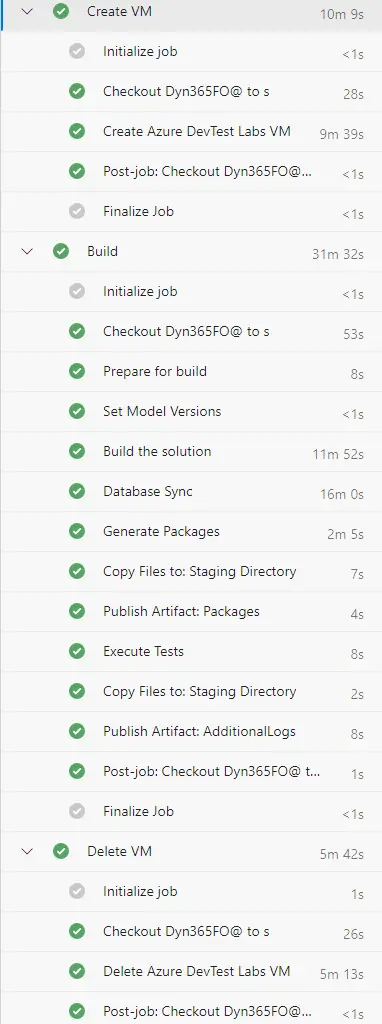

We’re ready to set up our new build pipeline in Azure DevOps. This pipeline will consist of three steps: create a new VM, run all the build steps, and delete the VM:

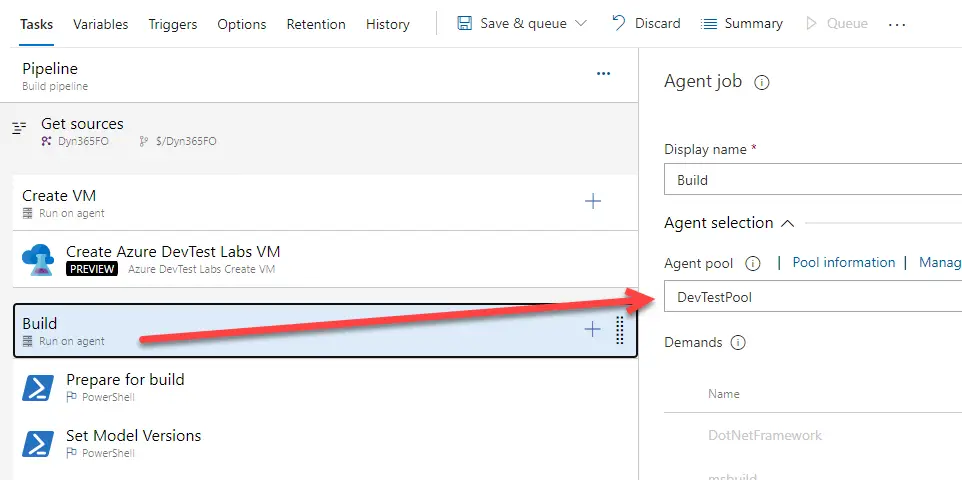

First of all check that your pipeline runs on Azure pipelines (aka Azure-hosted):

The create and delete steps will run on the Azure Pipelines pool. The build step will run on our DevTestPool pool, or whatever name you gave it when configuring the artifact on DevTest Labs or the script on the VM.

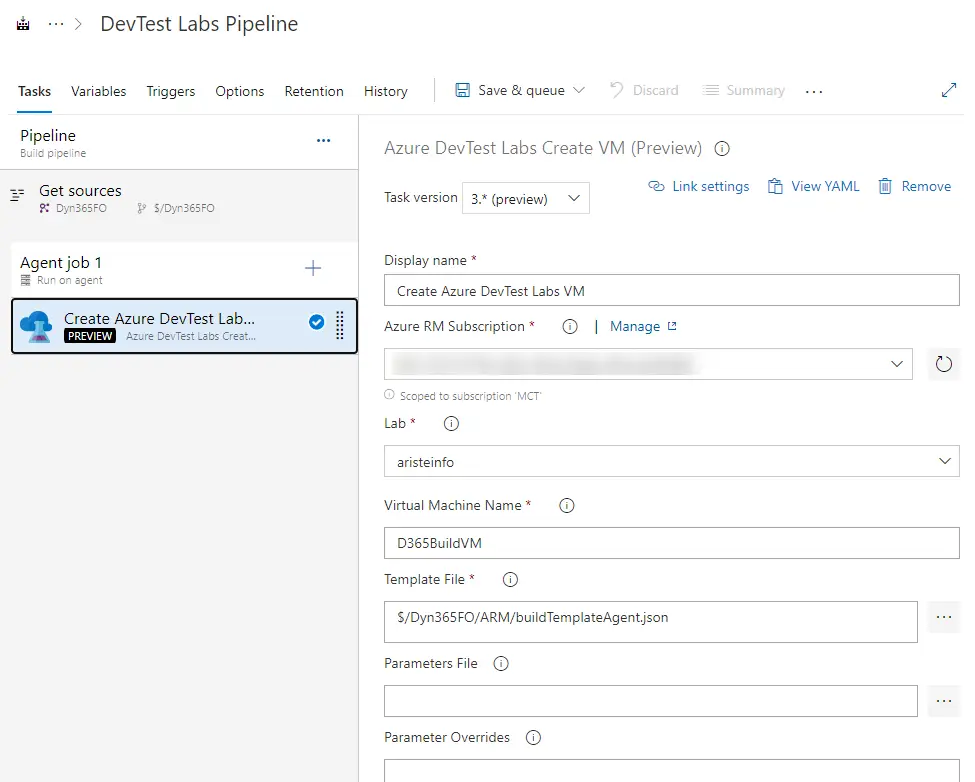

Create Azure DevTest Labs VM

Create a new pipeline and choose the “Use the classic editor” option. Make sure you’ve selected TFVC as your source and click “Continue” and “Empty job”. Add a new task to the pipeline, look for “Azure DevTest Labs Create VM”. We just need to fill in the missing parameters with our subscription, lab, etc.

Remember this step must run on the Azure-hosted pipeline.

Build

This is an easy one. Just export a working pipeline and import it. And this step needs to run on your self-hosted pool:

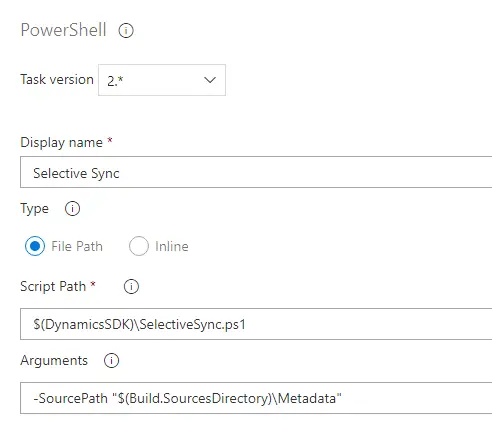

Optional: use SelectiveSync (not recommended, see next option)

You can replace the Database Sync task with a PowerShell script that will only sync the tables in your models:

Thanks Joris for the tip!

Optional: use d365fo.tools to sync your packages/models

This is a better option than the SelectiveSync above. You can synchronize your packages or models only to gain some time. This cmdlet uses sync.exe like Visual Studio does and should be better than SelectiveSync.

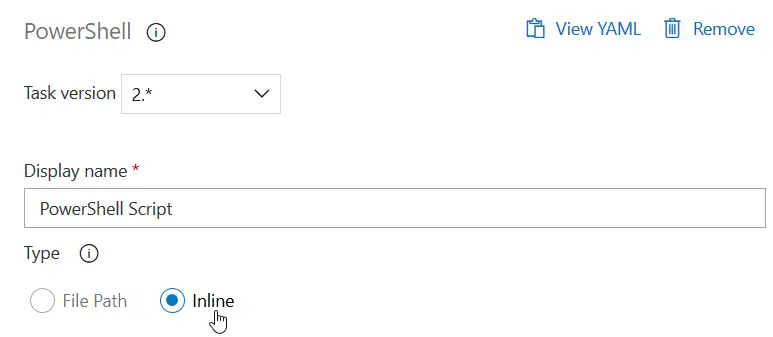

Add a new PowerShell task, select Inline Script and this is the command:

Invoke-D365DbSyncModule -Module "Module1", "Module2" -ShowOriginalProgress -Verbose

Optional: use d365fo.tools to deploy SSRS reports

If you really want to add the report deployment step to your pipeline you can save some more extra time using d365fo.tools and just deploy the reports in your models like we’ve done with the DB sync.

Run this in a new PowerShell task to do it:

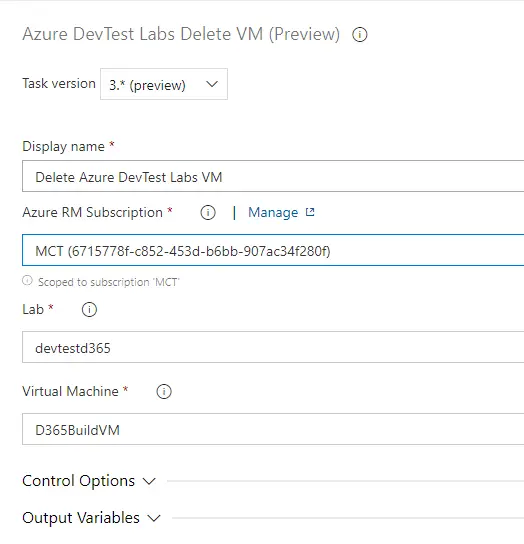

Publish-D365SsrsReport -Module YOUR_MODULE -ReportName *

Delete Azure DevTest Labs VM

It’s almost the same as the create step, complete the subscription, lab and VM fields and done:

And this step, like the create one, will run on the Azure-hosted agent.

Dependencies and conditions

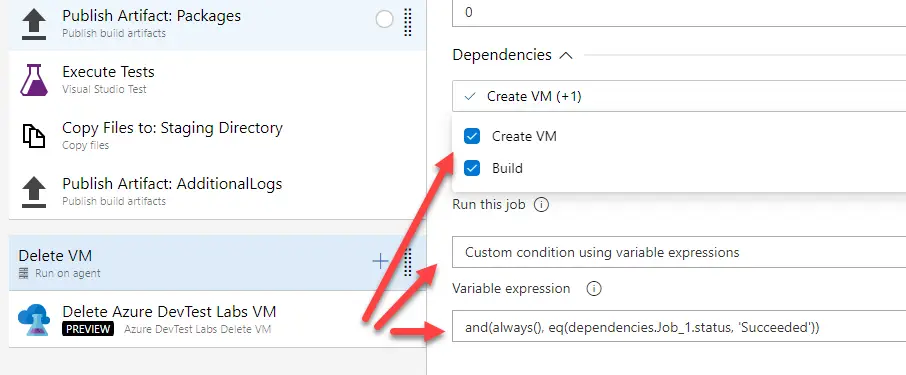

When all three steps are configured we need to add dependencies and conditions to some of them. For example, we want to make sure that the delete VM step runs when the build step fails, but not when the create VM step fails.

Build

The build step depends on the create VM step, and will only run if the previous step succeeds:

Delete VM

The delete step depends on all previous steps and must run when the create VM step succeeds. If the create step fails there’s no VM and we don’t need to delete it:

This is the custom condition we’ll use:

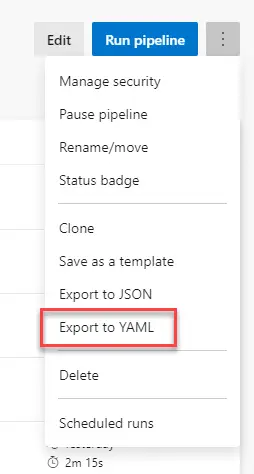

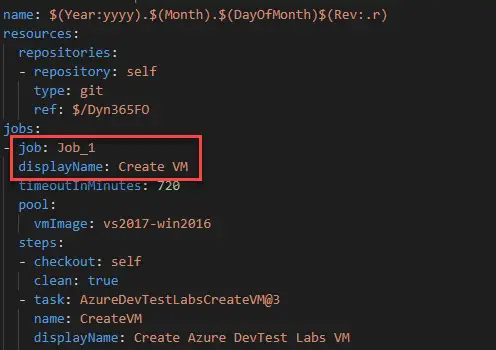

and(always(), eq(dependencies.Job_1.status, 'Succeeded'))

If you need to know your first step’s job name just export the pipeline to YAML and you’ll find it there:

If this step fails when the pipeline runs, wait before deleting the VM manually: first change the VM name in the delete step and save your pipeline, then use the dropdown to show the VMs in the selected subscription, and save the pipeline again.
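The rules in this section are easier to reason about as a truth table. This Python sketch only models the logic described here and is not how Azure DevOps evaluates conditions: it assumes Job_1 is the create VM job, that the build job keeps the default “only when the previous job succeeded” behaviour, and that only the Succeeded and Failed outcomes matter.

```python
def build_should_run(create_status: str) -> bool:
    """Default condition: the build runs only if the create VM job succeeded."""
    return create_status == "Succeeded"


def delete_should_run(create_status: str, build_status: str) -> bool:
    """Model of: and(always(), eq(dependencies.Job_1.status, 'Succeeded'))."""
    always = True  # always() ignores the outcome of the build job (build_status)
    return always and create_status == "Succeeded"


if __name__ == "__main__":
    for create_status in ("Succeeded", "Failed"):
        # If the VM was never created the build job is skipped.
        build_outcomes = ("Succeeded", "Failed") if build_should_run(create_status) else ("Skipped",)
        for build_status in build_outcomes:
            runs = delete_should_run(create_status, build_status)
            print(f"create={create_status:<9} build={build_status:<9} delete runs: {runs}")
```

The point of always() is visible in the second row of the output: the VM gets deleted even when the build fails, so a broken build doesn’t leave a VM running and billing.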

Run the build

And, I think, we’re done and ready to run our Azure DevTest Labs pipeline for Dynamics 365 Finance and Operations… click “Run pipeline” and wait…

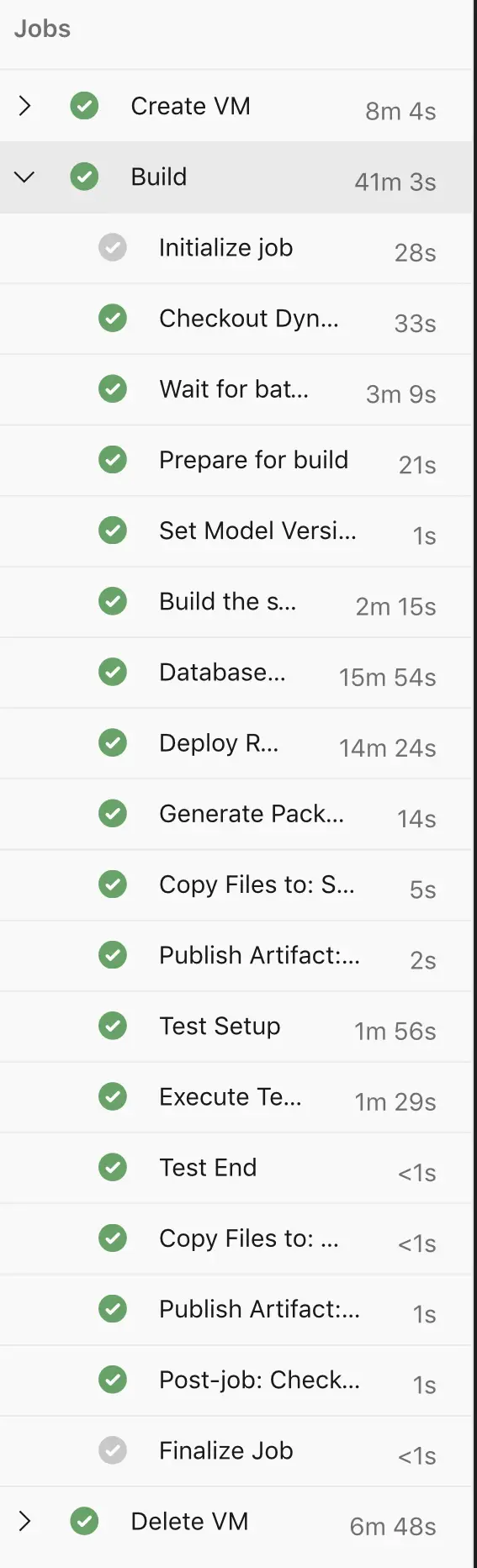

Times

The pipeline from the image above is one with real code from a customer but I can’t compare the times with the Azure-hosted builds because there’s no sync, or tests there. Regarding the build time the Azure-hosted takes one minute less, but it needs to install the nugets first.

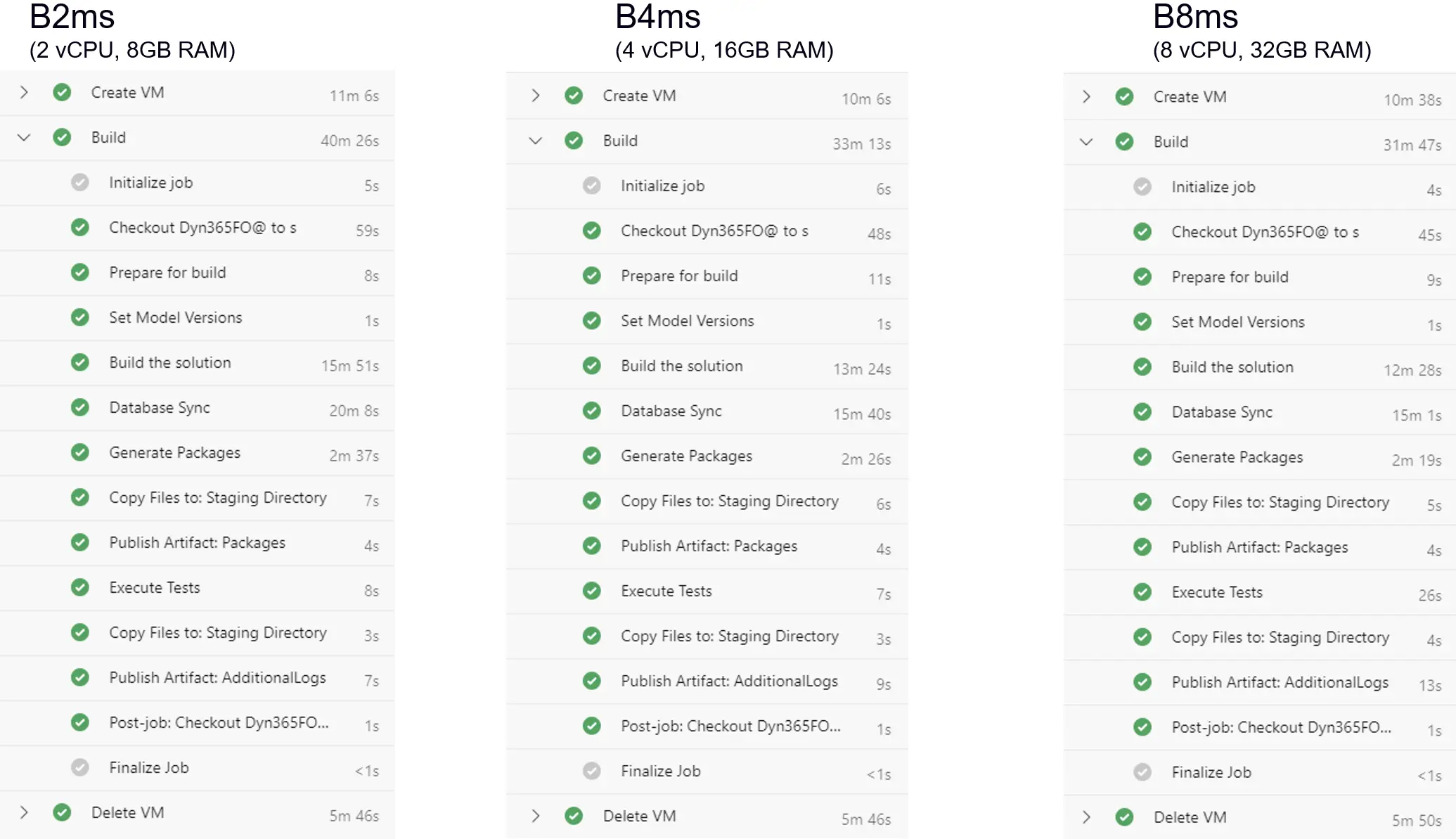

But for example this is a comparison I did:

It takes around 1 hour to create the VM, build, do a full DB sync, deploy reports, run tests, generate a Deployable Package and, finally, delete the VM:

If you skip deploying the SSRS reports your build will run in 15 minutes less, that’s around 45 minutes.

If you use the partial sync process instead of a full DB sync it’ll be 5-7 minutes less.

This would leave us with a 35-40 minutes build.

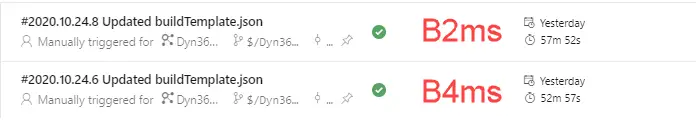

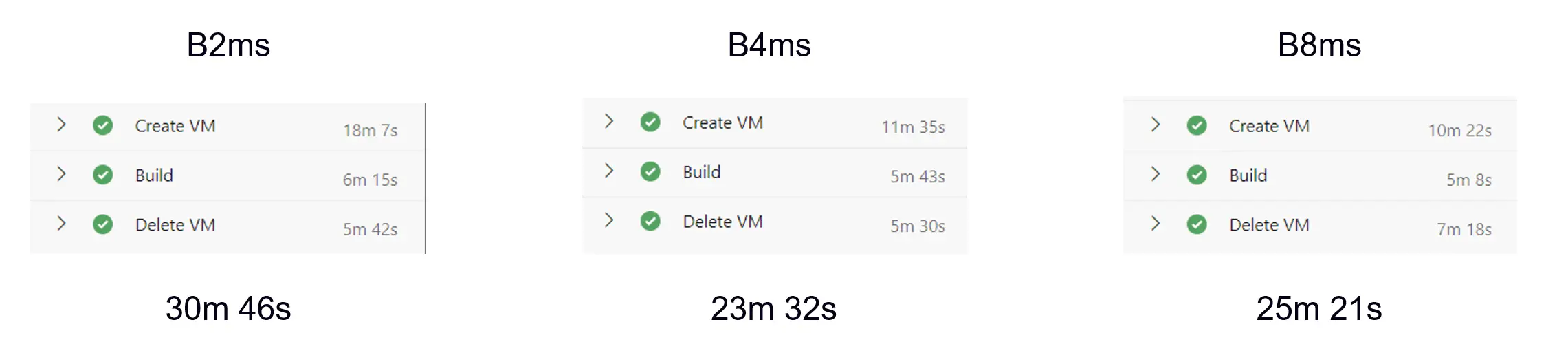

Comparison 1

The image above shows a simple package being compiled, without any table, so the selective sync goes really fast. The build times improve with VM size.

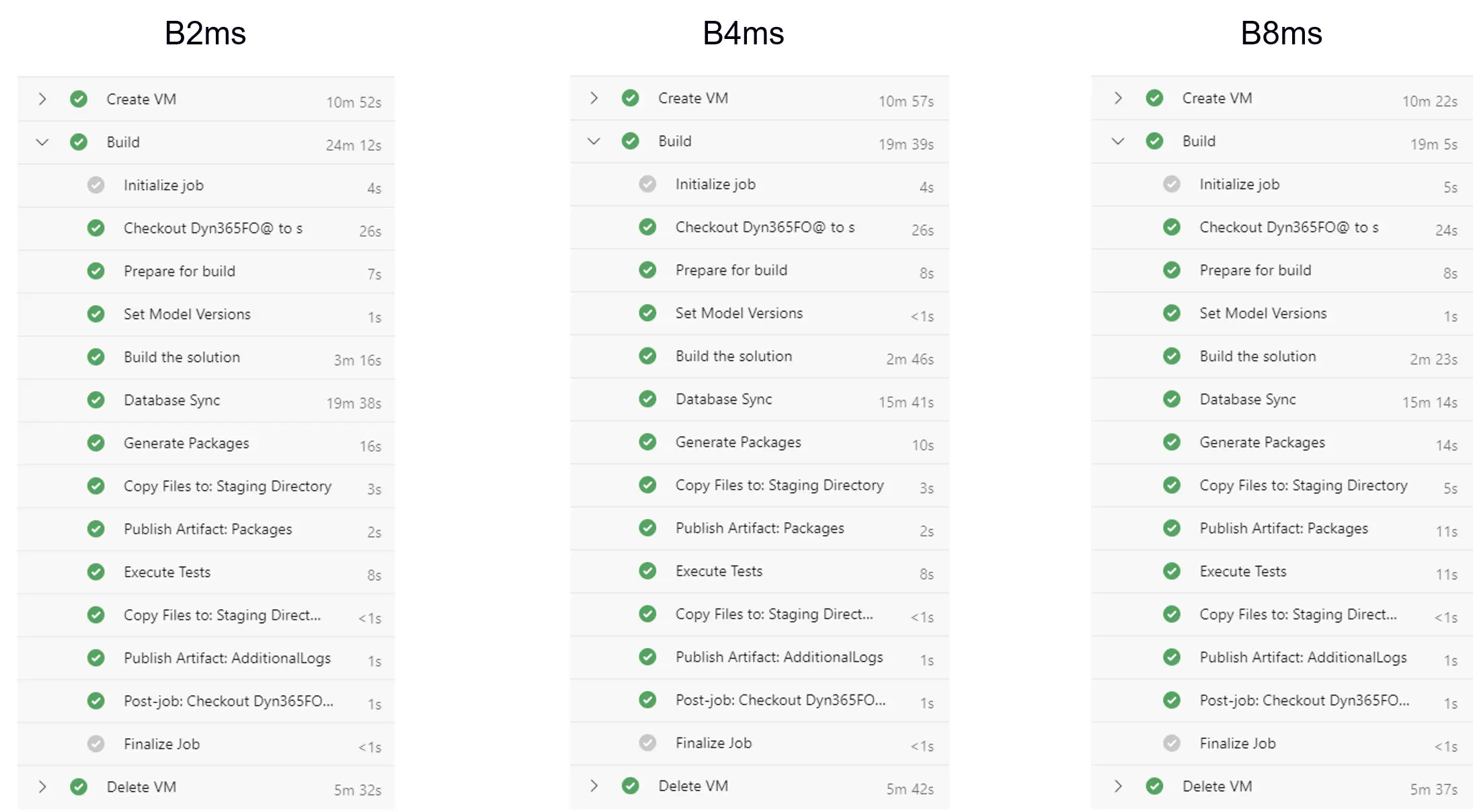

Comparison 2

This one is compiling the same codebase but is doing a full DB sync. The sync time improves in the B4ms VM compared to the B2ms but it’s almost the same in the B8ms. Build times are better for larger VM sizes.

Comparison 3

And in the image above we see a more realistic build. The codebase is larger and we’re doing a full DB sync.

As in the previous comparison, there’s a good improvement between a B2ms and a B4ms, but not between a B4ms and a B8ms.

Show me the money!

I think this is the interesting comparison. How much did a Tier-1 MS-hosted build VM cost? Around 400€? How does it compare to using the Azure DevTest Labs alternative?

There’s only one fixed cost when using Azure DevTest Labs: the blob storage where the VHD is uploaded. The VHD’s size is around 130GB and this should have a cost of, more or less, 5 euros/month. Keep in mind that you need to clean up your custom images once yours is prepared, because the new ones are created as snapshots and also take up space in the storage account.

Then we have the variable costs that come with the deployment of a VM for each build, but they’re absurdly low. Imagine we’re using a B4ms VM with a 256GB Premium SSD disk: we would pay 0.18€/hour for the VM plus the proportional part of the 35.26€/month of the SSD disk, which would be like 5 cents/hour?

But this can run on a B2ms VM too which is half the compute price of the VM, down to 9 cents per hour.

If we run this build once a day each month, 30 times, the cost of a B4ms would be like… 7€? Add the blob storage and we’ll be paying 12€ per month to run our builds with DB sync and tests.

Is it cheaper than deploying a cloud-hosted environment, and starting and stopping it using the new d365fo.tools Cmdlets each time we run the build? Yes it is! Because if we deploy a CHE we’ll be paying the price of the SSD disk for the whole month!
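To make the arithmetic above reproducible, here’s a small Python sketch using the prices quoted in this section. The assumptions are mine: a month has 730 hours for prorating the disk, every build keeps the VM alive for one hour, and the blob storage is a flat 5€/month. Real Azure prices change, so treat the numbers as an example only.

```python
HOURS_PER_MONTH = 730  # assumption: average month length used to prorate the disk


def monthly_build_cost(vm_eur_per_hour: float,
                       disk_eur_per_month: float,
                       builds_per_month: int,
                       hours_per_build: float = 1.0,
                       blob_eur_per_month: float = 5.0) -> float:
    """Estimated monthly cost in euros of running builds on short-lived VMs."""
    disk_eur_per_hour = disk_eur_per_month / HOURS_PER_MONTH
    vm_hours = builds_per_month * hours_per_build
    variable_cost = (vm_eur_per_hour + disk_eur_per_hour) * vm_hours
    return variable_cost + blob_eur_per_month


if __name__ == "__main__":
    b4ms = monthly_build_cost(vm_eur_per_hour=0.18, disk_eur_per_month=35.26, builds_per_month=30)
    b2ms = monthly_build_cost(vm_eur_per_hour=0.09, disk_eur_per_month=35.26, builds_per_month=30)
    print(f"B4ms: {b4ms:.2f} EUR/month, B2ms: {b2ms:.2f} EUR/month")
```

With these inputs the B4ms lands at roughly 12€ per month, in line with the estimate above, which is still less than the 35.26€ the SSD disk alone costs when it exists for the whole month.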

Some final remarks

- I have accomplished this mostly through trial and error. There are lots of enhancements and best practices to be applied to the whole process, especially using an Azure Key Vault to store all the secrets to be used in the Azure DevOps Agent artifact and the pipeline.

- This is another clear example that X++ developers need to step outside of X++ and Dynamics 365 FnO. We’re not X++-only developers anymore, we’re very lucky to be working on a product that is using Azure.

- I’m sure there are scenarios where using DevTest Labs to create a build VM is useful. Maybe not for an implementation partner, but maybe it is for an ISV partner. It’s just an additional option.

- The only bad thing to me is that we need to apply the version upgrades manually to the VHDs because they’re published only twice a year.

- As I said at the beginning of the post, it may have worked for me with all these steps, but if you try it you may need to change some things. But it’s a good way to start.

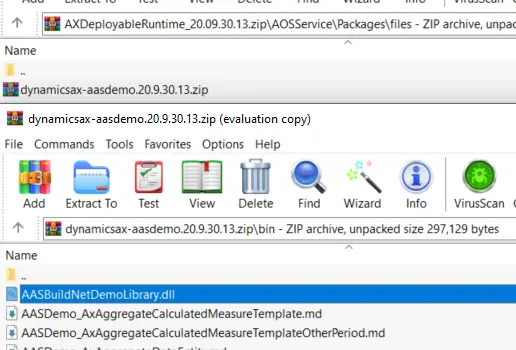

Add and build .NET projects

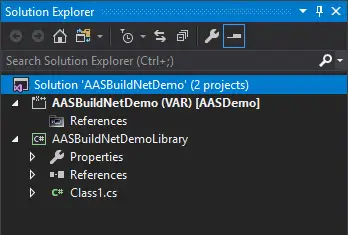

I bet that most of us have had to develop some .NET class library to solve something in Dynamics 365 Finance and Operations. You create a C# project, build it, and add the DLL as a reference in your FnO project. Don’t do that anymore! You can add the .NET project to source control, build it in your pipeline, and the DLL gets added to the deployable package!

I’ve been trying this during the last days after a conversation on Yammer, and while I’ve managed to build .NET and X++ code in the same pipeline, I’ve found some issues or limitations.

Build .NET in your pipeline

Note: what I show in this post is done using the Azure-hosted pipeline but it should also be possible to do it using a self-hosted agent (aka old build VM).

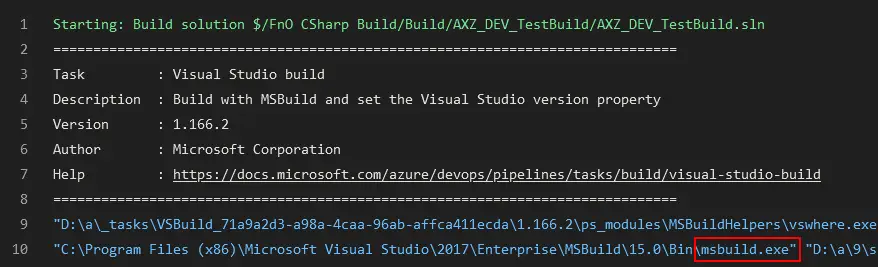

The build step of the pipeline invokes msbuild.exe which can build .NET code. If we check the logs of the build step we will see it:

Remember that X++ is part of the .NET family after all… a second cousin or something like it.

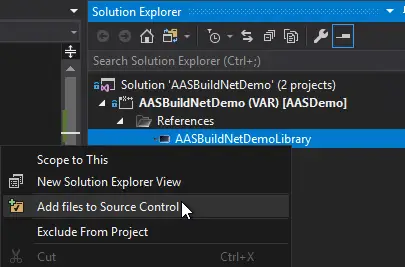

If you’ve read the blog post about Azure-hosted builds you must’ve seen I’m putting the solution that references all my models in a folder called Build at the root of my source control tree (left image).

That’s just a personal preference that helps me keep the .config files and the solution I use to build all the models in a single, separate place.

By using a solution and pointing the build process to use it I also keep control of what’s being built in a single place.

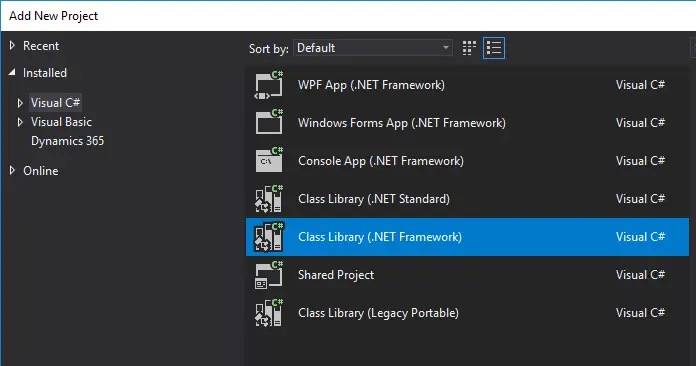

Add a C# project to FnO

Our first step will usually be creating a Finance and Operations project. Once it’s created we right-click on the solution and select “Add new project”. Then we select a Visual C# Class Library project:

Now we should have a solution with a FnO Project and a C# project (right image).

To demo this I’ll create a class called Calculator with a single method that accepts two decimal values as parameters and returns their sum. An Add method.

public class Calculator

{

public decimal Add(decimal a, decimal b)

{

return a + b;

}

}

Now compile the C# project alone, not the whole solution. This will create the DLL in the bin folder of the project. We have to do this before adding the C# project as a reference to the FnO project.

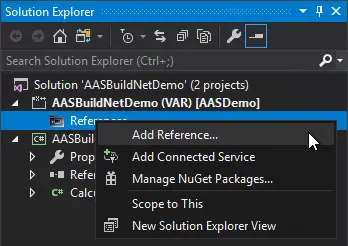

Right click on the References node of the FnO project and select “Add Reference…”:

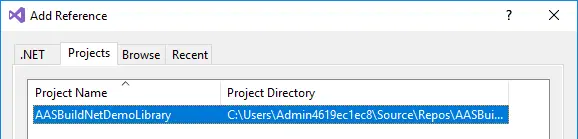

A window will open and you should see the C# project in the “Projects” tab:

Select it and click the OK button. That will add the C# project as a reference to our FnO project, but we still need to do something or this won’t compile in our pipeline: the reference that has been created in the AOT has to be added to source control manually. So, right-click on the reference and select “Add to source control”:

In the FnO project add a Runnable Class, we’ll call the C# library there:

using AASBuildNetDemoLibrary;

class AASBuildNetTest

{

public static void main(Args _args)

{

var calc = new Calculator();

calc.Add(4, 5);

}

}

Add the solution to source control if you haven’t, make sure all the objects are also added and check it in.

Build pipeline

If we go to the Azure DevOps repo we’ll see the following:

You can see I’ve checked-in the solution under the Build folder, as I said earlier this is my personal preference and I do that to keep the solutions I’ll use to build the code under control.

In my build pipeline I make sure I’m using this solution to build the code:

Run the pipeline and when it’s done you can check the build step and you’ll see a line that reads:

Copying file from "D:\a\9\s\Build\AASBuildNetDemo\AASBuildNetDemoLibrary\bin\Debug\AASBuildNetDemoLibrary.dll" to "D:\a\9\b\AASDemo\bin\AASBuildNetDemoLibrary.dll".

And if you download the DP, unzip it, navigate to AOSService\Packages\files and unzip the file in there, then open the bin folder, you’ll see our library’s DLL there:
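The manual check above can also be scripted. This is a minimal Python sketch under my own assumptions: the deployable package is a ZIP whose AOSService/Packages/files folder contains one ZIP per model, and the DLL sits in that inner ZIP’s bin folder. The function name is made up, and inner ZIPs are read into memory, which is fine for a quick check but not for huge packages.

```python
import io
import zipfile


def find_dll_in_package(package, dll_name: str) -> list:
    """Return 'model zip -> entry' strings for every bin/<dll_name> found.

    `package` is a path or a binary file-like object for the deployable package.
    """
    matches = []
    with zipfile.ZipFile(package) as outer:
        for entry in outer.namelist():
            normalized = entry.replace("\\", "/").lower()
            if not (normalized.startswith("aosservice/packages/files/")
                    and normalized.endswith(".zip")):
                continue
            # Open the nested model zip without extracting it to disk.
            with zipfile.ZipFile(io.BytesIO(outer.read(entry))) as model_zip:
                for inner in model_zip.namelist():
                    if inner.replace("\\", "/").lower() == f"bin/{dll_name.lower()}":
                        matches.append(f"{entry} -> {inner}")
    return matches
```

An empty list for find_dll_in_package("YourPackage.zip", "AASBuildNetDemoLibrary.dll") would mean the library didn’t make it into the package; the package file name here is a placeholder.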

Things I don’t like/understand/need to investigate

I’ve always done this with a single solution and only one C# project. I have some doubts about how this will work with many C# projects, models, solutions, etc.

For example, if a model has a dependency on the DLL but it’s built before the DLL the build will fail. I’m sure there’s a way to set an order to solve dependencies like there is for FnO projects within a solution.

Or maybe I could try building all the C#/.NET projects before, pack them in a nuget and use the DLLs later in the FnO build, something similar to what Paul Heisterkamp explained in his blog.
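The ordering problem itself is a plain dependency sort. The Python sketch below only illustrates the idea with the standard library’s graphlib (Python 3.9+); it says nothing about how msbuild or the FnO build task actually order projects, and the dependency map is written by hand for the demo.

```python
from graphlib import CycleError, TopologicalSorter  # Python 3.9+


def build_order(dependencies: dict) -> list:
    """Return a build order in which every project comes after what it references.

    `dependencies` maps each project name to the set of projects it depends on.
    """
    try:
        return list(TopologicalSorter(dependencies).static_order())
    except CycleError as error:
        raise ValueError(f"circular reference between projects: {error.args[1]}") from error


if __name__ == "__main__":
    # The FnO model from this post references the C# library, so the library goes first.
    print(build_order({"AASDemo": {"AASBuildNetDemoLibrary"}}))
```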

Anyway, it’s your choice how to manage your C# projects and what solution fits your workflow the best, but at least you’ve got an example here 🙂

Setup Release Pipelines

We’ve seen how the default build definition is created and how we can modify it. Now we’ll see how to configure our release pipelines!

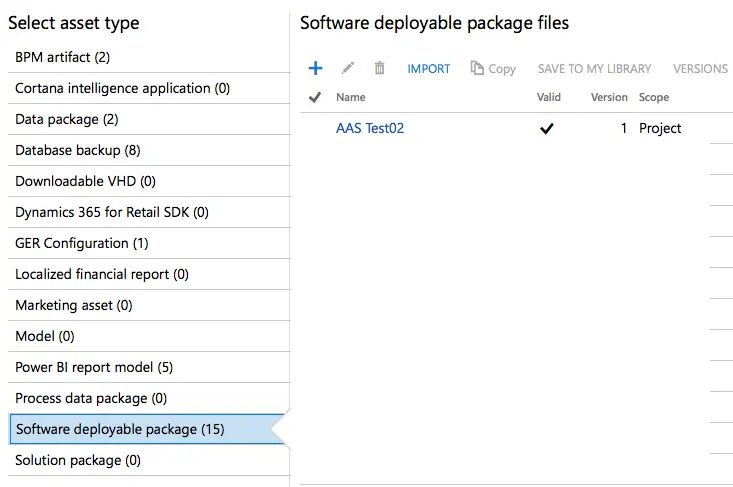

The release pipelines allow us to automatically deploy our Deployable Packages to a Tier 2+ environment. This is part of the Continuous Delivery (CD) strategy. We can only do this for the UAT environments, it’s not possible to automate the deployment to the production environment.

Setting up Release Pipeline in Azure DevOps for Dynamics 365 for Finance and Operations

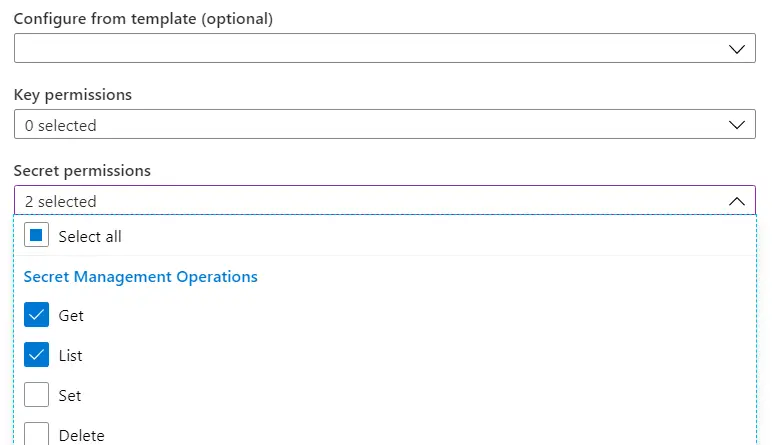

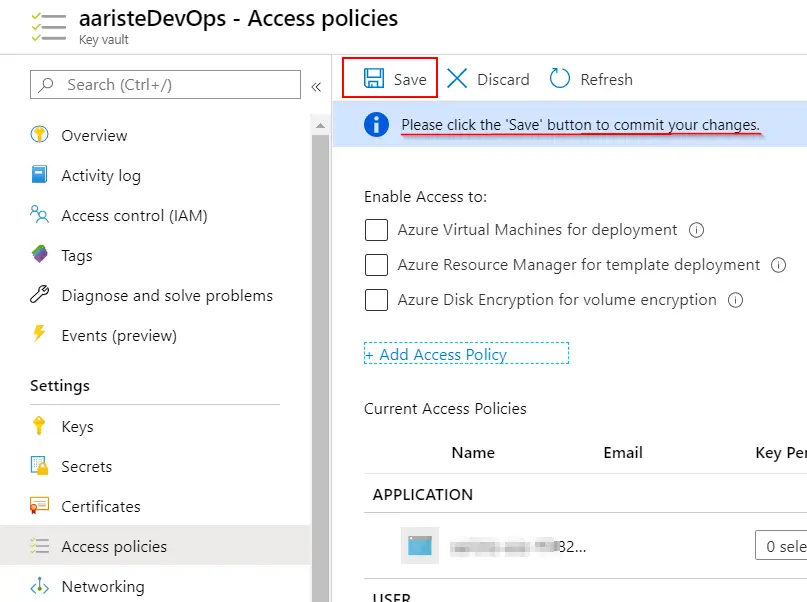

To configure the release pipeline, we need:

- AAD app registration

- LCS project

- An Azure DevOps project linked to the LCS project above

- A service account

I recommend a service account to do this, with a non-expiring password and no MFA enabled. It must have enough privileges on LCS, Azure and Azure DevOps too. This is not mandatory and can be done even with your user (if it has enough rights) for testing purposes, but if you’re setting this up don’t use your user and go for a service account.

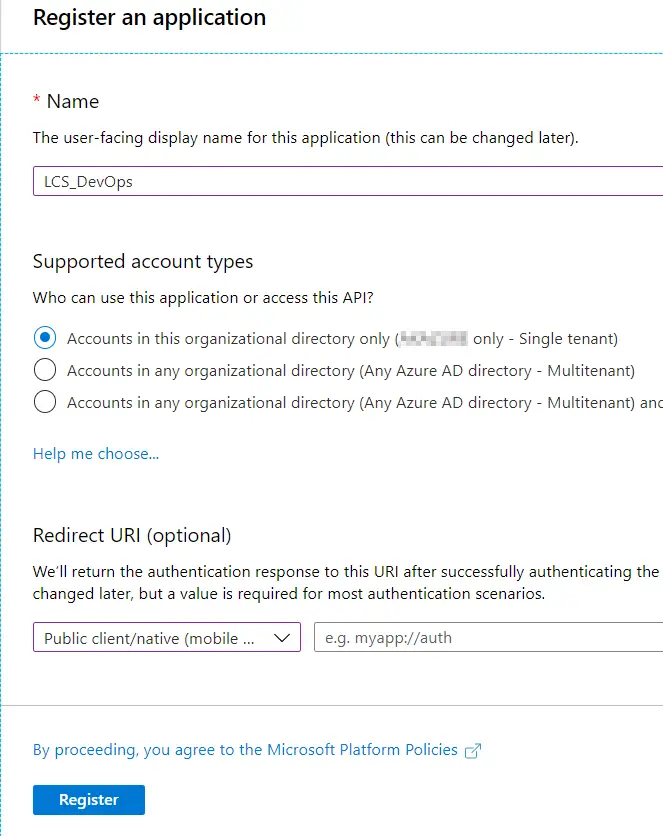

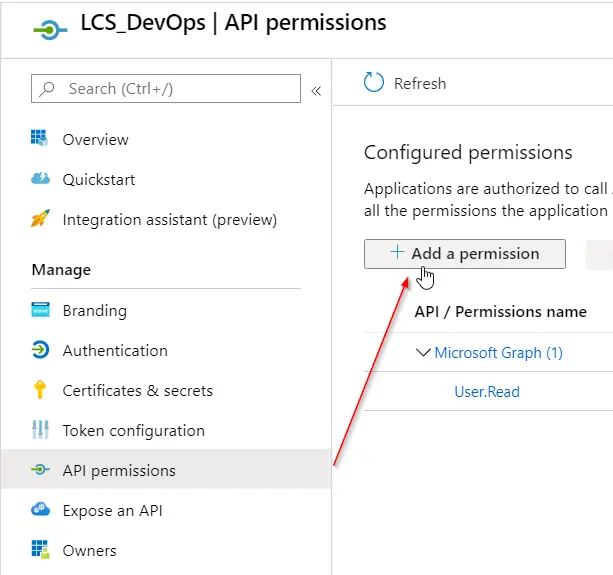

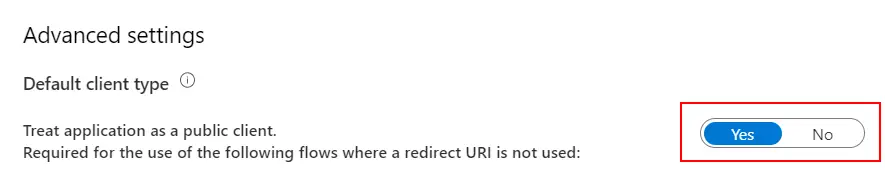

AAD app creation

The first step to take is creating an app registration on Azure Active Directory to upload the generated deployable package to LCS. Head to the Azure portal and once logged in go to Azure Active Directory, then App Registrations and create a new Native app:

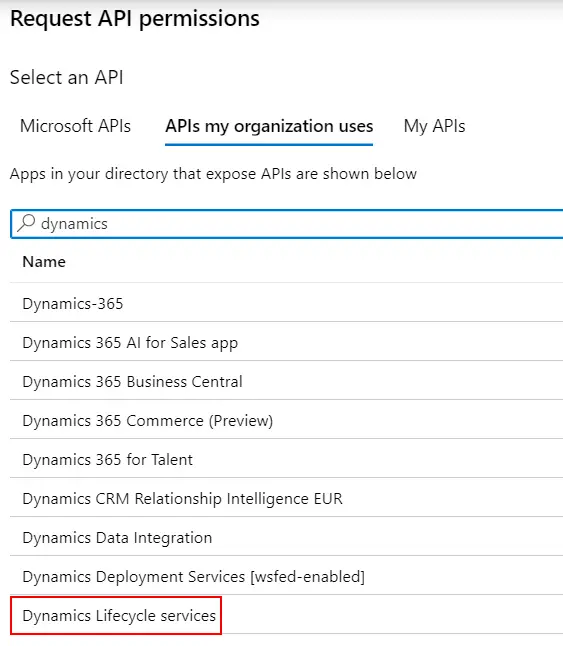

Next go to “Settings” and “Required permissions” to add the Dynamics Lifecycle Services API:

In the dialog that will open change to the “APIs my organization uses” tab and select “Dynamics Lifecycle Services”:

Select the only available permission in the next screen and click on the “Add permissions” button. Finally press the “Grant admin consent” button to apply the changes. This last step is easily forgotten, and the package upload to LCS will fail if consent hasn’t been granted. Once done take note of the Application ID, we’ll use it later.

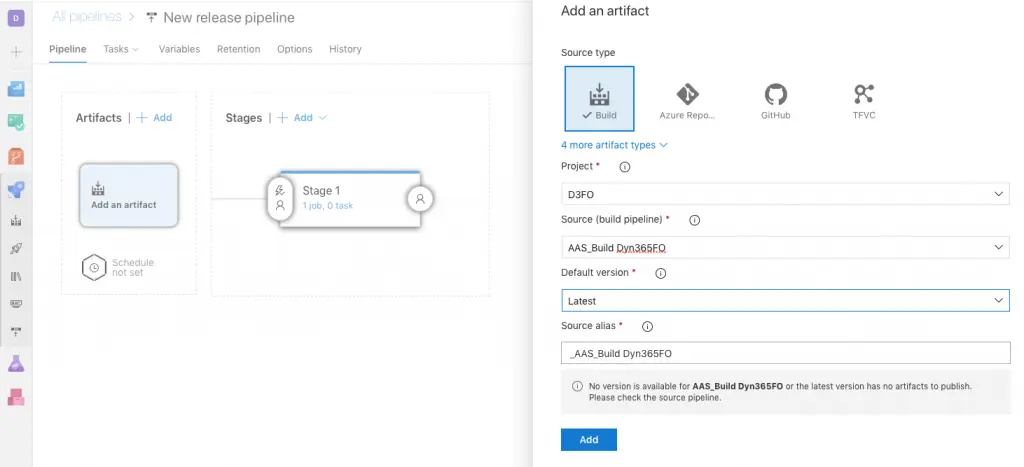

Create the release pipeline in DevOps

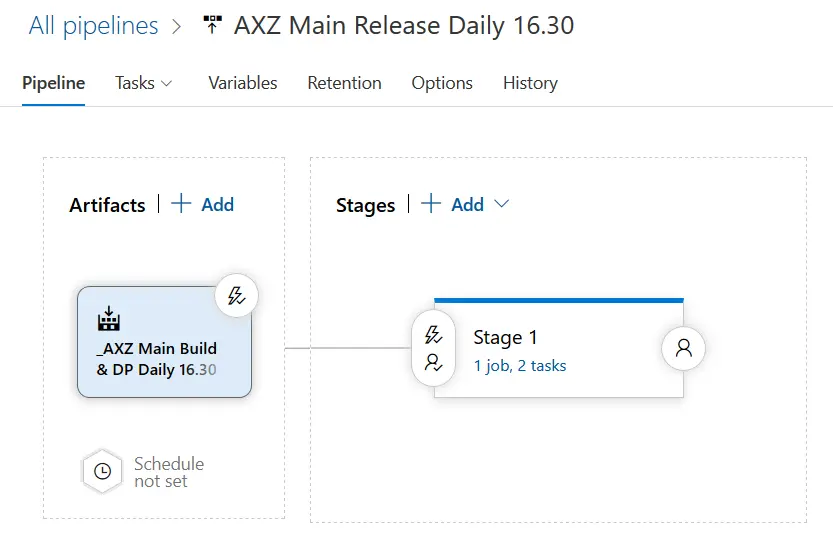

Go to Azure DevOps, and to Pipelines -> Releases to create the new release. Select “New release pipeline” and choose “Empty job” from the list.

On the artifact box select the build which will be used for this release definition:

Pick the build definition you want to use for the release in “Source”, select “Latest” in “Default version” and press “Add”.

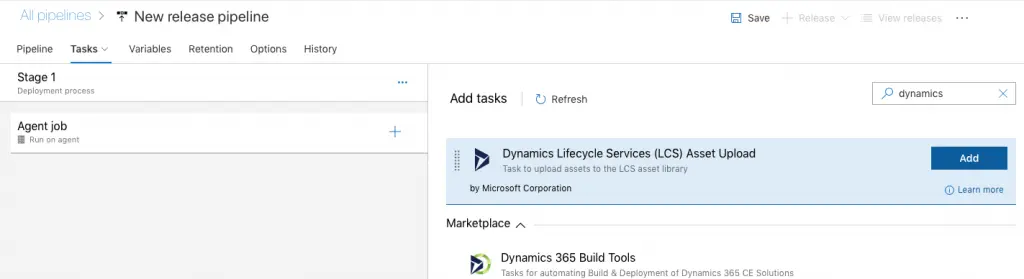

Upload to LCS

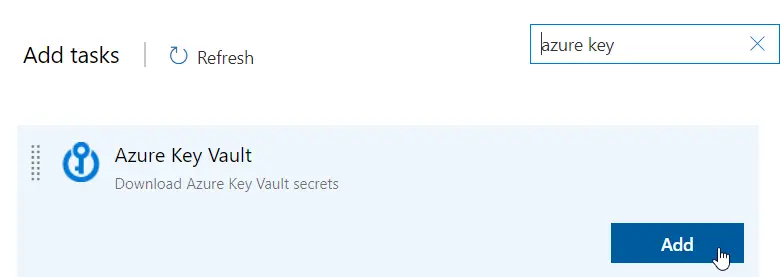

The next step is adding a task to the release pipeline for Dynamics. Go to the Tasks tab and press the plus button. A list of extensions will appear; look for “Dynamics 365 Unified Operations Tools”:

If the extension hasn’t been added previously it can be done in this screen. In order to add it, the user used to create the release must have admin rights on the Azure DevOps account, not only in the project in which we’re creating the pipeline.

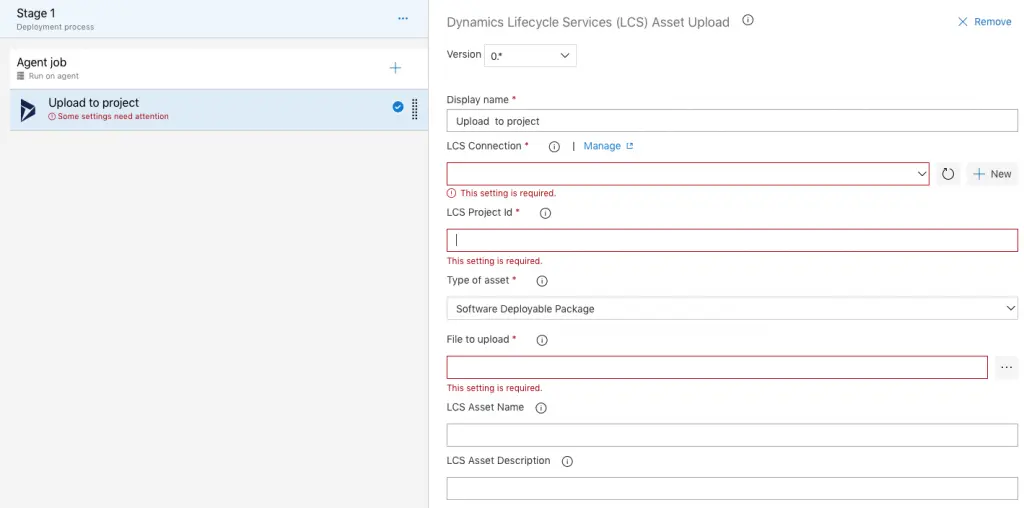

When the task is created, we need to fill in some parameters:

Apply deployable package

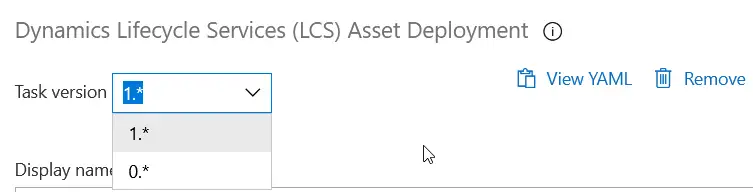

This step is finally available for self-service environments! If you already set this for a regular environment you can still change the task to the new version.

The new task version 1 works for both types of environments: Microsoft-managed (regular environments) and self-service environments. Task version 0 is the old one and will only work with regular environments. You can safely switch your deploy tasks to version 1.

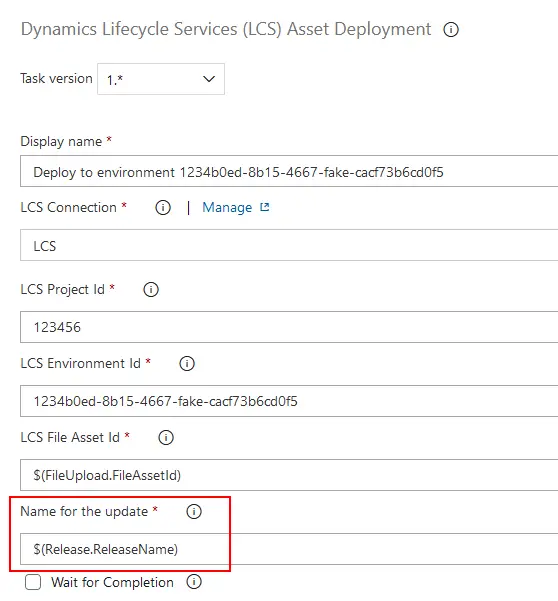

What’s different in task version 1? I guess there’s some work behind the scenes that we don’t see to make it support self-service, but in the UI we only see a new field called “Name for the update”.

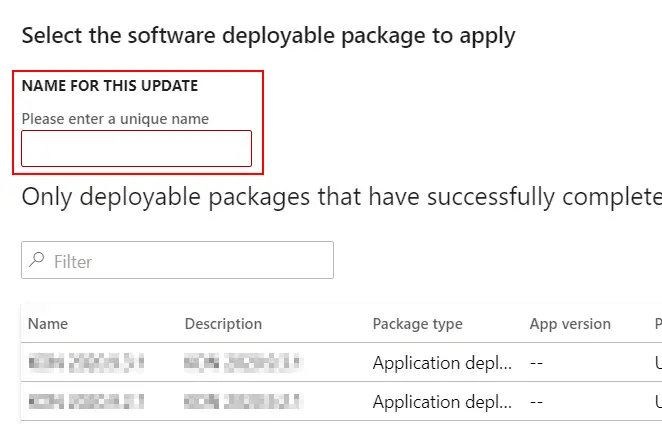

This field is only needed for self-service environment deployments (it will be ignored for regular ones) and corresponds to the field with the same name that appears on LCS when we apply an update to a sandbox environment:

The field’s default value is the variable $(Release.ReleaseName), which is the name of the release, but you can change it. For example, I’ll be using a pattern like PREFIX BRANCH $(Build.BuildNumber) to get the same name we have for the builds and to identify more quickly what we’re deploying to prod.

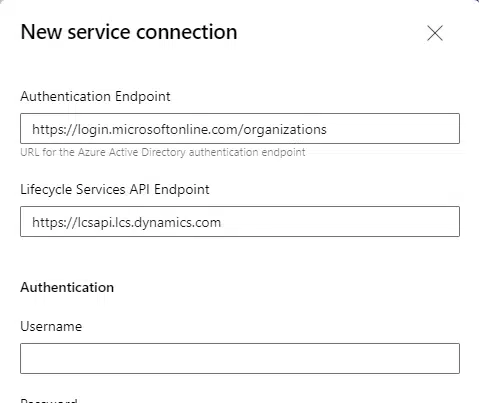

Creating the LCS connection

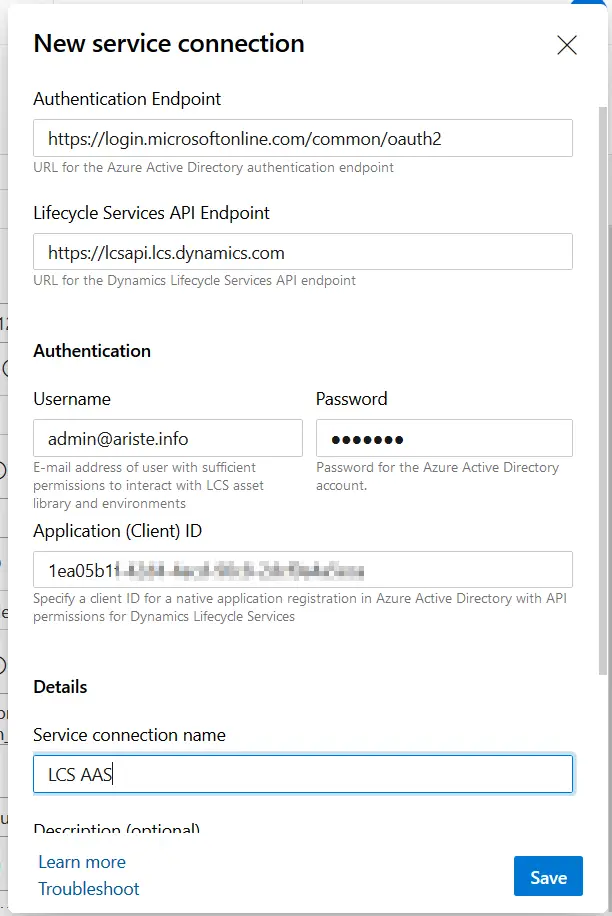

The first step in the task is setting up the link to LCS using the AAD app we created before. Press New and let’s fill the fields in the following screen:

It’s only necessary to fill in the connection name, username, password (of that user) and Application (Client) ID fields. Use the App ID we got in the first step for the Application (Client) ID field. The endpoint fields should be filled in automatically. Finally, press OK and the LCS connection is ready.

In the LCS Project Id field, use the ID from the LCS project URL. For example, in https://lcs.dynamics.com/V2/ProjectOverview/1234567 the project ID is 1234567.
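If you keep the project URL in some setup script or notes, the ID can be pulled out programmatically. The following is a minimal Python sketch, not part of the release task itself; the function name lcs_project_id is made up for illustration, and it assumes the URL follows the ProjectOverview pattern shown above.

```python
import re


def lcs_project_id(project_url):
    """Return the numeric ID that follows /ProjectOverview/ in an LCS project URL."""
    # Assumption: the URL looks like the example in the text.
    match = re.search(r"/ProjectOverview/(\d+)", project_url)
    if match is None:
        raise ValueError("No LCS project ID found in: " + repr(project_url))
    return match.group(1)


print(lcs_project_id("https://lcs.dynamics.com/V2/ProjectOverview/1234567"))
```

The value it returns is what goes into the LCS Project Id field of the task.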

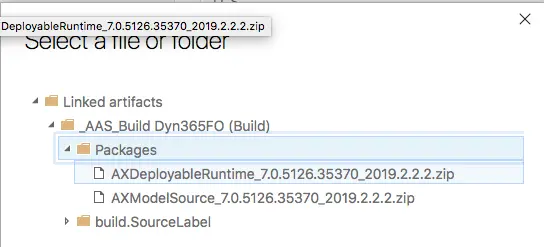

Press the button next to “File to upload” and select the deployable package file generated by the build:

If the build definition hasn’t been modified, the output DP will have a name like AXDeployableRuntime_VERSION_BUILDNUMBER.zip. Replace the fixed build number with the DevOps variable $(Build.BuildNumber), as in the image below:

The package name and description in LCS are defined in “LCS Asset Name” and “LCS Asset Description”. Azure DevOps’ build and release variables can be used in these fields. Use whatever fits your project. For example, a prefix to distinguish between prod and pre-prod packages followed by $(Build.BuildNumber) will upload the DP to LCS with a name like Prod 2019.1.29.1, which puts the build date in the DP name.
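To make the variable substitution more concrete, here is a small Python sketch that imitates how a $(Name) macro in a task field is replaced by its value at run time. It is only an approximation for illustration: expand_macros is a hypothetical helper, the sample values are made up, and the real expansion is done by Azure DevOps itself.

```python
import re

# Matches $(Some.Variable) and captures the variable name.
_MACRO = re.compile(r"\$\(([^)]+)\)")


def expand_macros(template, variables):
    """Replace each $(Name) in template with its value; unknown names are left as-is."""
    # Look names up case-insensitively, as pipeline variable names are not case-sensitive.
    lookup = {name.lower(): value for name, value in variables.items()}

    def replace(match):
        return lookup.get(match.group(1).lower(), match.group(0))

    return _MACRO.sub(replace, template)


variables = {"Build.BuildNumber": "2019.1.29.1"}
print(expand_macros("Prod $(Build.BuildNumber)", variables))
print(expand_macros("AXDeployableRuntime_VERSION_$(Build.BuildNumber).zip", variables))
```

The first call produces the asset name from the example above, and the second shows the same substitution applied to the file name, with VERSION left as a placeholder.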

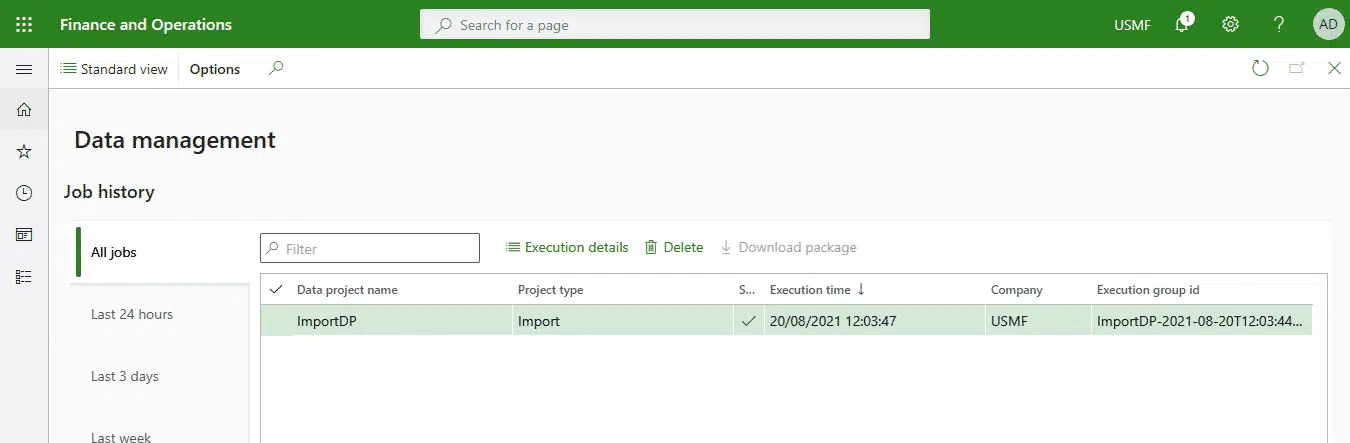

Save the task and the release definition, and let’s test it. In Releases, select the one we’ve just created and press the “Create a release” button; in the dialog, just press OK. The release will start and, if everything is OK, we’ll see the DP in LCS when it finishes:

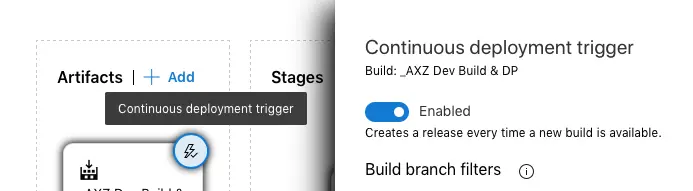

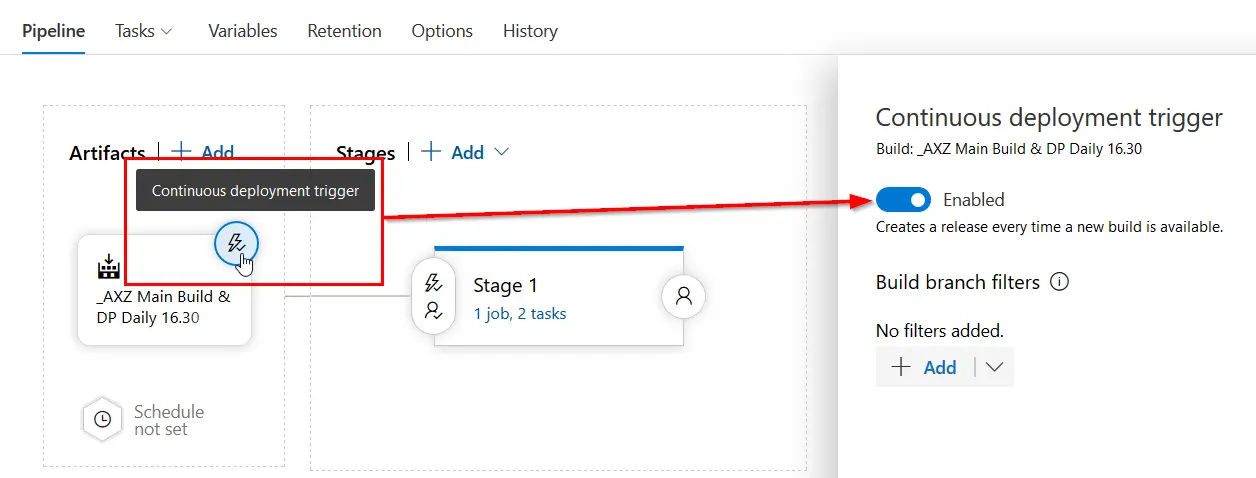

The release part can be automated too. Just press the lightning button on the artifact and enable the trigger:

And that’s all! Now the build and the releases are both configured. Once the deployment package is published the CI scenario will be complete.

New Azure DevOps release tasks: MSAL authentication and ADAL deprecation

There’s a new version and a new task for our release pipelines that use the Azure-hosted agents. These changes have been introduced recently to support the new MSAL authentication libraries for the LCS service connection used to upload and deploy the deployable packages.

The current service connections use Azure Active Directory (Azure AD) Authentication Library (ADAL), and support for ADAL will end in June 2022.

This means that if we don’t update the Asset Upload and Asset Deployment to their new versions (1.* and 2.* respectively) the release pipelines could stop working after 30th June 2022.

I’d like to thank Joris de Gruyter for the tip, otherwise I couldn’t have written this post 😛

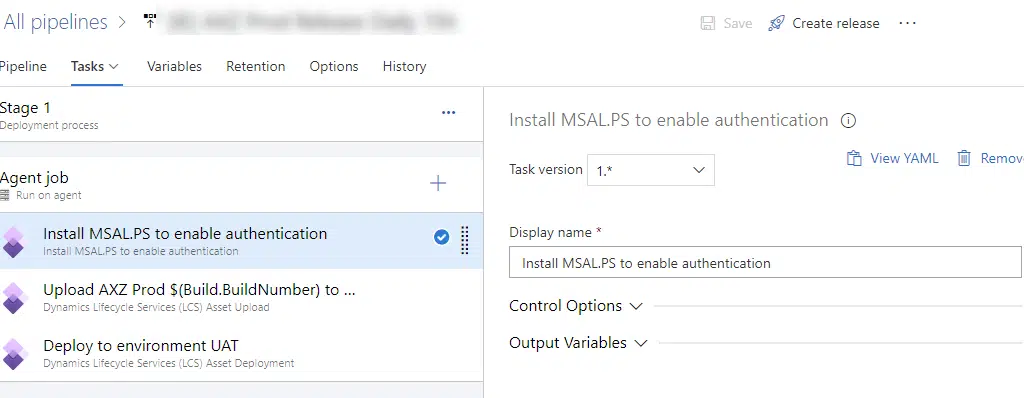

New MSAL task

There’s also a new task to add the support for MSAL authentication. This task will install the MSAL PowerShell libraries in your Microsoft-hosted agent, and you need to add it before any other task authenticates. Like this:

The task has no parameters or options that need to be filled, just add it to your release pipeline, and you’re done.

If you’ve got a multi-stage release pipeline, you have to add this new task to each stage where there’s an authentication step. For example, if you have a first stage that uploads the DP to LCS, and then another one that deploys it and doesn’t have the task, it will fail. This is at least true in projects with additional agents; I still need to try it in a single-agent project.

New Asset Upload and Deploy versions

To support the new MSAL authentication, the dev tools team at Microsoft have published new versions of both tasks.

Asset Upload

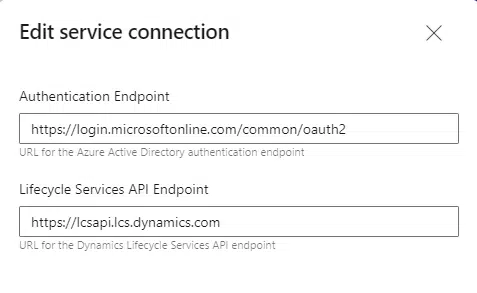

If you change the version of the Asset Upload task from 0.* to 1.* you’ll see no changes. The fields in the task are the same, but it will use MSAL as the new authentication method.

But wait, just changing the version won’t be enough. You need to create a new service connection to LCS because the authentication endpoint has changed to https://login.microsoftonline.com/organizations. From now on, this endpoint will be the one used by all task versions, old and new.

Here you can see the old service connection endpoint:

And the new one:

Asset Deployment

In the Asset Deployment task we now see three versions: 0.* which was the original one, 1.* which is the one that enabled support for self-service environments, and 2.* which is the new task that supports MSAL authentication.

If you’ve already created the service connection in the previous step, just change it to use the new one.

And what about self-hosted agents (build VM)?

I’m not sure, but just installing the MSAL.PS PowerShell library on your build VM will probably be enough, if it’s not there already.

More automation!

I’ve already explained how to automate the builds, create the CI builds and create the release pipelines on Azure DevOps. What I want to talk about in this post is adding a little bit more automation.

Builds

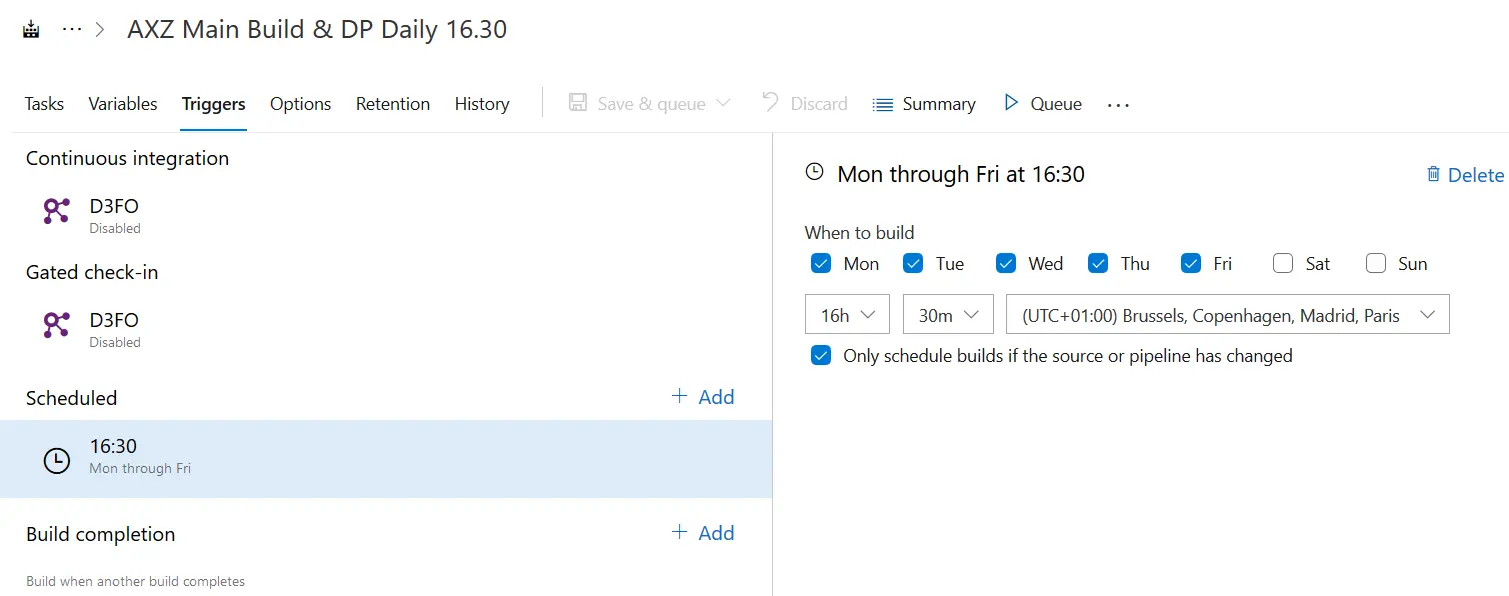

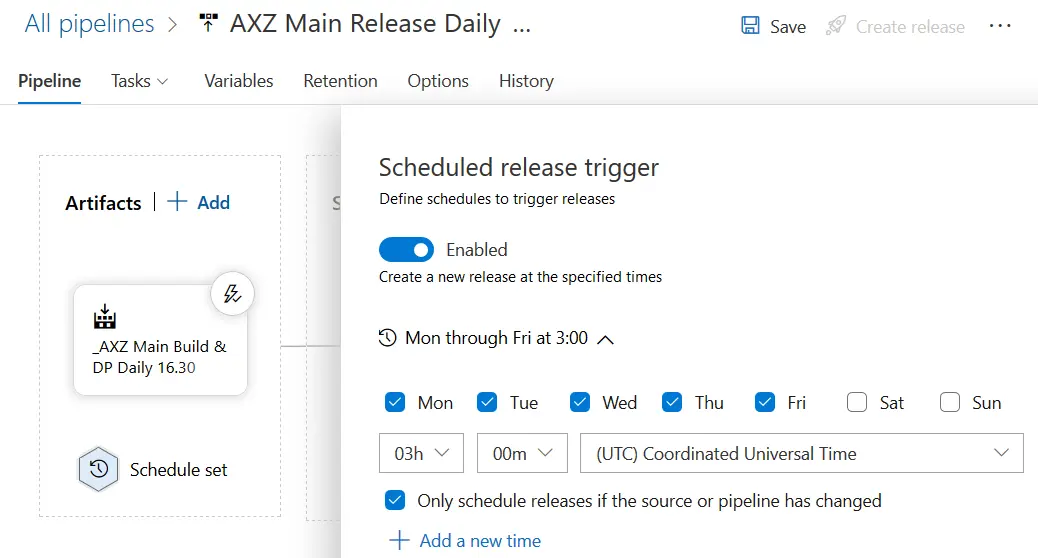

In the build definition go to the “Triggers” tab and enable a scheduled build:

This will automatically trigger the build at the time and on the days you select. In the example image, a new build will be launched every weekday at 16:30. But every day? Nope! The “Only schedule builds if the source or pipeline has changed” checkbox below the time selector makes the build trigger only if there’s been a change to the codebase, meaning that if no changeset is checked in during that day, no build will be triggered.
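The decision behind that checkbox can be sketched in a few lines of Python. This is a simplified model for illustration only, not how Azure DevOps implements it: the function name and parameters are hypothetical, and “has changes” stands for any changeset checked in since the previous scheduled build.

```python
from datetime import datetime

# Monday is 0 and Sunday is 6, as returned by datetime.weekday().
WEEKDAYS = {0, 1, 2, 3, 4}


def should_queue_scheduled_build(now, has_changes, only_if_changed=True,
                                 days=WEEKDAYS, at=(16, 30)):
    """Return True when a scheduled build would be queued at the given moment."""
    on_schedule = now.weekday() in days and (now.hour, now.minute) == at
    if not on_schedule:
        return False
    # With the checkbox ticked, a quiet day produces no build.
    return has_changes or not only_if_changed


tuesday = datetime(2019, 1, 29, 16, 30)
print(should_queue_scheduled_build(tuesday, has_changes=True))
print(should_queue_scheduled_build(tuesday, has_changes=False))
```

With the checkbox enabled, the second call reports that no build is queued because nothing was checked in that day.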

Releases

First step done, let’s see what we can do with the releases:

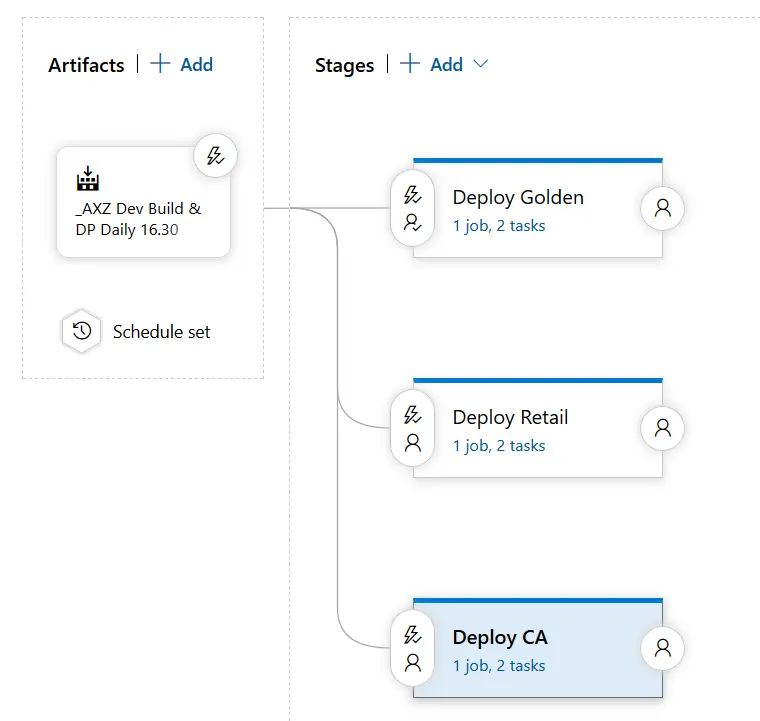

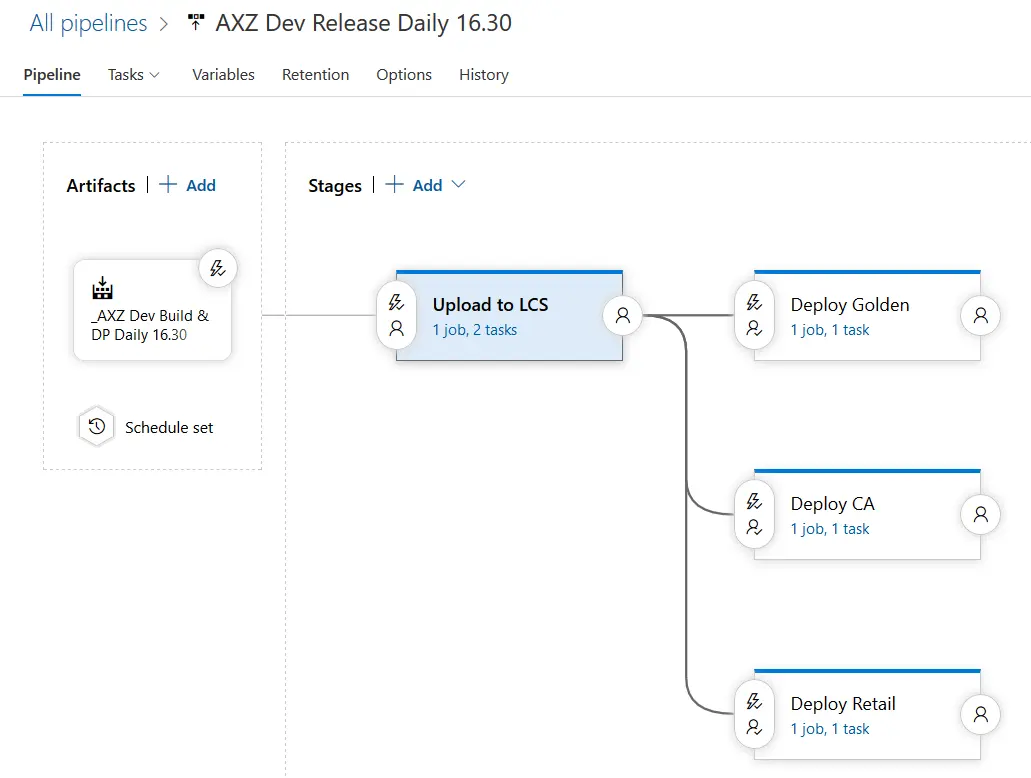

The release pipeline in the image above is the one that launches after the build I’ve created in the first step. For this pipeline I’ve added the following:

The continuous deployment trigger has been enabled, meaning that after the build finishes this release will be automatically run. No need to define a schedule but you could also do that.

As you can see, the schedule screen is exactly the same as in the builds, even the changed pipeline checkbox is there. You can use any of these two approaches, CD or scheduled release, it’s up to your project or team needs.

With these two small steps you can have your full CI and CD strategy automated and update a UAT environment each night, so all the changes made during that day are ready for testing, with no human interaction!

But I like to add some human touch to it

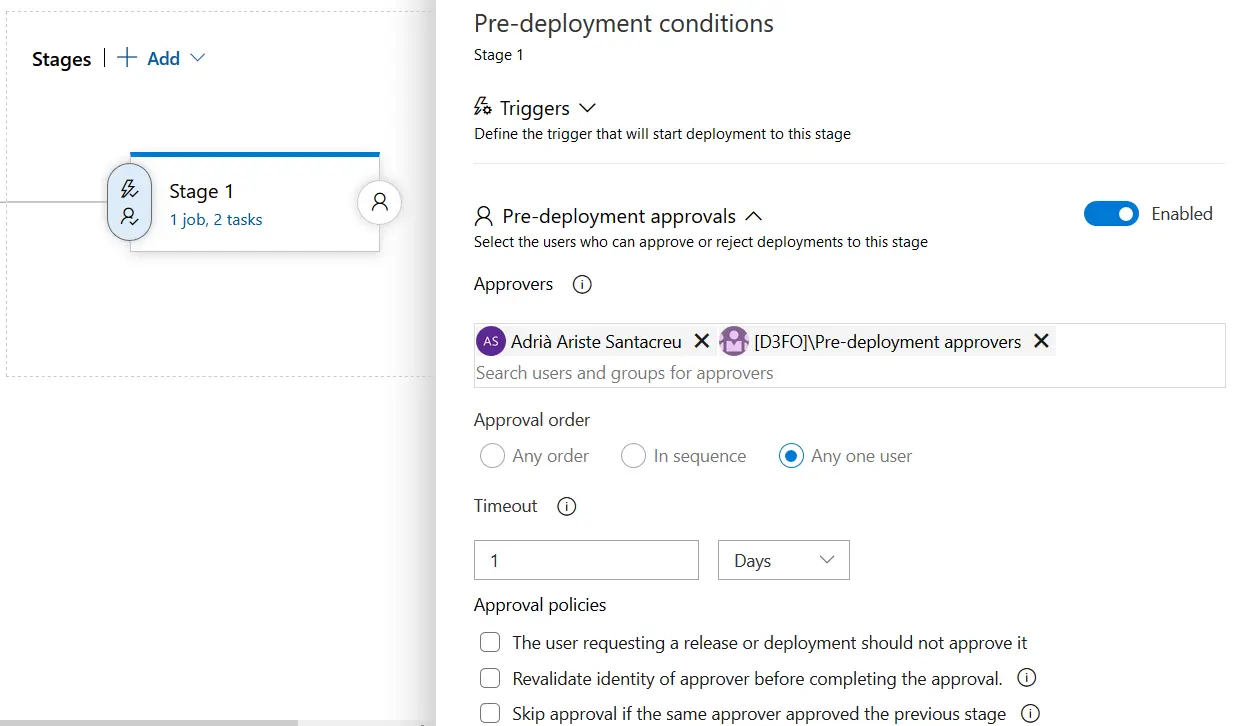

If you don’t like not knowing if an environment is being updated… well that’s IMPOSSIBLE because LCS will SPAM you to make sure you know what’s going on. But if you don’t want to be completely replaced by robots you can add approvals to your release flow:

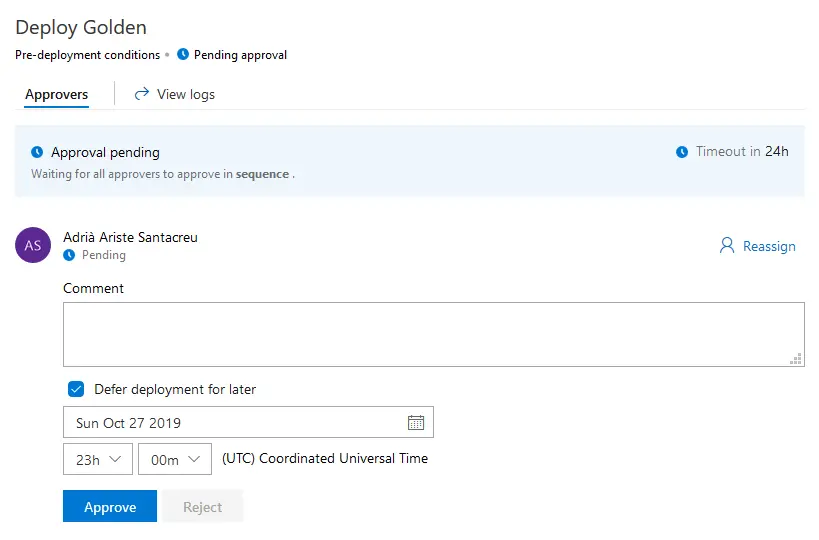

By clicking the lightning + person button on the left of your release, you can set the approvers (a person or a group, which is quite practical), the kind of approval (all or a single approver) and the timeout. You will also receive an email with a link to the approval form:

And you can also postpone the deployment! Everything is awesome!

Extra bonus!

A little tip. Imagine you have the following release:

This will update 3 environments, but will also upload the same Deployable Package three times to LCS. Wouldn’t it be nice to have a single upload and have all the deployments use that file? Yes, but we can’t pass the output variable from the upload to other stages 🙁 That’s unfortunately right. But we can do something with a little help from our friend PowerShell!

Update a variable in a release

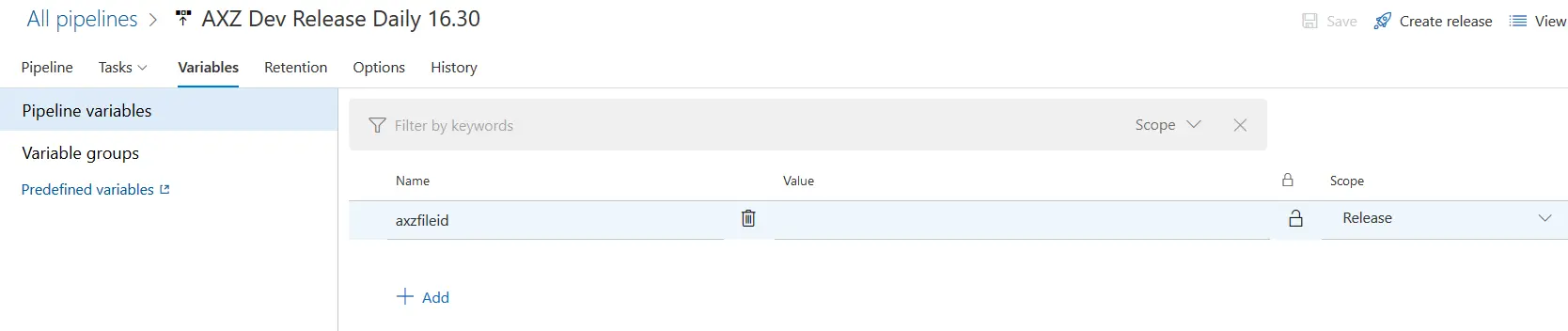

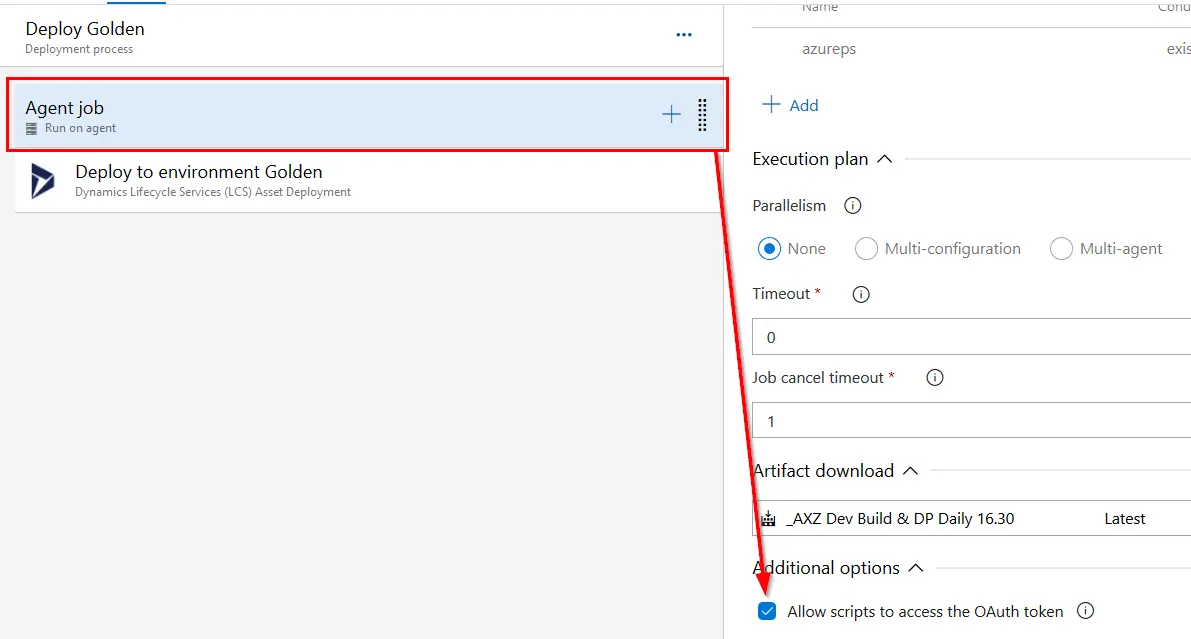

What we need to do is create a variable in the release definition and set its scope to “Release”:

Then, for each stage, we need to enable the “Allow scripts to access the OAuth token” checkbox in the agent job:

I’ll explain later why we’re enabling this. Now we only need to update this variable after uploading the DP to LCS. Add an inline PowerShell step after the upload one and do this:

# Populate & store value to update pipeline

$assetId= "$(GoldenUpload.FileAssetId)"

Write-Output ('##vso[task.setvariable variable=localAsset]{0}' -f $assetId)

#region variables

$ReleaseVariableName = 'axzfileid'

$releaseurl = ('{0}{1}/_apis/release/releases/{2}?api-version=5.0' -f $($env:SYSTEM_TEAMFOUNDATIONSERVERURI), $($env:SYSTEM_TEAMPROJECTID), $($env:RELEASE_RELEASEID) )

#endregion

#region Get Release Definition

Write-Host "URL: $releaseurl"

$Release = Invoke-RestMethod -Uri $releaseurl -Headers @{

Authorization = "Bearer $env:SYSTEM_ACCESSTOKEN"

}

#endregion

#region Output current Release Pipeline

#Write-Output ('Release Pipeline variables output: {0}' -f $($Release.variables | #ConvertTo-Json -Depth 10))

#endregion

#Update axzfileid with new value

$release.variables.($ReleaseVariableName).value = $assetId

#region update release pipeline

Write-Output ('Updating Release Definition')

$json = @($release) | ConvertTo-Json -Depth 99

$enc = [System.Text.Encoding]::UTF8

$json= $enc.GetBytes($json)

Invoke-RestMethod -Uri $releaseurl -Method Put -Body $json -ContentType "application/json" -Headers @{Authorization = "Bearer $env:SYSTEM_ACCESSTOKEN" }

#endregion

You need to change the following:

- The line $assetId= "$(GoldenUpload.FileAssetId)": change $(GoldenUpload.FileAssetId) to the output variable name of your upload task.

- The line $ReleaseVariableName = 'axzfileid': change axzfileid to the name of your Release variable.

And you’re done. This script uses Azure DevOps’ REST API to update the variable value with the file ID, and we enabled the OAuth token checkbox to allow the use of this API without having to pass any user credentials. This is obviously not my idea; I’ve done this thanks to a post from Stefan Stranger’s blog.
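For readers who want to see the data flow without running a pipeline, here is an offline Python sketch of the same three steps: build the release URL, change one variable in the release document, and serialise it for the PUT request. It makes no HTTP calls. The function names and sample values are made up for illustration, and the release document is reduced to the only part the script touches.

```python
import copy
import json


def build_release_url(server_uri, project_id, release_id, api_version="5.0"):
    """Build the release URL the same way the script's format string does."""
    # The script concatenates the server URI and the project ID directly,
    # so make sure the server URI ends with a slash.
    base = server_uri if server_uri.endswith("/") else server_uri + "/"
    return "{0}{1}/_apis/release/releases/{2}?api-version={3}".format(
        base, project_id, release_id, api_version
    )


def set_release_variable(release, name, value):
    """Return a copy of the release document with one variable's value replaced."""
    if name not in release.get("variables", {}):
        raise KeyError("Release variable not defined: " + name)
    updated = copy.deepcopy(release)
    updated["variables"][name]["value"] = value
    return updated


def to_put_body(release):
    """Serialise the release document as UTF-8 JSON bytes, ready to be sent."""
    return json.dumps(release).encode("utf-8")


# Made-up sample values, for illustration only.
url = build_release_url("https://example.invalid/contoso/", "project-guid", 42)
release = {"id": 42, "variables": {"axzfileid": {"value": ""}}}
updated = set_release_variable(release, "axzfileid", "asset-guid")
print(url)
print(to_put_body(updated))
```

Note that the variable must already exist in the release definition, which is why it was created with the “Release” scope before adding the script.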

Now, in the deploy stages you need to retrieve your variable’s value in the following way:

Don’t forget the ( ), as in $(axzfileid), or it won’t work!

And with these small changes you can have a release like this:

With a single DP upload to LCS and multiple deployments using the file uploaded in the first stage. With approvals, and delays, and emails, and everything!

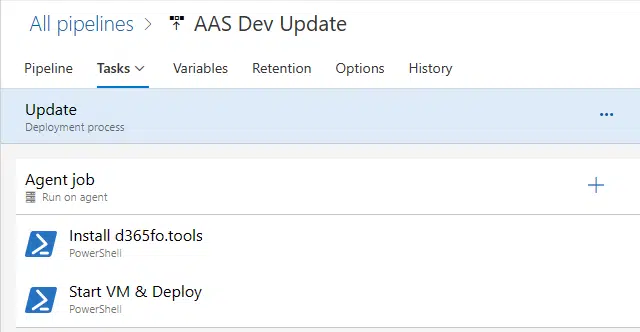

Update VMs using pipelines and d365fo.tools

Now that Microsoft will also update additional Dynamics 365 Finance and Operations Sandbox environments, partners and customers will only need to take care of updating cloud-hosted environments, as we’ve always done.

I’m sure each team manages this differently: maybe each developer is left to update their own VM, or someone on the customer or partner side does it. And that’s in the best cases; maybe nobody is updating the developer machines…

If you want to know more about builds, releases, and the Dev ALM of Dynamics 365 you can read my full guide on MSDyn365 & Azure DevOps ALM.

Today, I’m bringing you a PowerShell script that you can run in a pipeline that will automatically update all your developer virtual machines!

Update script

As I’ve already done many times, I’ll be using Mötz Jensen’s d365fo.tools to run all the operations.

This is the complete script:

# CHANGE THIS!!

$AssetId = "LCS_ASSET_ID"

$User = "YOUR_USER"

$Pass = "YOUR_USER_PASSWORD"

$ClientId = "AAD AppId"

$ProjectId = "LCS_PROJECT_ID"

#Get LCS auth token

Get-D365LcsApiToken -ClientId $ClientId -Username $User -Password $Pass -LcsApiUri https://lcsapi.lcs.dynamics.com | Set-D365LcsApiConfig -ProjectId $ProjectId

Get-D365LcsApiConfig

# Get list of all LCS project environments

$Environments = Get-D365LcsEnvironmentMetadata -TraverseAllPages

$StartedEnvs = @()

Write-Host "=================== STARTING ENVIRONMENTS ==================="

Foreach ($Env in $Environments)

{

# Start Dev VMs only

if ($Env.EnvironmentType -eq "DevTestDev" -and $Env.CanStart)

{

$EnvStatus = Invoke-D365LcsEnvironmentStart -EnvironmentId $Env.EnvironmentId

if ($EnvStatus.IsSuccess -eq "True") {

Write-Host ("Environment {0} started." -f $Env.EnvironmentName)

$StartedEnvs += $Env.EnvironmentId

}

else {

Write-Host ("Environment {0} couldn't be started. Error message: {1}" -f $Env.EnvironmentName, $EnvStatus.ErrorMessage)

}

}

}

Write-Host "=================== STARTING ENVIRONMENTS DONE ==================="

Write-Host "=================== SLEEPING FOR 180 seconds ==================="

# Wait 3 minutes for the VMs to start

Start-Sleep -Seconds 180

$Retries = 0

Write-Host "=================== STARTING DEPLOYMENT ==================="

Do

{

Foreach ($EnvD in $StartedEnvs)

{

$EnvStatus = Get-D365LcsEnvironmentMetadata -EnvironmentId $EnvD

# If the VM has started, deploy the DP

if ($EnvStatus.DeploymentStatusDisplay -eq "Deployed")

{

$OpResult = Invoke-D365LcsDeployment -AssetId $AssetId -EnvironmentId $EnvD

if ($OpResult.IsSuccess -eq "True") {

Write-Host ("Updating environment {0} has started." -f $EnvD)

$StartedEnvs = $StartedEnvs -notmatch $EnvD

}