If you’re integrating Dynamics 365 Finance & Operations with third parties, and your organization or the third party uses a firewall, you might’ve found yourself being asked “which is the production/sandbox IP address?”.

Well, we don’t know. We know which IP it has now, but we don’t know whether it will keep the same IP in the future, so you will have to monitor it if you plan on opening single IPs. Dag Calafell wrote about this on his blog: Static IP not guaranteed for Dynamics 365 for Finance and Operations.

So, what should I do if I have a firewall and need to allow access to/from Dynamics 365 F&O or any other Azure service? The network team usually doesn’t like the answer: if you can’t allow an FQDN, you should open all the address ranges for the datacenter and service you want to access. That’s a lot of addresses, and it makes the network team sad.

In today’s post, I’ll show you a way to keep an eye on the ranges published by Microsoft and, hopefully, make our lives easier.

WARNING: prompted by this LinkedIn comment, I want to point out that the ranges you can find using this method are for INBOUND communication into Dynamics 365 or whichever service you query. For outbound communication, check this on Learn: For my Microsoft-managed environments, I have external components that have dependencies on an explicit outbound IP safe list. How can I ensure my service is not impacted after the move to self-service deployment?

Azure IP Ranges: can we monitor them?

Microsoft offers a JSON file you can download with the ranges for all its public cloud datacenters and different services. Yes, a file, not an API.

But wait, don’t complain yet: there IS an API we can use, the Azure REST API, specifically the Service Tags section under Virtual Networks. Calling this API and downloading the file give slightly different results, because the JSON is structured differently, but either could serve our purpose.
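Whichever source you pick, you end up deserializing the same kind of structure: a root changeNumber and a values array in which each service tag has a name plus properties with its own changeNumber, region and addressPrefixes. Below is a minimal sketch of that model using System.Text.Json (the function itself uses Newtonsoft.Json). The class names are hypothetical, only the fields used later are mapped, and, as far as I can tell, the REST API returns changeNumber as a string while the downloadable file uses a number, so the sketch accepts both.

```csharp
using System;
using System.Linq;
using System.Text.Json;
using System.Text.Json.Serialization;

// Hypothetical model of the service tags JSON. Only the fields the function
// uses are mapped; System.Text.Json ignores everything else.
public sealed class ServiceTagsResponse
{
    // Accepts both "63" (string) and 63 (number).
    [JsonPropertyName("changeNumber")]
    [JsonNumberHandling(JsonNumberHandling.AllowReadingFromString)]
    public long ChangeNumber { get; set; }

    [JsonPropertyName("values")]
    public ServiceTagValue[] Values { get; set; } = Array.Empty<ServiceTagValue>();
}

public sealed class ServiceTagValue
{
    [JsonPropertyName("name")]
    public string Name { get; set; } = "";

    [JsonPropertyName("properties")]
    public ServiceTagProperties Properties { get; set; } = new ServiceTagProperties();
}

public sealed class ServiceTagProperties
{
    [JsonPropertyName("changeNumber")]
    [JsonNumberHandling(JsonNumberHandling.AllowReadingFromString)]
    public long ChangeNumber { get; set; }

    [JsonPropertyName("region")]
    public string Region { get; set; } = "";

    [JsonPropertyName("addressPrefixes")]
    public string[] AddressPrefixes { get; set; } = Array.Empty<string>();
}

public static class ServiceTagParser
{
    // Deserializes the whole document; an empty model is returned for "null".
    public static ServiceTagsResponse Parse(string json) =>
        JsonSerializer.Deserialize<ServiceTagsResponse>(json) ?? new ServiceTagsResponse();

    // Returns the service tag whose name matches, ignoring case, or null.
    public static ServiceTagValue FindTag(ServiceTagsResponse response, string tagName) =>
        response.Values.FirstOrDefault(
            v => string.Equals(v.Name, tagName, StringComparison.OrdinalIgnoreCase));
}
```

Matching the name case-insensitively means both “AzureCloud.WestEurope” and “azurecloud.westeurope” find the same entry.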

My proposal: an Azure function

We will be querying a REST API, so we could perfectly well use a Power Automate flow to do this. Why am I overdoing things with an Azure function? Mainly because I hate parsing JSON in Power Automate, but also because I love Azure functions.

Authentication

To authenticate against the Azure REST API we need to create an Azure Active Directory app registration. We’ve done this a million times, right? No need to repeat it.

We will also need a client secret for that app registration. Keep both the application (client) ID and the secret, because the app registration will act as our service principal.

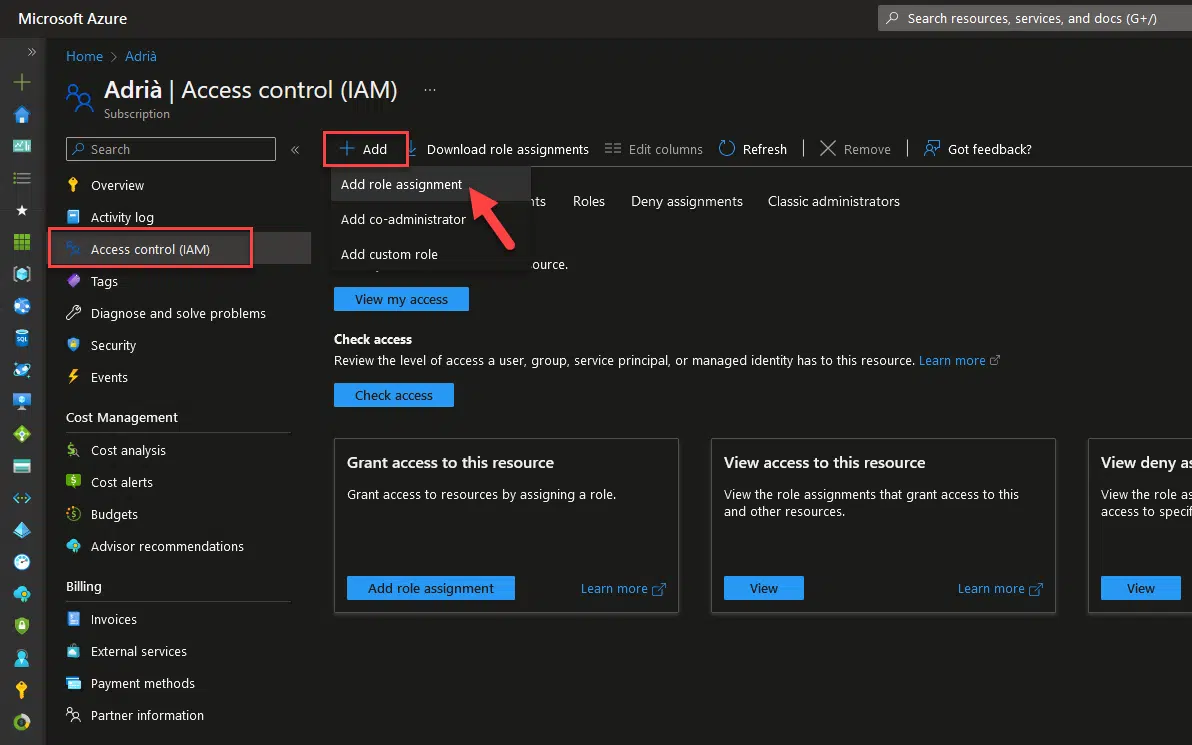

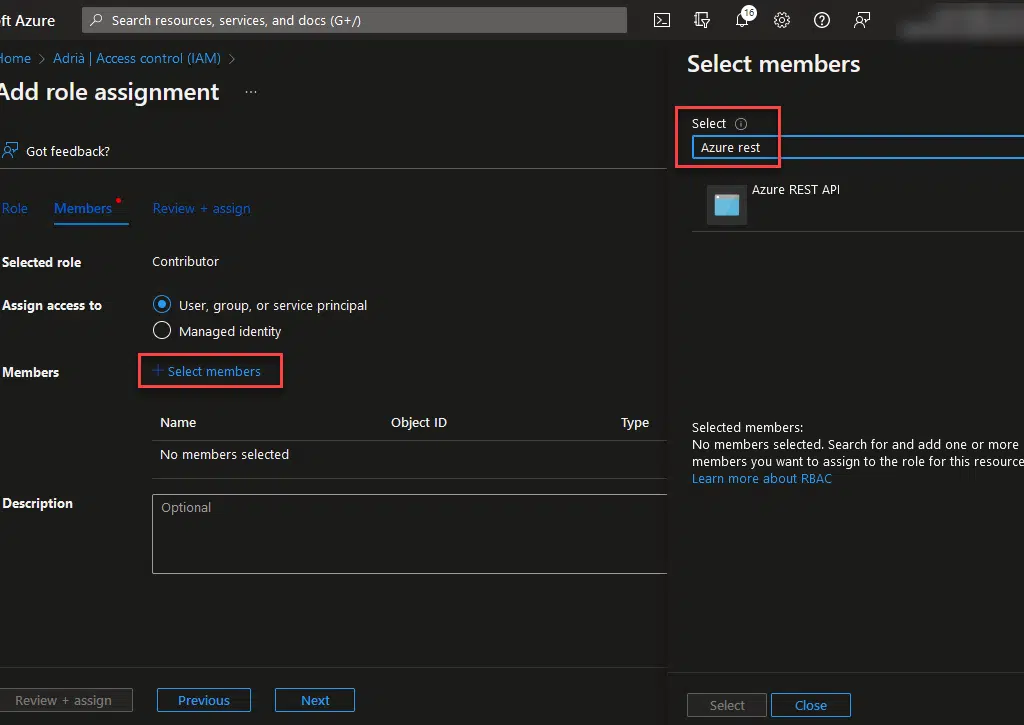

Go to your subscription, to the “Access control (IAM)” section and add a new role assignment:

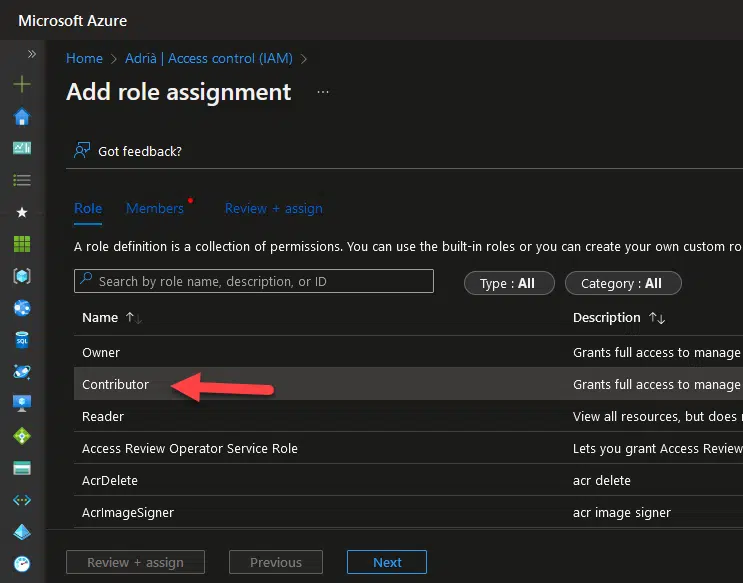

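Select the Contributor role and click Next. (If you prefer least privilege, Reader should be enough, since the function only reads service tags.)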

Click the “Select members” link and look for the name of the app registration you created before.

Finally, click the “Select” button and then “Review + assign” to finish. Now we have a service principal with access to the subscription, which we will use to authenticate against the Azure REST API.
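The GetToken helper the function calls isn’t shown in the post. Here is a minimal sketch of what it could look like, assuming the client credentials grant against the v1 token endpoint (the tokenUrl setting listed later ends in /oauth2/token, which takes a resource parameter rather than a scope) and reading the appId, secret, tokenUrl and resource application settings. TokenSketch and its methods are hypothetical names; Azure.Identity’s ClientSecretCredential is an alternative if you’d rather not build the request by hand.

```csharp
using System;
using System.Collections.Generic;
using System.Net.Http;
using System.Text.Json;
using System.Threading.Tasks;

public static class TokenSketch
{
    // Form fields for an OAuth 2.0 client credentials request (v1 endpoint).
    public static Dictionary<string, string> BuildTokenForm(string appId, string secret, string resource) =>
        new Dictionary<string, string>
        {
            ["grant_type"] = "client_credentials",
            ["client_id"] = appId,
            ["client_secret"] = secret,
            ["resource"] = resource
        };

    // Extracts access_token from the token endpoint's JSON response.
    public static string ReadAccessToken(string responseJson)
    {
        using var doc = JsonDocument.Parse(responseJson);
        return doc.RootElement.GetProperty("access_token").GetString();
    }

    // Posts the form to tokenUrl and returns the bearer token for the REST API.
    public static async Task<string> GetTokenAsync(HttpClient http)
    {
        var form = BuildTokenForm(
            Environment.GetEnvironmentVariable("appId"),
            Environment.GetEnvironmentVariable("secret"),
            Environment.GetEnvironmentVariable("resource"));

        using var content = new FormUrlEncodedContent(form);
        using var response = await http.PostAsync(
            Environment.GetEnvironmentVariable("tokenUrl"), content);
        response.EnsureSuccessStatusCode();
        return ReadAccessToken(await response.Content.ReadAsStringAsync());
    }
}
```

The token then goes in an “Authorization: Bearer …” header on the call to the service tags endpoint.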

The function

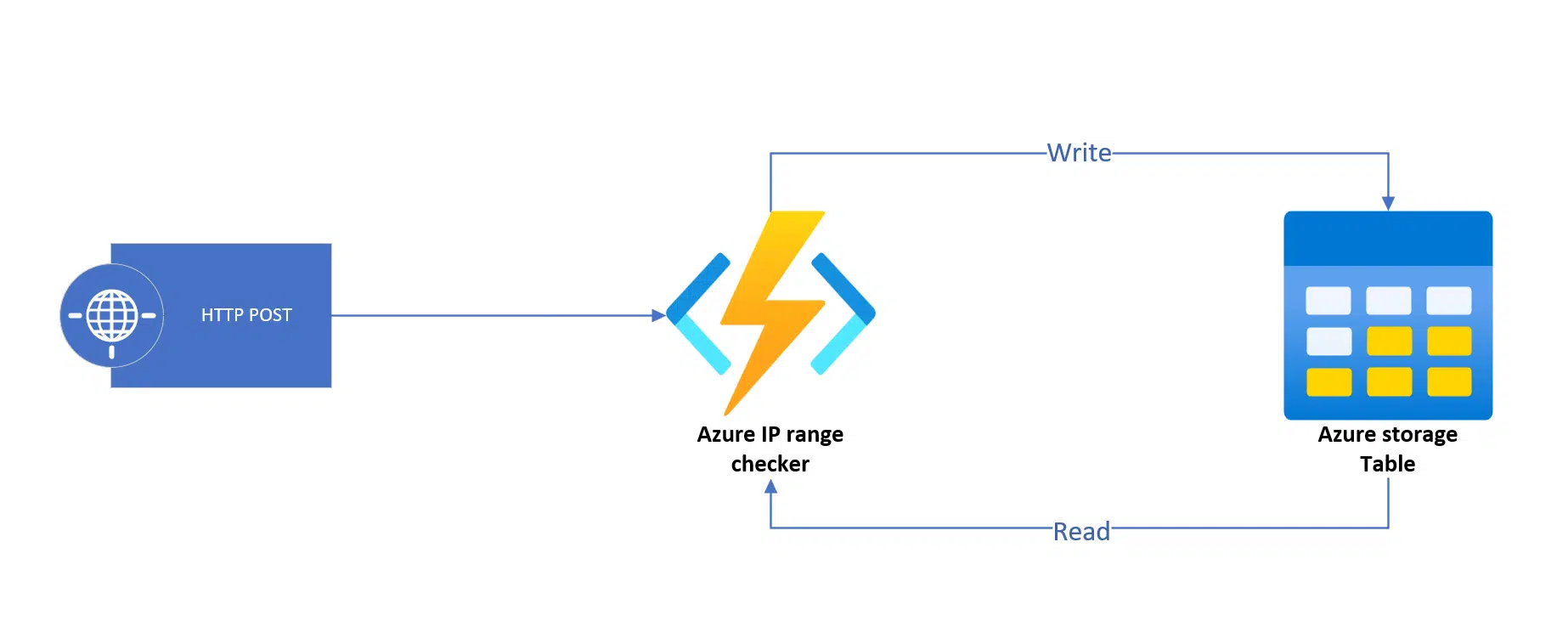

The function will have an HTTP trigger and will read and write data in an Azure Table. We will make a POST call to the function’s endpoint, and that will trigger the process.

Then the function downloads the JSON content from the Azure REST API, checks the table for a previously stored version of the addresses, compares both, and saves the latest version into the table. This is the code of the function:

```csharp
using System;
using System.IO;
using System.Linq;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.Mvc;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.Http;
using Microsoft.Extensions.Logging;
using Newtonsoft.Json;

// This method lives in a static class together with the helpers it calls
// (GetToken, GetFile, ReadTableAsync, WriteToTableAsync, CompareAddresses).
[FunctionName("CheckRanges")]
public static async Task<IActionResult> Run(
    [HttpTrigger(AuthorizationLevel.Function, "post", Route = null)] HttpRequest req,
    ILogger log)
{
    log.LogInformation("C# HTTP trigger function processed a request.");

    // Empty containers, returned whenever there is nothing to report,
    // so the caller always gets both arrays
    string emptyDiff = JsonConvert.SerializeObject(new AddressChanges
    {
        addedAddresses = Array.Empty<string>(),
        removedAddresses = Array.Empty<string>()
    });
    string ret = emptyDiff;

    try
    {
        string body = string.Empty;
        using (StreamReader streamReader = new StreamReader(req.Body))
        {
            body = await streamReader.ReadToEndAsync();
        }

        if (string.IsNullOrEmpty(body))
        {
            throw new Exception("No request body found.");
        }

        dynamic data = JsonConvert.DeserializeObject(body);
        string serviceTagRegion = data.serviceTagRegion;
        string region = data.region;

        if (string.IsNullOrEmpty(serviceTagRegion) || string.IsNullOrEmpty(region))
        {
            throw new Exception("The values in the request body cannot be empty.");
        }

        // Get a token and call the API
        var token = await GetToken();
        var latestServiceTag = await GetFile(token, region);

        if (latestServiceTag is null)
        {
            throw new Exception("No tag file has been downloaded.");
        }

        // Read the previously stored version from the table, if it exists
        var existingServiceTagEntity = await ReadTableAsync();

        // Same root changeNumber as the stored version: there are no changes
        if (existingServiceTagEntity is not null
            && existingServiceTagEntity.ChangeNumber == latestServiceTag.changeNumber)
        {
            log.LogInformation("The downloaded file has the same changeNumber as the existing one. No changes.");
            return new OkObjectResult(emptyDiff);
        }

        // Process the new file: find the requested service tag, ignoring case
        var serviceTagSelected = latestServiceTag.values.FirstOrDefault(
            st => string.Equals(st.name, serviceTagRegion, StringComparison.OrdinalIgnoreCase));

        if (serviceTagSelected is null)
        {
            // Service tag not found: return empty JSON containers
            return new OkObjectResult(emptyDiff);
        }

        ServiceTagAddresses addresses = new ServiceTagAddresses
        {
            rootchangenumber = latestServiceTag.changeNumber,
            nodename = serviceTagSelected.name,
            nodechangenumber = serviceTagSelected.properties.changeNumber,
            addresses = serviceTagSelected.properties.addressPrefixes
        };

        // If a previous version exists in the table, get the differences
        if (existingServiceTagEntity is not null)
        {
            string[] existingAddresses = JsonConvert.DeserializeObject<string[]>(existingServiceTagEntity.Addresses);
            ret = CompareAddresses(existingAddresses, addresses.addresses);
        }

        // Finally, store the new addresses in the table
        await WriteToTableAsync(addresses);
    }
    catch (Exception ex)
    {
        log.LogError(ex, "Error while checking the service tag ranges.");
        return new BadRequestObjectResult(ex.Message);
    }

    return new OkObjectResult(ret);
}
```

I’ve added comments to the code to make it clearer, but let’s go through some of it. You can find the complete Visual Studio solution in the AzureTagsIPWatcher GitHub repo.
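CompareAddresses is called in the function, but its body isn’t shown in the post. One possible implementation, assuming both arguments are arrays of CIDR prefixes, is a set difference in each direction, serialized with the same removedAddresses and addedAddresses names the function returns. This sketch uses LINQ’s Except and System.Text.Json; AddressDiff is a hypothetical class name and the repo’s version may differ.

```csharp
using System;
using System.Linq;
using System.Text.Json;

public static class AddressDiff
{
    // Added = prefixes only in the latest list; removed = prefixes only in the
    // stored list. OrdinalIgnoreCase avoids false diffs in IPv6 hex casing.
    public static string CompareAddresses(string[] existing, string[] latest)
    {
        existing ??= Array.Empty<string>();
        latest ??= Array.Empty<string>();

        var added = latest.Except(existing, StringComparer.OrdinalIgnoreCase).ToArray();
        var removed = existing.Except(latest, StringComparer.OrdinalIgnoreCase).ToArray();

        return JsonSerializer.Serialize(new { removedAddresses = removed, addedAddresses = added });
    }
}
```

Note that Except also drops duplicates, which is harmless here because a prefix either is in the service tag or it isn’t.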

Environment variables

You will find this line several times in the code. It is used to retrieve the environment variables:

```csharp
Environment.GetEnvironmentVariable("ContainerName")
```

Azure Functions exposes application settings as environment variables, so once you’ve deployed the function you need to create one application setting for each variable the code reads (when running locally, the same names go in the Values section of local.settings.json). The variables hold the keys, secrets, etc. needed to connect to storage and get the token credentials. These are the ones I have used; fill them in with your own values:

- ContainerConn: the connection string to your Azure storage account.

- ContainerName: the name of your storage account.

- StorageKey: the key for your storage account.

- StorageTable: the name of the table.

- appId: your app registration id.

- secret: the secret in your app registration.

- tokenUrl: the URL used to get the token. Replace YOUR_TENANT_ID with your tenant ID: “https://login.microsoftonline.com/YOUR_TENANT_ID/oauth2/token”.

- resource: “https://management.azure.com/”, this is the root URL we will authenticate to.

- apiUrl: “https://management.azure.com/subscriptions/YOUR_SUBSCRIPTION_ID/providers/Microsoft.Network/locations/{0}/serviceTags?api-version=2022-07-01”, the API endpoint URL. Replace YOUR_SUBSCRIPTION_ID with the ID of the subscription where you added the role assignment for the service principal. The {0} placeholder is where the region from the request goes.
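A missing setting otherwise surfaces later as a confusing null reference, so a small guard can help. The sketch below (FunctionSettings is a hypothetical helper, not from the repo) lists which of the settings above are missing or empty, and builds the endpoint by filling the {0} placeholder, assuming the region is substituted with string.Format as the template suggests.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class FunctionSettings
{
    // Names of the application settings listed above.
    public static readonly string[] Required =
    {
        "ContainerConn", "ContainerName", "StorageKey", "StorageTable",
        "appId", "secret", "tokenUrl", "resource", "apiUrl"
    };

    // Returns the names of required settings that are missing or blank.
    // Pass Environment.GetEnvironmentVariable in production, a fake in tests.
    public static IReadOnlyList<string> Missing(Func<string, string> read) =>
        Required.Where(name => string.IsNullOrWhiteSpace(read(name))).ToList();

    // Fills the {0} placeholder in the apiUrl template with the region.
    public static string BuildServiceTagsUrl(string apiUrlTemplate, string region) =>
        string.Format(apiUrlTemplate, Uri.EscapeDataString(region));
}
```

At the start of Run, something like `var missing = FunctionSettings.Missing(Environment.GetEnvironmentVariable);` followed by a BadRequest listing the missing names would make misconfiguration obvious.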

HTTP call

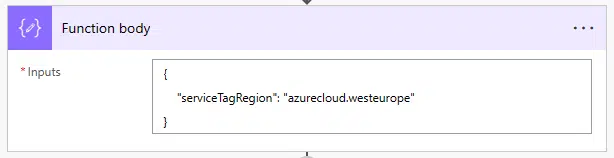

The function expects a POST request with a JSON body containing the service tag (node) we want the addresses for, and the region:

```json
{
  "serviceTagRegion": "azurecloud.westeurope",
  "region": "westeurope"
}
```

Function response

The function returns a JSON object with two containers (arrays), one for added addresses, if any, and one for removed addresses:

```json
{
  "removedAddresses": [],
  "addedAddresses": []
}
```

Using the function

Now we have an Azure function with an HTTP trigger that returns a JSON string with two nodes holding the changed addresses, or empty nodes if there are no changes.

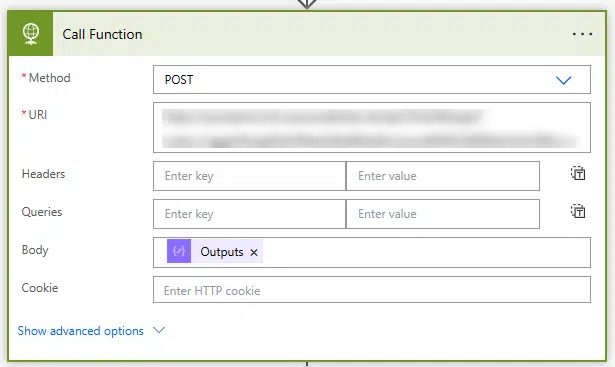

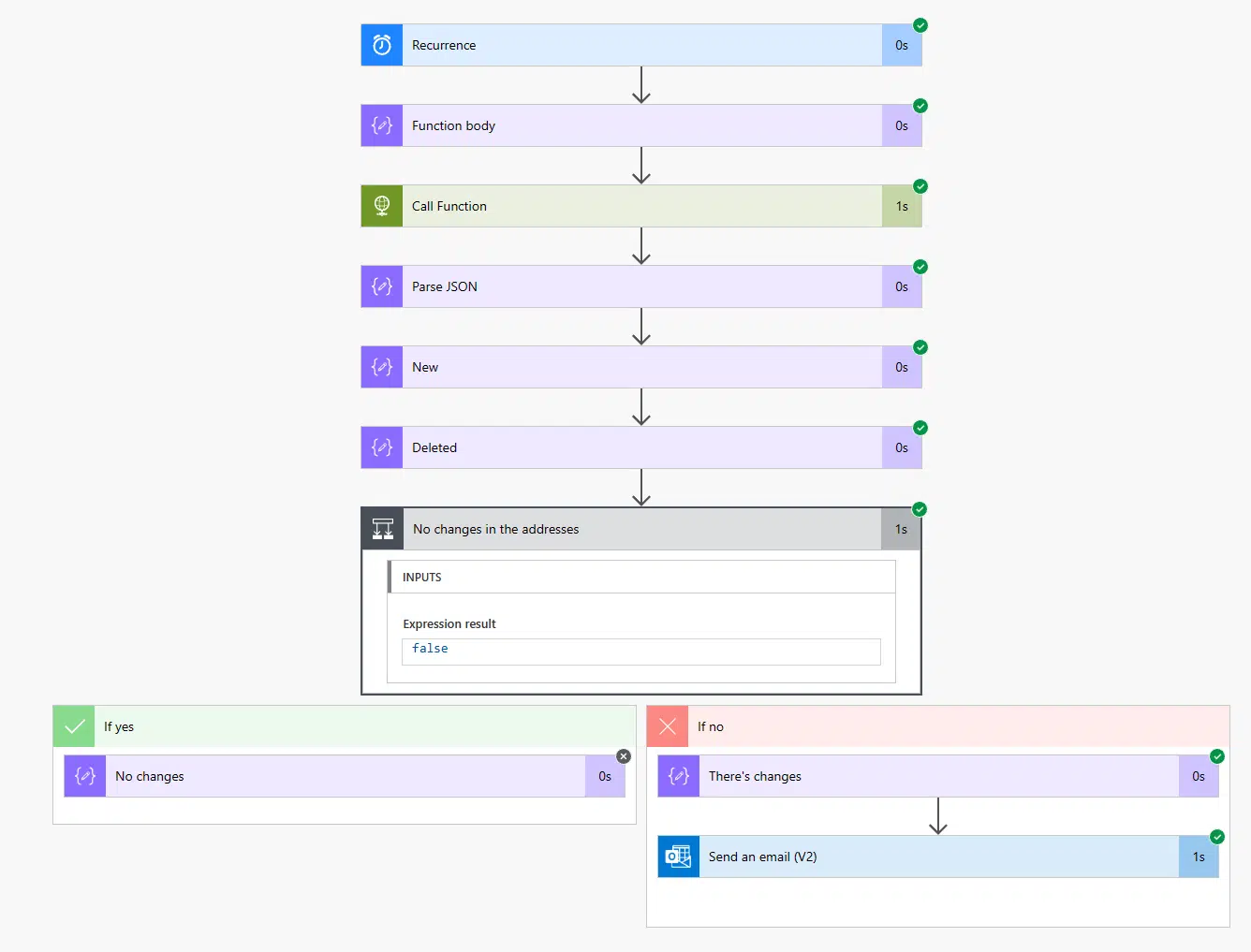
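Power Automate is just one possible caller. If you’d rather call the function from code, here is a sketch that sends the request body shown earlier and applies the same “are there any changes?” test the flow will make. CheckRangesClient and RangeChanges are hypothetical names, and the function URL is assumed to include its ?code= key, since the trigger uses AuthorizationLevel.Function.

```csharp
using System;
using System.Net.Http;
using System.Text;
using System.Text.Json;
using System.Text.Json.Serialization;
using System.Threading.Tasks;

// Client-side view of the function's response body.
public sealed class RangeChanges
{
    [JsonPropertyName("removedAddresses")]
    public string[] RemovedAddresses { get; set; } = Array.Empty<string>();

    [JsonPropertyName("addedAddresses")]
    public string[] AddedAddresses { get; set; } = Array.Empty<string>();
}

public static class CheckRangesClient
{
    // Builds {"serviceTagRegion": ..., "region": ...}.
    public static string BuildRequestBody(string serviceTagRegion, string region) =>
        JsonSerializer.Serialize(new { serviceTagRegion, region });

    // True when either array has at least one element.
    public static bool HasChanges(string responseBody)
    {
        var changes = JsonSerializer.Deserialize<RangeChanges>(responseBody);
        return changes is not null
            && ((changes.AddedAddresses?.Length ?? 0) > 0
                || (changes.RemovedAddresses?.Length ?? 0) > 0);
    }

    // POSTs to the function and reports whether the ranges changed.
    public static async Task<bool> CheckAsync(HttpClient http, string functionUrl,
        string serviceTagRegion, string region)
    {
        using var content = new StringContent(
            BuildRequestBody(serviceTagRegion, region), Encoding.UTF8, "application/json");
        using var response = await http.PostAsync(functionUrl, content);
        response.EnsureSuccessStatusCode();
        return HasChanges(await response.Content.ReadAsStringAsync());
    }
}
```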

How can we trigger this function on a schedule? With Power Automate, of course! We can use a scheduled cloud flow that runs once a day, or as often as you want.

Once we have a trigger, we will call the function. I like to create “Compose” blocks where I “initialize” the value of several “variables”. They’re not really variables, but you get what I mean, right?

In this first Compose block I just add the request body for the function.

Then we add an HTTP action, set it to the POST method, add the function URL, and put the output of the previous Compose block in the body:

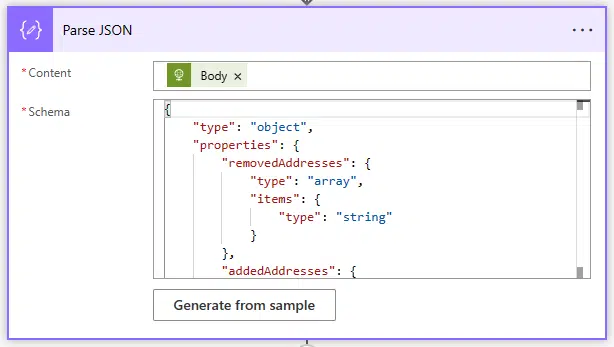

The next step is parsing the function’s response. Since it always returns both containers, filled in or empty, you can use a sample response as the payload to generate the schema. Finally, set the content to the Body output of the HTTP action:

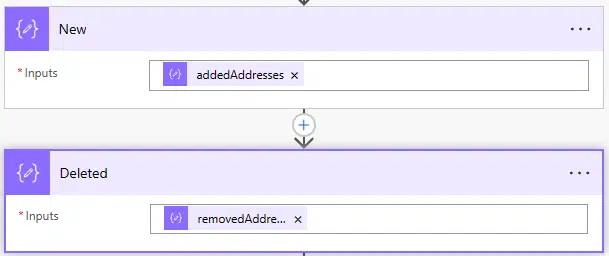

Then I will create two more “Compose” blocks where I’ll save the output values of parsing the JSON, one for the added addresses and one for the removed ones:

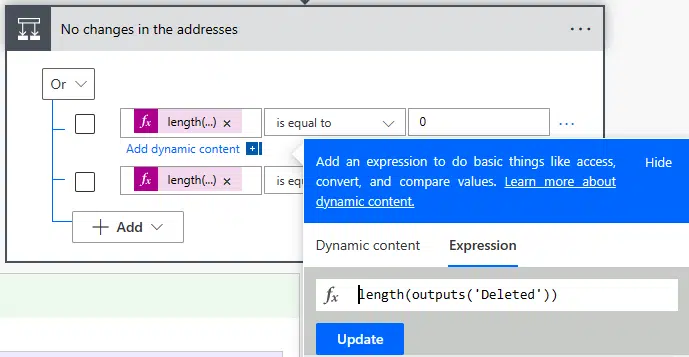

Now I add a condition to check whether there’s anything inside the added or removed arrays. How can we check this? We use an expression in the value field to check whether each container’s length is 0:

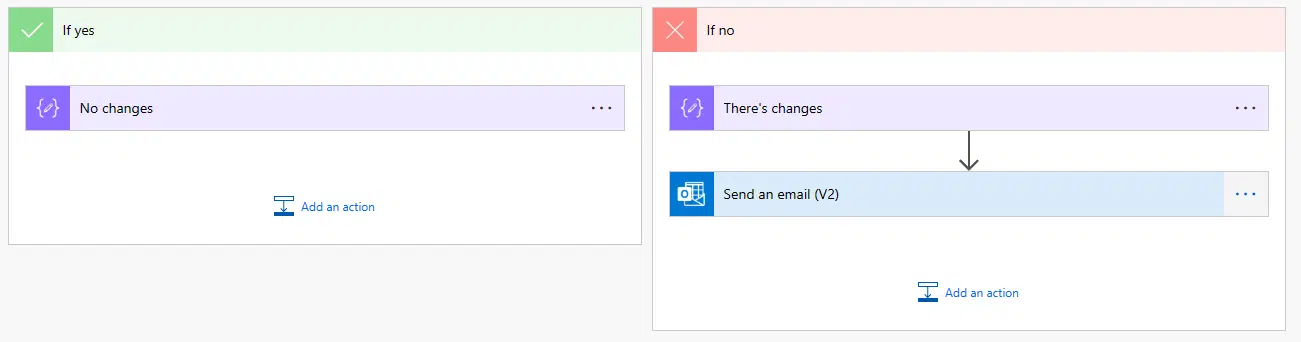

If it’s 0 (“If yes” branch) we do nothing, and if it contains elements (“If no” branch) we send an email.

Let’s test it!

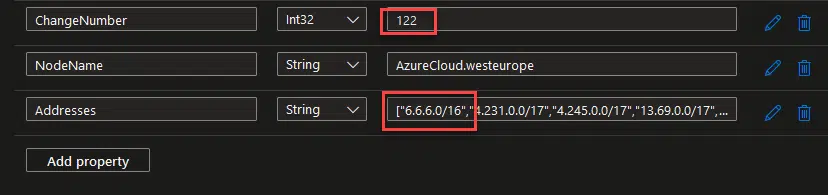

To test it, I’ll go into my Azure Table and edit its latest entry: since I’ve been running the function locally several times, the stored version already matches the latest one, so there would be nothing to report. I’ll change the values of the ChangeNumber and Addresses columns.
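The Addresses column holds the prefixes as a JSON array (the function deserializes it into a string[]), so one way to fake a change is to drop a real prefix and add one that can never be in a service tag. The hypothetical helper below does that with 192.0.2.0/24, the TEST-NET-1 documentation range from RFC 5737. Remember to also change ChangeNumber to a different value; otherwise the function sees the same changeNumber and returns before comparing anything.

```csharp
using System;
using System.Linq;
using System.Text.Json;

public static class TestFixture
{
    // Documentation-only range (RFC 5737), never part of a real service tag.
    private const string FakePrefix = "192.0.2.0/24";

    // Returns a tampered copy of the stored Addresses JSON: the first real
    // prefix is dropped (the next run reports it as added) and the fake prefix
    // is appended (the next run reports it as removed).
    public static string TamperAddresses(string addressesJson)
    {
        var addresses = JsonSerializer.Deserialize<string[]>(addressesJson) ?? Array.Empty<string>();
        var tampered = addresses.Skip(1).Append(FakePrefix).ToArray();
        return JsonSerializer.Serialize(tampered);
    }
}
```

Paste the returned string into the Addresses column, and the next run should report exactly one added and one removed prefix.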

And now run the flow…

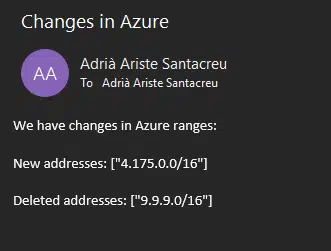

We can see the flow has gone through the “If no” branch of the condition, because the arrays contained changes, and I can see them in the email I’ve received.

And that’s all! As I said at the beginning, this could also be done using only a Power Automate cloud flow, but I preferred using an Azure function to do the dirty job of comparing values.

You can find all the code in the GitHub project AzureTagsIPWatcher.