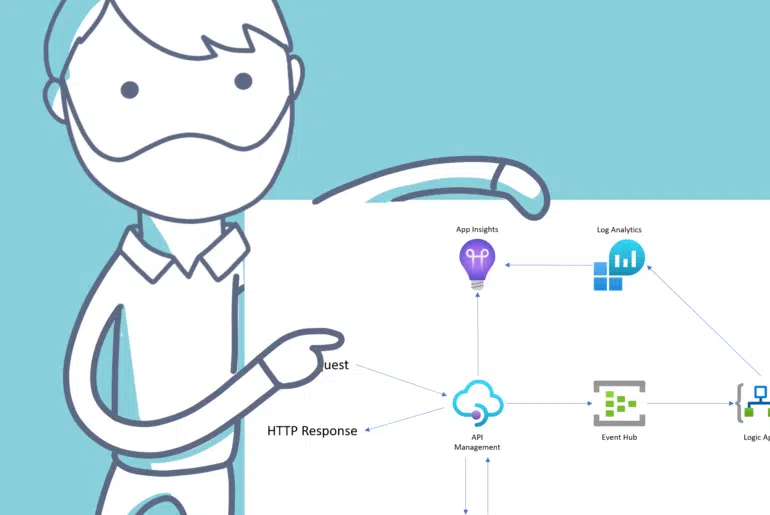

Today I’m bringing you a post that’s all about Dynamics 365 F&O, but the main focus is on security, specifically Microsoft Sentinel. A few days ago it was announced that a Microsoft Sentinel Solution for F&O had been published, and it’s currently in preview. Let’s learn a bit about it!

Sometimes we overlook the security aspects of things that are not directly related to F&O, especially resources like networking, storage accounts, dev VMs, Microsoft Entra ID (this is Azure AD’s new name!) or Bastion.

And that’s not because we don’t care about security, but because we’re Dynamics 365 people, and sometimes we lack knowledge in other areas. If you’re lucky, you’ll have an in-house security team that takes care of that; otherwise, we need to train ourselves a bit.

This is the first time I’ve used Microsoft Sentinel, and I’m surely missing out on a lot of things and features. Time to learn!