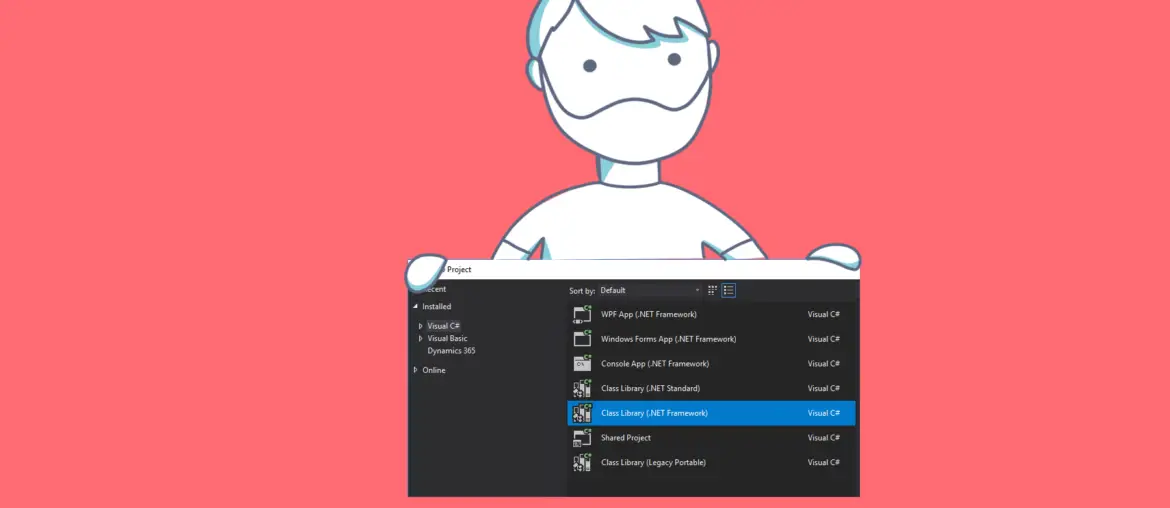

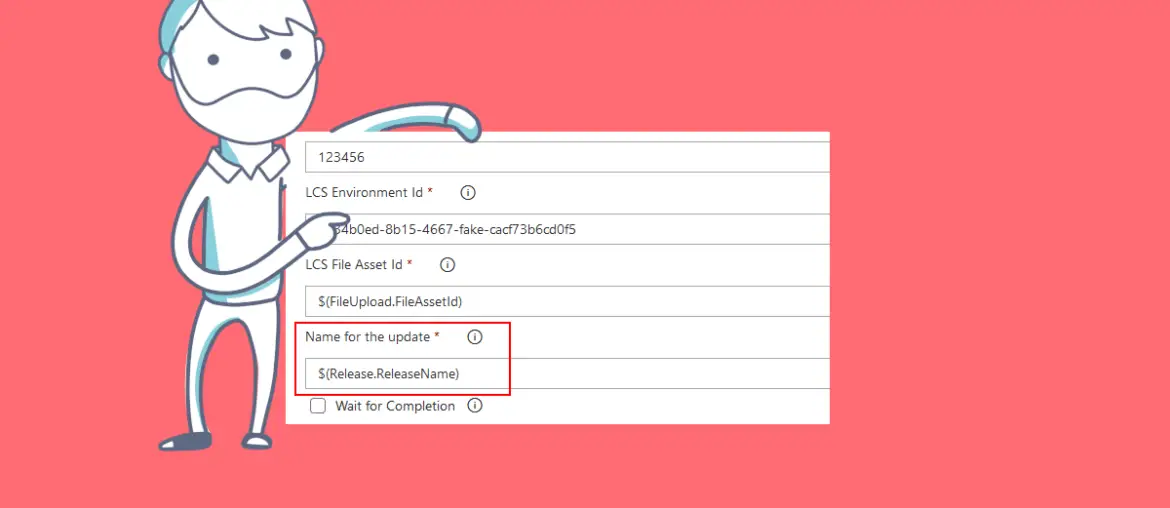

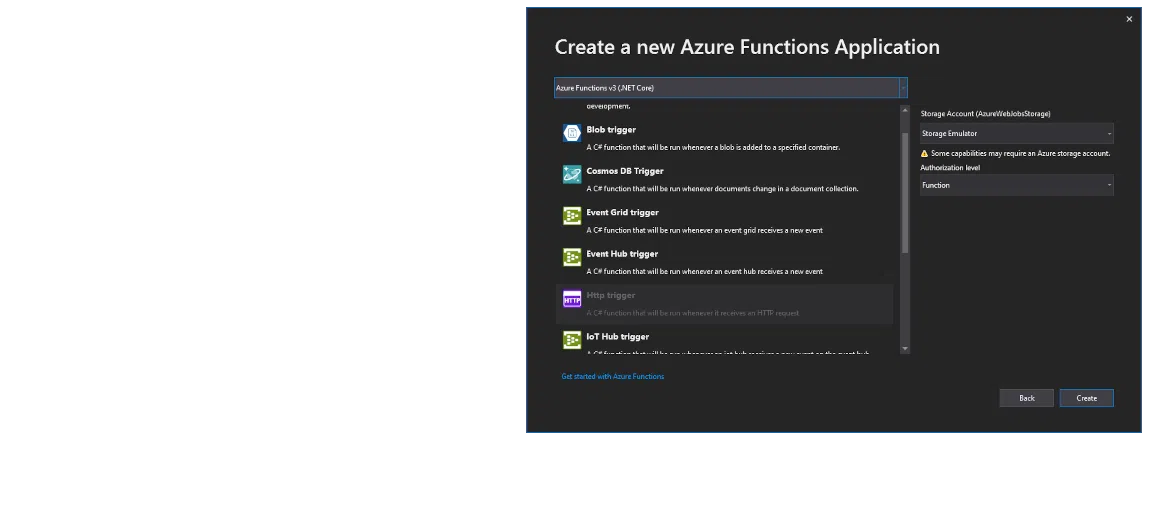

I bet that most of us have had to develop some .NET class library to solve something in Dynamics 365 Finance and Operations. You create a C# project, build it, and add the DLL as a reference in your FnO project. Don’t do that anymore! You can add the .NET project to source control, build it in your pipeline, and the DLL gets added to the deployable package!

I’ve been trying this during the last days after a conversation on Yammer, and while I’ve managed to build .NET and X++ code in the same pipeline, I’ve found some issues or limitations.

If you want to know more about builds, releases, and the Dev ALM of Dynamics 365 you can read my full guide on MSDyn365 & Azure DevOps ALM.