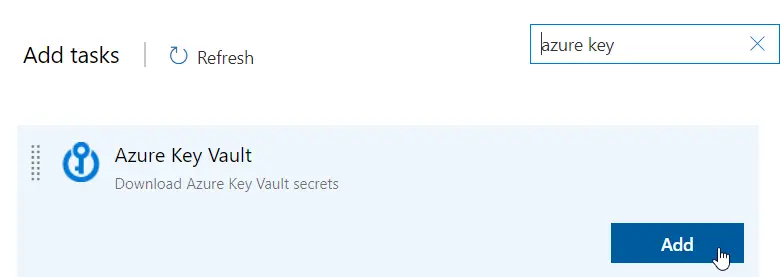

There’s a new version and a new task for our release pipelines that use the Azure-hosted agents. These changes have been introduced recently to support the new MSAL authentication libraries for the LCS service connection used to upload and deploy the deployable packages.

The current service connections use Azure Active Directory (Azure AD) Authentication Library (ADAL), and support for ADAL will end in June 2022.

This means that if we don’t update the Asset Upload and Asset Deployment to their new versions (1.* and 2.* respectively) the release pipelines could stop working after 30th June 2022.