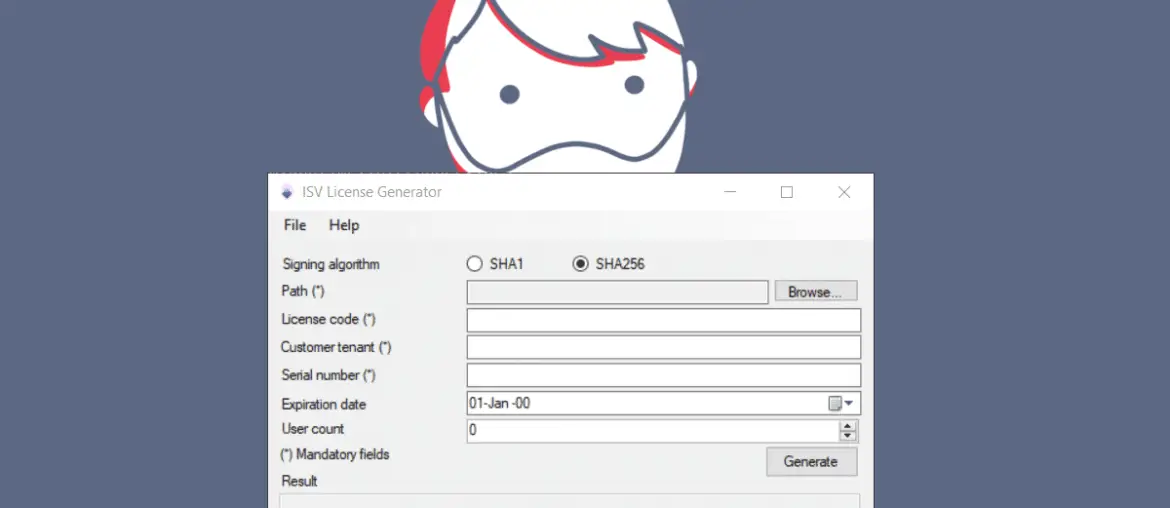

After a long, long, long, long, long wait, the Dynamics 365 F&O local development feature is finally here… in public preview. It’s a bit different from what we first heard about at MBAS in 2019… but it opens up a new scenario for developers.

I won’t go into much detail about the new unified developer experience, as it’s now called, but if you want to know what you need to use it and how to configure it, you can read this blog post from Aurélien Clere: Dynamics 365 FinOps Unified developer experience.

Today I’ll explain what Microsoft Dev Box is and how we can use it with the Dynamics 365 F&O local development tools. We will also look at the cost and at the scenarios in which we could benefit from using Microsoft Dev Box as our development environment. You know, the best of both worlds, or the worst… 🤣

But first…